General information about Intel Arc A580

We have already reviewed several Intel video cards. After a long break, Intel returned to the gaming graphics market with a whole line of products, including the flagship Arc A770, the Arc A750 one step below it, and the budget Arc A380. Previously, the company's graphics processors were mostly integrated into its own CPUs. A couple of years ago, however, Intel introduced the Arc line of desktop graphics cards, which have become competitive in mass-market segments.

Intel's desktop lineup includes the Arc A770, A750, A580 and A380. The Arc 3, Arc 5 and Arc 7 sub-series target entry-level, mid-range and upper-mid-range performance respectively. The release strategy for Arc cards was somewhat unusual: they were initially sold only in Asia, although Western reviewers also received samples. The budget Arc A380 came first and turned out to be rather weak, which may have heightened buyers' interest in the more powerful Arc A770 and Arc A750 that followed.

The Arc A580's arrival on the market was accompanied by software problems: driver instability and various glitches, such as game crashes and incorrect rendering of effects, including ray tracing. Despite these difficulties, let us take a closer look at this model.

The Arc A580 was announced in September 2022, but its sales start was considerably delayed. Aimed at the mass market and positioned as a budget solution, the card went on sale in the fall of last year at $179. Despite the attractive price, it arrived after the market was already well stocked with previous-generation AMD and Nvidia cards.

The Arc A580 differs from the older Arc A750 and Arc A770 mainly in performance; its feature set is the same. All three cards use the same ACM-G10 GPU with different numbers of active execution units. The Arc A580 has 24 Xe cores, compared with 28 and 32 in the older models. It nevertheless retains hardware ray tracing, XMX matrix acceleration and hardware video encoding and decoding, making it a complete gaming solution.

The Arc A580 delivers acceptable Full HD performance at high or maximum graphics settings. It also supports the XeSS and FSR upscaling technologies, which can raise frame rates in supported games.

Despite some initial struggles, the Arc A580 is an interesting proposition for budget gaming systems, and the $179 price tag makes it competitive.

| Arc A580 graphics accelerator | |
|---|---|
| Chip code name | ACM-G10 |
| Production technology | 6 nm (TSMC N6) |
| Number of transistors | 21.7 billion |
| Die area | 406 mm² |
| Architecture | unified, with an array of processors for stream processing of any type of data: vertices, pixels, etc. |
| DirectX hardware support | DirectX 12 Ultimate, Feature Level 12_2 |
| Memory bus | 256-bit: 8 independent 32-bit memory controllers supporting GDDR6 memory |
| GPU frequency | up to 2400 MHz |
| Computing blocks | 24 (of 32) Xe-Core multiprocessors, including 3072 (of 4096) cores for INT32 integer, FP16/FP32 floating-point and special-function calculations |
| Tensor blocks | 384 (of 512) XMX matrix cores for INT2/INT4/INT8/FP16/BF16 matrix calculations |
| Ray tracing blocks | 24 (of 32) RTU cores for calculating ray intersections with triangles and BVH bounding volumes |
| Texturing blocks | 192 (of 256) texture addressing and filtering units with support for FP16/FP32 components and trilinear and anisotropic filtering for all texture formats |
| Raster Operation Blocks (ROPs) | 12 (of 16) wide ROP blocks totaling 96 (of 128) pixels, with support for various anti-aliasing modes, including programmable ones, and for FP16/FP32 frame buffer formats |
| Monitor support | HDMI 2.0b and DisplayPort 1.4a (2.0 10G) |

| Arc A580 graphics card specifications | |
|---|---|
| Core frequency | 2000 (2400) MHz |
| Number of universal processors | 3072 |
| Number of texture units | 192 |
| Number of blending units (ROPs) | 96 |
| Effective memory frequency | 16.0 GHz |
| Memory type | GDDR6 |
| Memory bus | 256-bit |
| Memory | 8 GB |
| Memory bandwidth | 512 GB/s |
| Compute performance (FP32) | up to 12.3 teraflops |
| Theoretical maximum fill rate | 192 gigapixels/s |
| Theoretical texture sampling rate | 384 gigatexels/s |
| Bus | PCI Express 4.0 x16 |
| Connectors | one HDMI and three DisplayPort |
| Power consumption | up to 185 W |
| Additional power | at the manufacturer's discretion |
| Number of slots occupied in the system case | two |
| Recommended price | $179 |
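The derived rows of this table follow directly from the unit counts. The minimal Python sketch below reproduces them, assuming one pixel per ROP and one texel per TMU per clock at the 2000 MHz core frequency listed above; the helper names are illustrative and do not come from any Intel tool.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer across the bus times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def peak_rate(units: int, clock_mhz: float) -> float:
    """Peak rate in billions of operations per second, one operation per unit per clock."""
    return units * clock_mhz / 1000


CORE_CLOCK_MHZ = 2000  # core frequency from the table (boost up to 2400 MHz)

bandwidth = memory_bandwidth_gbs(256, 16.0)  # 256-bit bus, 16 Gbps effective GDDR6
pixel_rate = peak_rate(96, CORE_CLOCK_MHZ)   # 96 ROPs  -> gigapixels/s
texel_rate = peak_rate(192, CORE_CLOCK_MHZ)  # 192 TMUs -> gigatexels/s

print(f"Memory bandwidth: {bandwidth:.0f} GB/s")
print(f"Pixel fill rate:  {pixel_rate:.0f} Gpix/s")
print(f"Texture rate:     {texel_rate:.0f} Gtex/s")
```

At the 2400 MHz boost clock the same formulas give 230.4 gigapixels/s and 460.8 gigatexels/s.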

The name Arc A580 follows Intel's general naming scheme. The model sits in the middle of the lineup, below the more powerful Arc A750 and Arc A770 and above the Arc A380. Naming is complicated somewhat by the fact that the Arc A580 and both Arc A7 models use the same GPU at different performance levels. Perhaps Intel's original plans for the line were different and had to be adjusted.

The Arc A580 has no direct rivals among current-generation AMD and Nvidia cards. In price it is close to previous-generation solutions such as the Radeon RX 6600 and GeForce RTX 3050, while the new Radeon RX 7600 and GeForce RTX 4060 are both more expensive and faster.

The Arc A580 comes in a single configuration with 8 GB of video memory and a recommended price of $179. This amount of memory is considered optimal for this price segment and is sufficient for a range of graphics settings and resolutions. Although some modern games can use more video memory, 8 GB is acceptable in this price range, as practical use confirms.

Unlike the older models built on this chip, for which Intel released its own Limited Edition cards, the Arc A580 is not produced by Intel itself. Cards are made only by partners, including ASRock, Gunnir and Sparkle, who presented their solutions at launch. Gunnir cards in particular are sold in Asian markets, including our region. Intel has also stopped producing its Limited Edition Arc A770 and Arc A750, so only third-party cards based on its GPUs are now available.

Typical features of the Arc A580 include a dual-slot design, a PCIe 4.0 x16 interface and two 8-pin auxiliary power connectors. According to the specifications, maximum power consumption should not exceed 185 W. This declared value is quite high: by comparison, the GeForce RTX 3050 is rated at up to 130 W and the Radeon RX 6600 at 132 W. However, in our tests of the Gunnir model (and in similar models from other manufacturers), actual consumption was only about 130 W, 60 W less than the Arc A750. In practice, therefore, the Arc A580 is not inferior to its competitors in this respect.

The cards provide one HDMI and three DisplayPort outputs; we discuss these capabilities below as well.

Microarchitecture and features

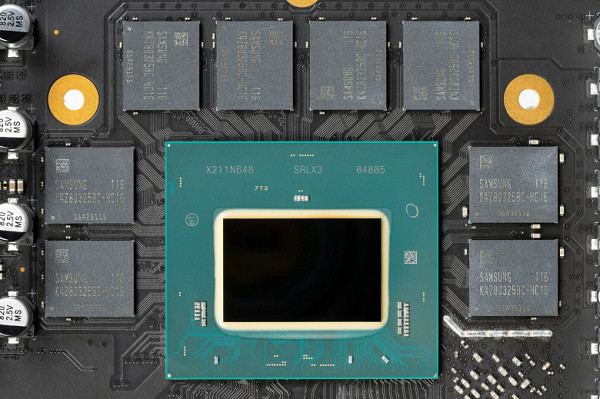

The Arc A580 uses the DG2-512 GPU, also known as ACM-G10. Of the Intel cards discussed here, only the Arc A380 uses the ACM-G11 GPU with eight Xe cores; all the other models use the ACM-G10 chip with different numbers of active units: 24, 28 and 32 Xe cores, with corresponding numbers of ray tracing units, stream processors and XMX units.

The ACM-G10 is manufactured by TSMC in Taiwan on a 6 nm process. The die area is 406 mm² and the transistor count is 21.7 billion. By comparison, Nvidia's AD103 has a smaller die (378.6 mm²) but more than twice as many transistors (45.9 billion), and the Intel GPU is less powerful. The Arc A580 also compares unfavorably with competitors in power consumption: its maximum of 185 W falls between the 200 W of the GeForce RTX 4070 and the 160 W of the GeForce RTX 4060 Ti, both of which are considerably faster cards.
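The transistor counts and die areas quoted above imply very different densities. This small Python check uses only the figures in that paragraph; part of the gap is explained by the two chips being built on different process nodes.

```python
def density_mtr_per_mm2(transistors_billion: float, area_mm2: float) -> float:
    """Transistor density in millions of transistors per square millimetre."""
    return transistors_billion * 1000 / area_mm2


acm_g10 = density_mtr_per_mm2(21.7, 406.0)   # Intel ACM-G10
ad103 = density_mtr_per_mm2(45.9, 378.6)     # Nvidia AD103

print(f"ACM-G10: {acm_g10:.1f} MTr/mm²")
print(f"AD103:   {ad103:.1f} MTr/mm²")
print(f"AD103 packs {ad103 / acm_g10:.2f}x more transistors per mm²")
```

This works out to roughly 53 million transistors per mm² for ACM-G10 versus roughly 121 million for AD103.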

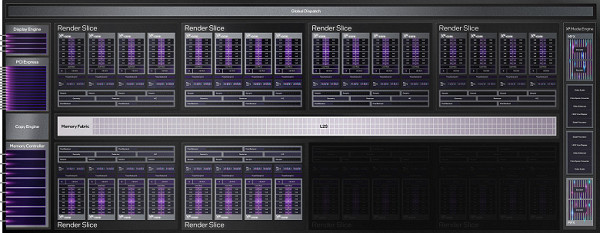

The Xe-HPG architecture used in the ACM-G10 was discussed in detail in our review of the flagship Arc A770. The Arc line is divided into the Arc 3, Arc 5 and Arc 7 sub-families, which differ in the number of execution units. The Arc A580 belongs to Arc 5 and uses the same ACM-G10 GPU as the Arc A770, but with only 24 of 32 Xe cores active, since two Render Slice units are disabled.

Structurally, Xe-HPG chips resemble Nvidia designs, with the Render Slice as the top-level organizational block. Each of the eight Render Slices contains four Xe cores and four ray tracing units, along with geometry processing and rasterization hardware. In the Arc A580, two slices are disabled, which lets Intel use partially defective dies that are unsuitable for the Arc A770 and Arc A750.

ACM-G10 version for Arc A580

The Arc A580 uses a cut-down ACM-G10 (DG2-512) with six active Render Slices and 24 Xe cores, which contain 3072 shader units, 192 TMUs and 96 ROPs. The chip also has 384 active cores for accelerating the matrix operations used in AI workloads and 24 hardware ray tracing units. The base GPU clock is 1700 MHz; the rated clock is 2000 MHz, and the GPU can boost as high as 2400 MHz. Note that although the Arc A580's base clock (1.7 GHz) is lower than the Arc A750's (2.05 GHz), the maximum clock of all three ACM-G10 cards is about 2.4 GHz.
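The unit counts in this paragraph follow from the Render Slice hierarchy. In the sketch below, the per-Xe-core and per-slice figures are our assumption, derived by dividing the full-chip totals from the specification table (4096 ALUs, 512 XMX, 32 RT units, 256 TMUs, 128 ROPs) by 32 Xe cores and 8 slices; the class and constant names are hypothetical.

```python
from dataclasses import dataclass

# Assumed per-unit counts, derived from the full ACM-G10 totals.
XE_CORES_PER_SLICE = 4
ALUS_PER_XE_CORE = 128      # 16 vector engines x 8 FP32 lanes
XMX_PER_XE_CORE = 16
RTU_PER_XE_CORE = 1
TMUS_PER_XE_CORE = 8
ROPS_PER_SLICE = 16


@dataclass
class GpuConfig:
    slices: int  # number of active Render Slices

    @property
    def xe_cores(self) -> int:
        return self.slices * XE_CORES_PER_SLICE

    def units(self) -> dict:
        cores = self.xe_cores
        return {
            "Xe cores": cores,
            "FP32 ALUs": cores * ALUS_PER_XE_CORE,
            "XMX engines": cores * XMX_PER_XE_CORE,
            "RT units": cores * RTU_PER_XE_CORE,
            "TMUs": cores * TMUS_PER_XE_CORE,
            "ROPs": self.slices * ROPS_PER_SLICE,
        }


a580 = GpuConfig(slices=6).units()   # two of eight slices disabled
a770 = GpuConfig(slices=8).units()   # full chip
for name, count in a580.items():
    print(f"{name}: {count} of {a770[name]}")
```

Passing `slices=7` reproduces the Arc A750 column of the lineup table (28 Xe cores, 3584 ALUs, 112 ROPs).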

The Arc A580 has the same strong memory subsystem as the Arc A750: 8 GB of GDDR6 on a 256-bit bus. The memory runs at an effective 16 Gbps, giving 512 GB/s of bandwidth, far more than competitors in this segment. The card also offers a full PCIe 4.0 x16 interface, whereas its direct competitors are limited to PCIe 4.0 x8. This is an advantage on older PCIe 3.0 platforms, where the Arc A580 can deliver better performance, especially with Resizable BAR enabled.
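To put the x16 versus x8 difference in numbers: PCIe 3.0 and 4.0 run at 8 and 16 GT/s per lane with 128b/130b encoding. This sketch computes approximate one-direction link bandwidth and ignores packet-level protocol overhead, so real throughput is somewhat lower.

```python
# Per-lane transfer rate in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gbs(generation: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s (protocol overhead ignored)."""
    return PCIE_GT_PER_S[generation] * ENCODING_EFFICIENCY * lanes / 8


for gen in (3, 4):
    for lanes in (8, 16):
        print(f"PCIe {gen}.0 x{lanes}: {pcie_bandwidth_gbs(gen, lanes):.1f} GB/s")
```

On a PCIe 3.0 board, an x8 card is limited to about 7.9 GB/s, while the Arc A580 keeps about 15.8 GB/s, the same as an x8 card gets on PCIe 4.0.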

The Arc A580 uses Intel's Xe-HPG architecture with hardware-accelerated ray tracing similar to Nvidia's approach. Dedicated units compute ray intersections with geometry organized in a hierarchical BVH structure, which makes tracing efficient. The GPU also has XMX matrix units that accelerate AI operations and are used by the XeSS upscaling technology. Together, these units make the Arc A580 competitive with its rivals in ray tracing and image upscaling.
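To illustrate why a BVH makes tracing efficient, here is a generic software sketch of ray traversal with the standard slab test for axis-aligned boxes. It makes no claim about how Intel's RT units work internally (they do this in fixed-function hardware and also test triangles); here the leaves are simply boxes, and the scene and names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec3                                # AABB minimum corner
    hi: Vec3                                # AABB maximum corner
    children: List["Node"] = field(default_factory=list)
    leaf_id: Optional[str] = None           # set only on leaf nodes


def ray_hits_box(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: intersect the ray's [t_near, t_far] interval with each axis slab."""
    t_near, t_far = 0.0, float("inf")
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            if o < a or o > b:
                return False                # parallel to this slab and outside it
            continue
        t1, t2 = (a - o) / d, (b - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far


def traverse(root: Node, origin: Vec3, direction: Vec3) -> Tuple[List[str], int]:
    """Return the leaves whose boxes the ray enters and the number of box tests."""
    stack, hits, tests = [root], [], 0
    while stack:
        node = stack.pop()
        tests += 1
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue                        # miss: the whole subtree is skipped
        if node.leaf_id is not None:
            hits.append(node.leaf_id)
        else:
            stack.extend(node.children)
    return hits, tests


def leaf(name: str, lo: Vec3, hi: Vec3) -> Node:
    return Node(lo, hi, leaf_id=name)


scene = Node((0, 0, 0), (10, 10, 10), [
    Node((0, 0, 0), (5, 10, 10), [leaf("A", (0, 0, 0), (5, 5, 5)),
                                  leaf("B", (0, 5, 5), (5, 10, 10))]),
    Node((5, 0, 0), (10, 10, 10), [leaf("C", (5, 0, 0), (10, 5, 5)),
                                   leaf("D", (5, 5, 5), (10, 10, 10))]),
])

hits, tests = traverse(scene, origin=(6.0, 1.0, 1.0), direction=(1.0, 0.0, 0.0))
print(hits, tests)
```

The ray starts inside the right half of the scene and travels along +x, so it reaches only leaf C. A single box test rejects the whole left subtree, and leaves A and B are never examined: 5 box tests instead of the 7 needed to visit every node.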

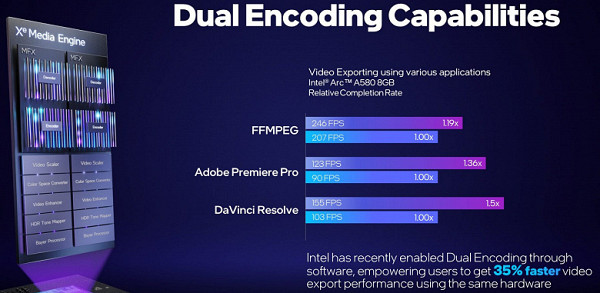

For video processing and display output, the Arc A580 offers the same capabilities as the older models in the line. It supports DisplayPort 2.0 and hardware AV1 encoding. The dual Xe Media Engine provides hardware-accelerated encoding and decoding of AV1, VP9, H.265 HEVC and H.264 AVC. The two engines can work together on a single stream, delivering 20% to 50% higher performance than competing solutions.

For display output, the Arc A580 has DisplayPort outputs (in the "2.0 10G Ready" variant, with UHBR 10 transfer rates of up to 40 Gbps) and HDMI. The Xe Display Engine can drive two monitors at up to 8K at 60 Hz, four monitors at 4K at 120 Hz, or four monitors at 1440p at 360 Hz.
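Here is a rough check of which of these modes fit into a single UHBR 10 link uncompressed. The sketch assumes 8-bit RGB (24 bits per pixel) and ignores blanking intervals, so real requirements are a few percent higher. It is arithmetic only and says nothing about how Intel's display engine actually configures each mode.

```python
UHBR10_GBPS_PER_LANE = 10.0
LANES = 4
CHANNEL_CODING = 128 / 132   # DisplayPort 2.0 UHBR rates use 128b/132b coding


def link_payload_gbps() -> float:
    """Usable UHBR 10 link rate after channel coding."""
    return UHBR10_GBPS_PER_LANE * LANES * CHANNEL_CODING


def pixel_data_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed active-pixel data rate; blanking intervals are ignored."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


capacity = link_payload_gbps()
modes = {
    "4K @ 120 Hz": (3840, 2160, 120),
    "1440p @ 360 Hz": (2560, 1440, 360),
    "8K @ 60 Hz": (7680, 4320, 60),
}
for name, (w, h, hz) in modes.items():
    need = pixel_data_gbps(w, h, hz)
    verdict = "fits" if need <= capacity else "exceeds the link, compression needed"
    print(f"{name}: {need:.1f} of {capacity:.1f} Gbps, {verdict}")
```

Under these assumptions, 4K at 120 Hz (about 23.9 Gbps) and 1440p at 360 Hz (about 31.9 Gbps) fit within the roughly 38.8 Gbps payload. 8K at 60 Hz (about 47.8 Gbps) does not fit, so such a mode would require Display Stream Compression.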

Performance evaluation and summary

In terms of peak compute performance, the Arc A580 is not far behind the older ACM-G10 models. The cut-down chip has six Render Slices, each with four Xe cores; each Xe core has 16 vector engines capable of eight FP32 operations per clock. Counting an FMA as two operations and multiplying by the clock speed gives a peak of up to 12.3 teraflops. The next model up, the Arc A750, reaches 14.7 teraflops, about 20% more.
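The calculation in this paragraph can be written out directly. In this sketch, the clocks (2.0 GHz for the A580, 2.05 GHz for the A750) are our assumption; we chose them because they reproduce the 12.3 and 14.7 teraflop figures quoted. At the common 2.4 GHz boost clock, both values would be higher.

```python
def peak_fp32_tflops(xe_cores: int, clock_ghz: float,
                     vector_engines_per_core: int = 16, lanes_per_engine: int = 8) -> float:
    """Peak FP32 throughput: ALUs x 2 FLOPs per FMA x clock."""
    alus = xe_cores * vector_engines_per_core * lanes_per_engine
    return alus * 2 * clock_ghz / 1000   # GFLOPS -> TFLOPS


a580 = peak_fp32_tflops(24, 2.0)    # 6 slices x 4 Xe cores = 3072 ALUs at 2.0 GHz
a750 = peak_fp32_tflops(28, 2.05)   # 28 Xe cores = 3584 ALUs at 2.05 GHz

print(f"Arc A580: {a580:.1f} TFLOPS")
print(f"Arc A750: {a750:.1f} TFLOPS")
print(f"A750 advantage: {(a750 / a580 - 1) * 100:.0f}%")
```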

On paper, the Arc A580 is competitive with rivals such as the GeForce RTX 3060 (12.7 teraflops peak) and the Radeon RX 6600 (8.9 teraflops). Peak figures do not always reflect real gaming performance, however, and we compare the cards in more detail later. First, let's look at the main specifications of the entire Intel lineup in the table.

| | Arc A380 | Arc A580 | Arc A750 | Arc A770 |
|---|---|---|---|---|
| GPU model | ACM-G11 | ACM-G10 | ACM-G10 | ACM-G10 |
| Number of Xe cores | 8 | 24 | 28 | 32 |
| Number of FP32 units | 1024 | 3072 | 3584 | 4096 |
| Number of RT units | 8 | 24 | 28 | 32 |
| Number of XMX units | 128 | 384 | 448 | 512 |
| Number of TMUs | 64 | 192 | 224 | 256 |
| Number of ROPs | 32 | 96 | 112 | 128 |
| Base GPU frequency, MHz | 2000 | 1700 | 2050 | 2100 |
| GPU turbo frequency, MHz | 2050 | 2400 | 2400 | 2400 |
| Memory capacity, GB | 6 | 8 | 8 | 8/16 |
| Memory bus width, bits | 96 | 256 | 256 | 256 |
| Memory bandwidth, GB/s | 186 | 512 | 512 | 512/560 |
| Interface | PCIe 4.0 x8 | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 |
| Power consumption, W | 75 | 185 | 225 | 225 |
| Price, $ | 149 | 179 | 289 | 329/349 |

It is easy to see that, apart from the declared base clocks, the theoretical gap between the Arc A580 and Arc A750 is under 20% in every respect: the A750 has about 17% more execution units of each type, and memory bandwidth is identical. The base (game) clocks, where the Arc A580 trails the Arc A750 (1700 vs 2050 MHz), may cause some confusion. In practice, however, the cards run at their turbo frequency, which is the same 2.4 GHz for all three ACM-G10 models. The real performance gap between the Arc A580 and Arc A750 is therefore likely to be even smaller, since it often depends on how efficiently the different GPU execution units are used. We can expect the Arc A580 to perform close to the Arc A750 in games, but how close?
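This comparison can be checked row by row against the lineup table. The sketch uses the A580 and A750 values from the table: every execution-unit count differs by the same 7:6 ratio, turbo clock and memory bandwidth are equal, and only the base clock gap exceeds 20%.

```python
# (Arc A580, Arc A750) values from the lineup table.
SPECS = {
    "Xe cores": (24, 28),
    "FP32 units": (3072, 3584),
    "RT units": (24, 28),
    "XMX units": (384, 448),
    "TMUs": (192, 224),
    "ROPs": (96, 112),
    "Base clock, MHz": (1700, 2050),
    "Turbo clock, MHz": (2400, 2400),
    "Memory bandwidth, GB/s": (512, 512),
}


def a750_lead_percent(a580: float, a750: float) -> float:
    """How much higher the Arc A750 value is, in percent of the Arc A580 value."""
    return (a750 / a580 - 1) * 100


for name, (a580, a750) in SPECS.items():
    print(f"{name:24s} +{a750_lead_percent(a580, a750):4.1f}%")
```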

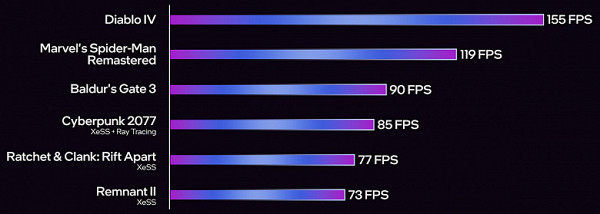

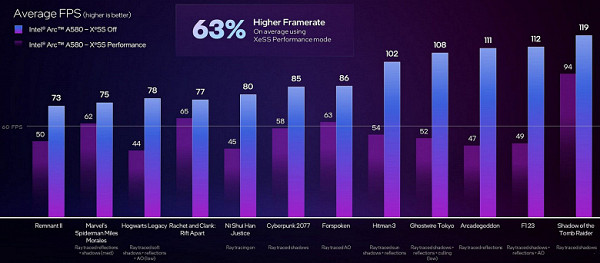

It's interesting to note that Intel itself avoids comparisons between the Arc A580 and other GPUs, although it does highlight the ability to reach over 60 FPS in modern games such as Marvel's Spider-Man: Remastered, Ratchet & Clank: Rift Apart and others. Support for XeSS (Intel Xe Super Sampling) image scaling technology significantly helps achieve high performance in optimized games, where enabling XeSS can more than double the performance of a new graphics card.

The Arc A580 provides sufficient performance for Full HD gaming at maximum or at least high graphics settings. Enabling XeSS is particularly important for a stable 60 frames per second: it raises frame rates from around 45-50 FPS to a comfortable 70-80 FPS or even higher, depending on the game.

Overall, the Arc A580 has enough performance for Full HD gaming at maximum or near-maximum settings. At 2560×1440, however, you will have to lower graphics quality to maintain a stable 60 FPS or more in modern games. Compared with its competitors, the card handles ray tracing better, especially relative to AMD solutions, but trails some of them in overall performance.

In terms of price/performance, the Arc A580 is competitive in its segment. At a starting price of $179, it is an alternative to more expensive Nvidia and AMD cards. It is about 20-25% faster than the GeForce RTX 3050 and approaches the Radeon RX 6600. On the other hand, the Arc A750, available for slightly more money, offers a noticeable performance boost.

The Arc A580 also faces competition within the Arc family itself, especially from the Arc A750, which would become an even more attractive choice if its price came down. Overall, the Intel Arc A580 is an interesting solution, especially for buyers looking for an entry-level graphics card that can also be used for professional tasks such as rendering and AI. Intel is expected to release its next generation of graphics cards to improve its competitiveness in the market.

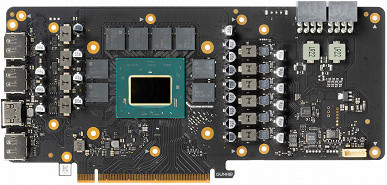

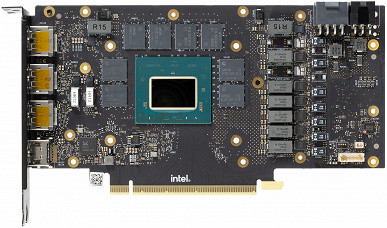

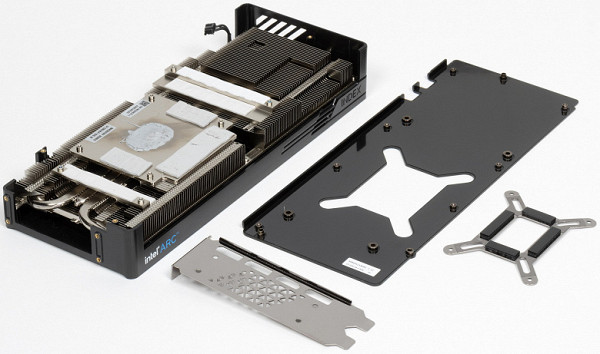

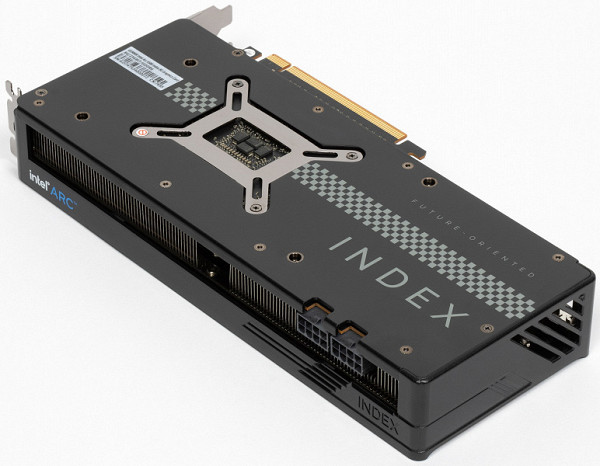

Gunnir Intel Arc A580 Index 8GB Video Card

Shenzhen Lanji Technology Development, known under the Gunnir brand, was founded in 2002 and is headquartered in Shenzhen, China. The name Gunnir comes from Gungnir, the spear of Odin, the supreme god of Norse mythology. This magical spear could hit any target, piercing all shields and armor. The company's logo combines a spearhead, symbolizing quality and strength, with a blue lightning bolt inside, representing speed. The company initially made all-in-one PCs and mini-PCs, but its main focus was a research and development center working on orders from IT companies. Several years ago, Lanji (Gunnir) became Intel's key graphics partner in mainland China, and all Intel Arc products in that market currently go through Lanji. The company therefore now concentrates on video cards based on Intel GPUs, while its R&D center continues to handle OEM orders.

Object of study: commercially produced graphics accelerator (video card) Gunnir Intel Arc A580 Index with 8 GB of video memory on a 256-bit GDDR6 bus.

| Gunnir Intel Arc A580 Index 8GB 256-bit GDDR6 | | |
|---|---|---|
| Parameter | Value | Nominal value (reference) |
| GPU | Arc A580 (ACM-G10) | |
| Interface | PCI Express 4.0 x16 | |
| GPU operating frequency, MHz | 2000 (boost) / 2400 (max) | 2000 (boost) / 2400 (max) |
| Memory operating frequency (physical (effective)), MHz | 2000 (16000) | 2000 (16000) |
| Memory bus width, bits | 256 | |
| Number of computational units in the GPU | 24 | |
| Number of ALUs per unit | 128 | |
| Total number of ALUs | 3072 | |
| Number of texturing units (BLF/TLF/ANIS) | 192 | |
| Number of rasterization units (ROPs) | 96 | |
| Number of ray tracing units | 24 | |
| Number of tensor (XMX) units | 384 | |
| Dimensions, mm | 250×114×40 | 265×100×38 |
| Number of slots occupied in the system unit | 2 | 2 |
| PCB color | black | black |
| Peak power consumption in 3D, W | 134 | 140 |
| Power consumption in 2D mode, W | 20 | 20 |
| Power consumption in sleep mode, W | 6 | 6 |
| Noise level in 3D (maximum load), dBA | 33.8 | 25.0 |
| Noise level in 2D (video viewing), dBA | 18.0 | 18.0 |
| Noise level in 2D (idle), dBA | 18.0 | 18.0 |
| Video outputs | 1×HDMI 2.1, 3×DisplayPort 2.0 | 1×HDMI 2.1, 3×DisplayPort 2.0 |
| Multi-GPU support | No | |
| Maximum number of monitors for simultaneous output | 4 | 4 |
| Power: 8-pin connectors | 2 | 1 |
| Power: 6-pin connectors | 0 | 1 |
| Power: 16-pin connectors | 0 | 0 |
| Card weight with delivery set (gross), kg | 1.1 | 1.2 |
| Card weight (net), kg | 0.87 | 0.9 |
| Maximum resolution/frequency, DisplayPort | 3840×2160@144 Hz, 7680×4320@60 Hz | |
| Maximum resolution/frequency, HDMI | 3840×2160@144 Hz, 7680×4320@60 Hz | |

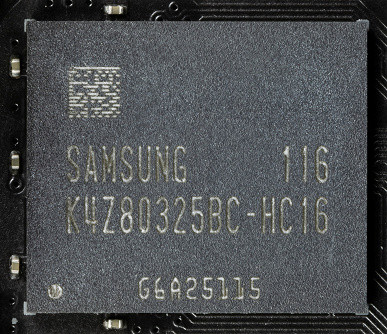

Memory

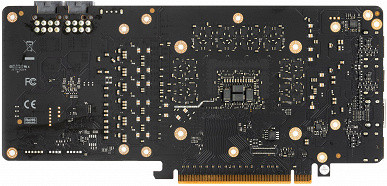

The card carries 8 GB of GDDR6 SDRAM in eight 8 Gbit chips on the front of the PCB. The Samsung GDDR6 chips are rated for a nominal frequency of 2000 (16000) MHz.
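The chip count and density stated above account for both the capacity and the bus width. This short sketch assumes each GDDR6 chip uses its full 32-bit interface (no clamshell mode, consistent with all chips sitting on one side of the board).

```python
CHIPS = 8
CHIP_DENSITY_GBIT = 8      # 8 Gbit per chip, as stated above
CHIP_IO_BITS = 32          # assumption: each GDDR6 chip uses its full x32 interface
DATA_RATE_GBPS = 16.0      # effective 16000 MHz = 16 Gbps per pin

capacity_gb = CHIPS * CHIP_DENSITY_GBIT / 8           # gigabits -> gigabytes
bus_width_bits = CHIPS * CHIP_IO_BITS
per_chip_gbs = CHIP_IO_BITS * DATA_RATE_GBPS / 8      # GB/s delivered by one chip

print(f"{capacity_gb:.0f} GB on a {bus_width_bits}-bit bus")
print(f"{per_chip_gbs:.0f} GB/s per chip, {per_chip_gbs * CHIPS:.0f} GB/s total")
```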

Card features and comparison with Intel Arc A750

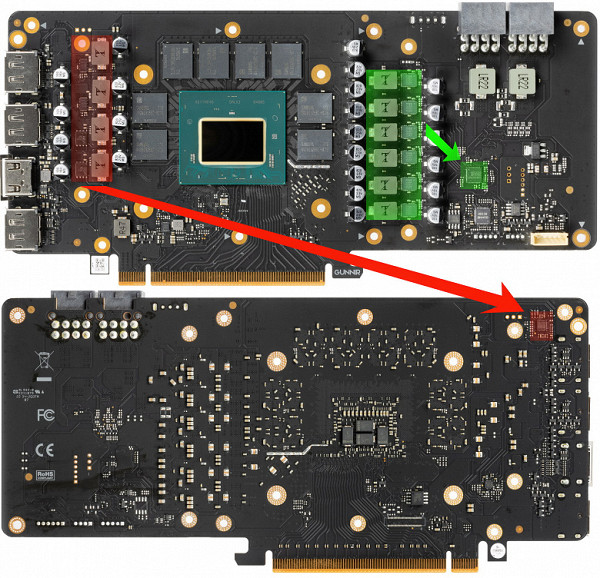

We compare Intel cards positioned close to each other, which is quite logical: the main differences lie only in how far the GPU is cut down and in operating frequencies. Gunnir's engineers evidently used their own PCB design, which differs only slightly from the reference one, mainly in the power delivery section.

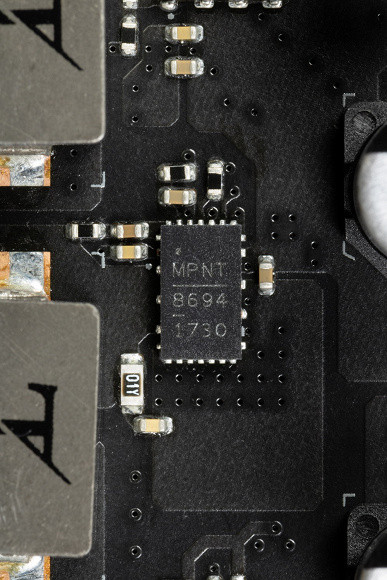

The GPU carries coded markings.

The Gunnir card has 10 power phases in total: 6 for the core and 4 for the memory chips.

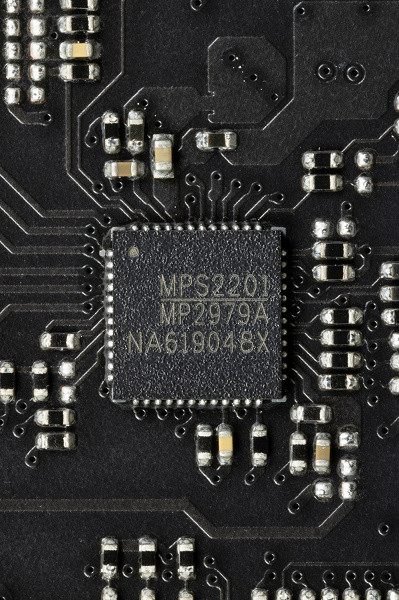

The core power circuit is shown in green, and the memory power supply is shown in red. Core power management is carried out using an 8-phase PWM controller MP2979A (Monolithic Power Systems), located on the front side of the card.

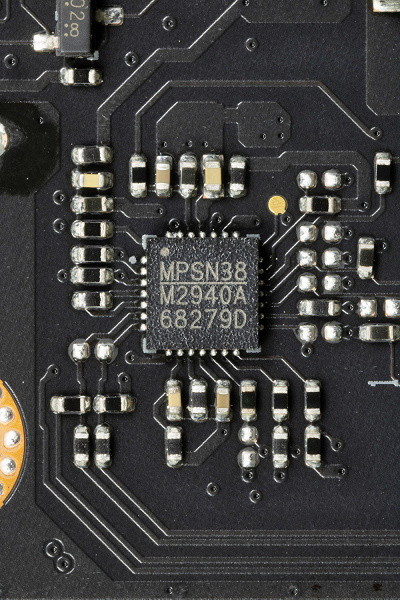

The 4-phase power supply circuit for the memory chips is managed by another PWM controller from the same company, located on the reverse side of the PCB.

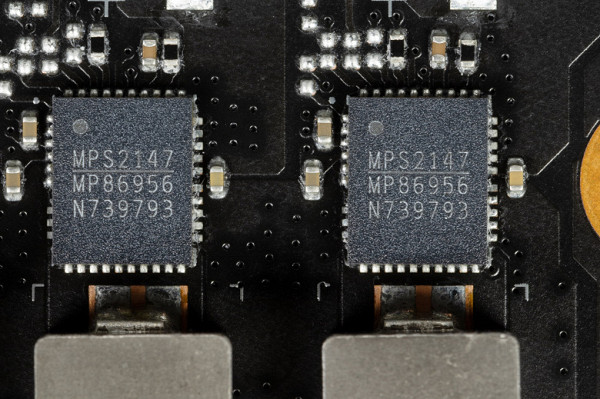

The core power converter uses high-end MP86956 DrMOS assemblies (Monolithic Power Systems), each rated for up to 70 A.

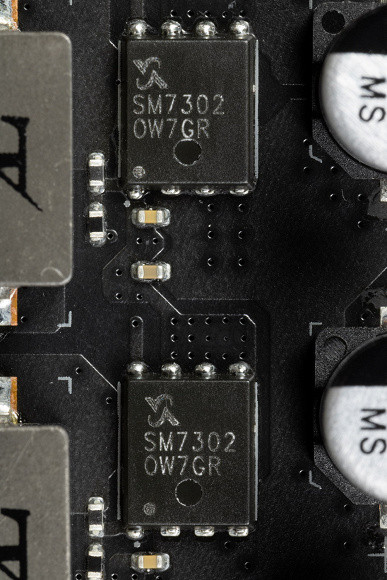

The power supply circuit for the memory chips is divided into two blocks. Two phases are equipped with DrMOS chips (also MPS) designed for a maximum current of up to 50A, while the other two phases use Sinopower MOSFETs with a similar maximum load of up to 50A.

We did not find a separate controller for monitoring the card. Perhaps this functionality is implemented by the GPU itself.

The card is fairly compact, notably its 40 mm thickness, so it occupies only two slots in the system unit.

The video card is equipped with a standard set of video outputs: three DisplayPort ports (version 2.0) and one HDMI port (version 2.1).

Note that HDMI 2.1 is not implemented natively but through DisplayPort 2.0: the card carries a signal converter chip marked Gunnir. The controller is probably made by a third-party manufacturer and relabeled by Gunnir.

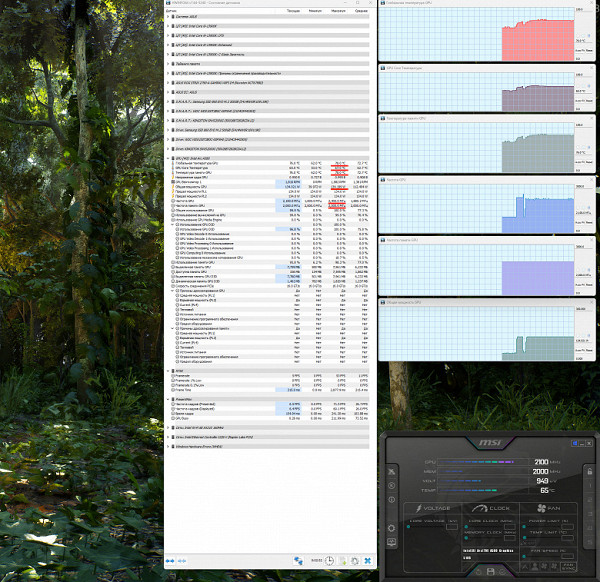

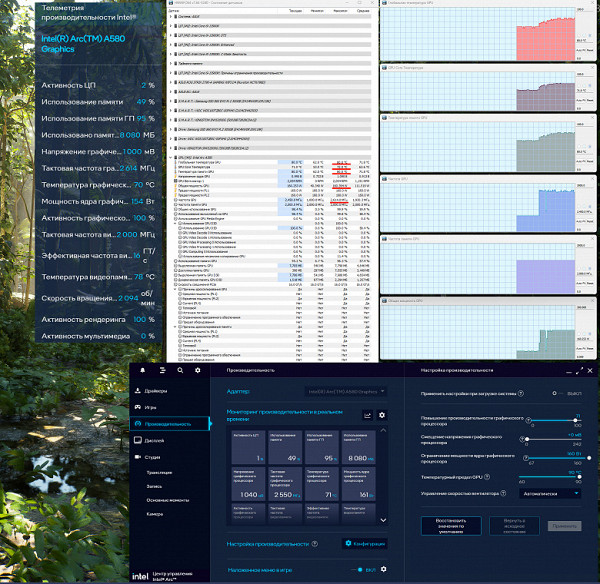

Factory GPU and memory frequencies match the reference values. Using the settings panel in Intel's drivers (which only allows raising the GPU frequency), we overclocked the card: the core ran stably at up to 2614 MHz, 8.9% above the reference value, which yielded an average performance gain of 8%. Note that the performance of Intel cards depends heavily on enabling Resizable BAR in the motherboard's BIOS Setup. This technology lets the CPU access the entire video memory directly rather than through a 256 MB window.
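Relating the reported performance gain to the clock increase shows how well the card scales with frequency. In this sketch, a factor of 1.0 would mean perfectly linear scaling. A value somewhat below 1 is plausible here, since only the GPU clock could be raised while the memory stayed at its stock frequency.

```python
REFERENCE_MAX_MHZ = 2400
OVERCLOCKED_MHZ = 2614
MEASURED_GAIN = 0.08          # average performance gain reported above

clock_gain = OVERCLOCKED_MHZ / REFERENCE_MAX_MHZ - 1
scaling = MEASURED_GAIN / clock_gain   # 1.0 would mean perfectly linear scaling

print(f"Clock gain: {clock_gain:.1%}")
print(f"Performance gained per unit of clock gained: {scaling:.2f}")
```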

The Gunnir card drew up to 134 W in our tests. Power is supplied through two 8-pin PCIe power connectors.
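The connector configuration leaves plenty of headroom. This sketch uses the PCI Express limits of 75 W for an x16 slot and 150 W per 8-pin auxiliary connector, and compares that budget with the measured peak and the specified maximum.

```python
SLOT_LIMIT_W = 75         # PCIe x16 slot (PCI Express CEM specification)
EIGHT_PIN_LIMIT_W = 150   # per 8-pin PCIe auxiliary power connector

available_w = SLOT_LIMIT_W + 2 * EIGHT_PIN_LIMIT_W

for label, draw_w in (("measured peak", 134), ("specified maximum", 185)):
    print(f"{label}: {draw_w} W of {available_w} W ({draw_w / available_w:.0%})")
```

On paper, the slot plus a single 8-pin connector (225 W) would already cover the 185 W specification, so the second connector mainly adds headroom.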

The card can be monitored and configured with Intel's own utility, included in its driver package.

Heating and cooling

The cooling system is based on a multi-section nickel-plated heatsink with heat pipes that distribute heat across the fins. The heatsink is relatively thin.

The heat pipes are soldered to a large nickel-plated base plate that cools both the GPU and the memory chips (via thermal pads). The VRM power converters are cooled by dedicated heatsinks attached to the main heatsink.

The backplate mainly protects the PCB and is also an integral part of the card's overall design.

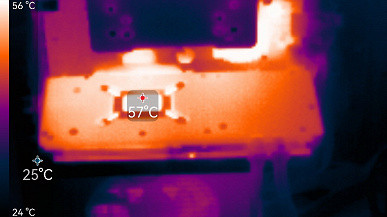

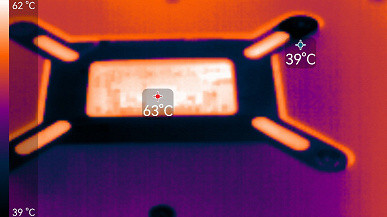

Temperature monitoring:

After two hours of testing at maximum load, the core temperature did not exceed 67°C (hot spot 78°C), and the memory chips reached 78°C, a satisfactory result for cards of this class. The card's power consumption reached 134 W.

We recorded the eight-minute warm-up and sped the video up 50 times.

The maximum heating was observed in the central part of the PCB, as well as near the PCIe connector.

With manual overclocking, temperatures rose slightly: the core reached 72°C (hot spot 80°C) and the memory chips 80°C.

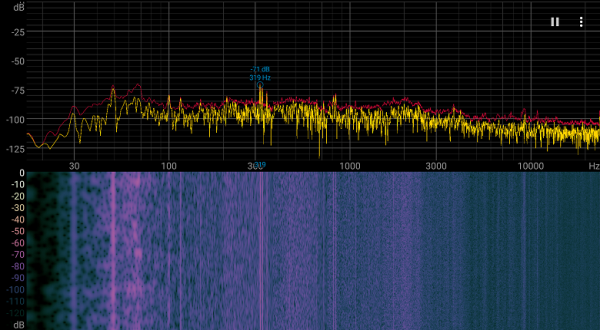

Noise

Our noise measurement method requires a soundproofed room with minimal sound reflections and no mechanical noise from the system unit. The background level is 18 dBA, the total noise in the room including the sound level meter's own noise floor. Measurements are taken 50 cm from the video card, level with the cooling system.

Measurement modes include:

- Idle mode in 2D: using an Internet browser with the iXBT.com website open, a Microsoft Word window and several Internet communicators.

- 2D mode with movie viewing: using SmoothVideo Project (SVP) with hardware decoding and insertion of intermediate frames.

- 3D mode with maximum load on the accelerator: using the FurMark test.

Noise levels are graded as follows:

- less than 20 dBA: relatively silent

- 20 to 25 dBA: very quiet

- from 25 to 30 dBA: quiet

- from 30 to 35 dBA: clearly audible

- from 35 to 40 dBA: loud, but tolerable

- above 40 dBA: very loud

In 2D idle mode, the temperature did not exceed 52-54 °C, the fans were mostly stopped, and the noise level matched the 18 dBA background. However, the fans periodically spin up for a short time and then stop again. This is an obvious flaw; the fan-stop threshold needs more careful tuning.
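We do not know how Gunnir's firmware decides when to stop the fans. The usual fix for this kind of start/stop cycling is hysteresis, which uses separate start and stop temperatures. The Python sketch below contrasts hysteresis with a single threshold. The thresholds (60 °C start, 50 °C stop, 54 °C single) and the temperature readings are hypothetical, chosen around the idle range we observed.

```python
from typing import Iterable, List


class HysteresisFan:
    """Zero-RPM fan control with separate start and stop thresholds (illustrative values)."""

    def __init__(self, start_c: float = 60.0, stop_c: float = 50.0) -> None:
        if stop_c >= start_c:
            raise ValueError("stop threshold must be below start threshold")
        self.start_c = start_c
        self.stop_c = stop_c
        self.spinning = False

    def update(self, temp_c: float) -> bool:
        if not self.spinning and temp_c >= self.start_c:
            self.spinning = True
        elif self.spinning and temp_c <= self.stop_c:
            self.spinning = False
        return self.spinning


def single_threshold(temps: Iterable[float], threshold_c: float = 54.0) -> List[bool]:
    """Naive control: fans run whenever the temperature is at or above one threshold."""
    return [t >= threshold_c for t in temps]


def switch_count(states: List[bool]) -> int:
    """Number of start/stop transitions in a sequence of fan states."""
    return sum(1 for a, b in zip(states, states[1:]) if a != b)


idle_temps = [52, 54, 53, 55, 53, 54, 52]   # hypothetical idle readings, °C
fan = HysteresisFan()
print("single threshold:", switch_count(single_threshold(idle_temps)))
print("hysteresis:      ", switch_count([fan.update(t) for t in idle_temps]))
```

With a single threshold, the fans toggle six times on these readings. With hysteresis they stay off, and once started they keep running until the temperature falls to the lower threshold.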

When watching a movie with hardware decoding, no changes were observed.

In the maximum 3D load mode, the temperature reached 67/78/78 °C (core/hot spot/memory). The fans reached a speed of 1982 revolutions per minute, and the noise level increased to 33.8 dBA, which is clearly audible, but not too loud.

The noise spectrum is fairly smooth, with a few minor peaks, and subjectively no annoying overtones are audible.

Keep in mind that the card's heat stays inside the system unit, so a well-ventilated case is highly desirable.

Lighting

The card has no lighting.

Delivery and packaging

Besides the card, the package contains only a short user manual.

Testing: synthetic tests

We tested the new Intel graphics card using our synthetic benchmark suite, which is constantly updated and expanded. Included are benchmarks that evaluate the performance of ray tracing, as well as resolution scaling and performance enhancement technologies such as DLSS, XeSS, and FSR. Semi-synthetic tests include subtests from the 3DMark package, such as Time Spy, Port Royal, DX Raytracing, Speed Way and others.

Synthetic tests were carried out on the following video cards:

- Intel Arc A580 with default settings.

- Intel Arc A750 with default settings.

- Intel Arc A380 with default settings.

- GeForce RTX 3050 with default settings.

- Radeon RX 6600 with default settings.

To analyze the performance of the Arc A580 video card, we selected competing models from Nvidia (GeForce RTX 3050) and AMD (Radeon RX 6600). In some cases, more powerful video cards were used, such as GeForce RTX 3060 and Radeon RX 6600 XT, if there were no results from lower-end solutions.

We also include the Intel Arc A380 and Arc A750, which represent the lower and upper segments of the Intel Arc lineup.

Our test suite is under constant development and we welcome informed suggestions for improvement.

3DMark Vantage test

Over the years we have continued to use legacy synthetic benchmarks, including those from 3DMark Vantage, which often provide unique and interesting data not found in more modern benchmarks. The feature tests in this suite use DirectX 10, remain fairly relevant, and yield important conclusions when analyzing new video cards.

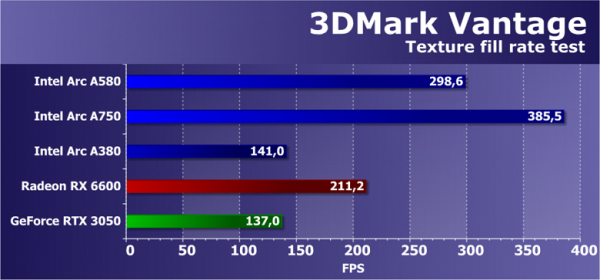

Feature Test 1: Texture Fill

This test measures the performance of texture fetch units. The process involves filling a rectangle with values read from a small texture using multiple texture coordinates that change every frame.

Cards from different vendors and generations usually perform well in Futuremark's texture test, which shows results close to the theoretical figures, though sometimes somewhat below them for certain GPUs. Although Intel's drivers are not perfect in DX10 applications, the Arc cards' results in this benchmark are fine. The only notable point is the very large gap between the A380 and the A580/A750: the smaller GPU is noticeably slower.

The Radeon and GeForce chosen for their similar prices differ considerably in this test, and the RTX 3050 is clearly weaker because it has fewer texture units. Intel's larger GPUs, however, are much more complex and have an unusual balance of execution units: they have more texture units than competitors, and the A580's operating frequency is quite high. The Arc A580 trailed the A750 by roughly the margin theory predicts and still finished second: it is more than twice as fast as the RTX 3050 and almost one and a half times as fast as the RX 6600.
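For context, here are the theoretical texture rates behind these results. The sketch assumes one filtered texel per TMU per clock at each card's maximum clock. The Arc figures come from this review; the RTX 3050 (80 TMUs, 1777 MHz boost) and RX 6600 (112 TMUs, 2491 MHz boost) figures are their commonly published specifications, which we take as assumptions here.

```python
# (TMUs, maximum clock in MHz); competitor values are assumed published specs.
CARDS = {
    "Arc A750": (224, 2400),
    "Arc A580": (192, 2400),
    "Radeon RX 6600": (112, 2491),
    "GeForce RTX 3050": (80, 1777),
    "Arc A380": (64, 2050),
}


def texel_rate_gtex(tmus: int, clock_mhz: float) -> float:
    """Peak texture rate assuming one filtered texel per TMU per clock."""
    return tmus * clock_mhz / 1000


rates = {name: texel_rate_gtex(*spec) for name, spec in CARDS.items()}
for name, rate in rates.items():
    print(f"{name:18s} {rate:6.1f} Gtex/s")
print(f"A580 vs RTX 3050: {rates['Arc A580'] / rates['GeForce RTX 3050']:.2f}x")
print(f"A580 vs RX 6600:  {rates['Arc A580'] / rates['Radeon RX 6600']:.2f}x")
```

The theoretical leads (about 3.2x and 1.65x) are larger than the measured ones. This is consistent with the observation above that measured results sometimes fall short of theoretical figures.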

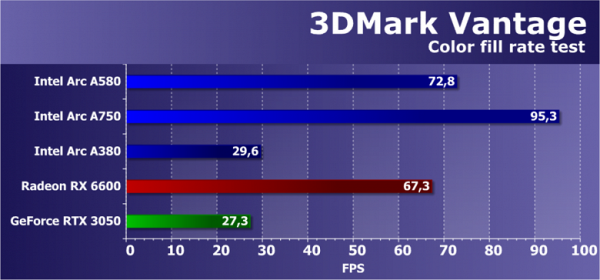

Feature Test 2: Color Fill

The second task is a fill rate test. It uses a very simple pixel shader that does not limit performance. The interpolated color value is written to an off-screen buffer (render target) with alpha blending. The buffer uses the FP16 format (16 bits per channel) most commonly used in games with HDR rendering, so this test is quite modern.

The second 3DMark Vantage subtest measures the performance of the ROP units, largely independently of video memory bandwidth, and in this case bandwidth had no clear impact. The AMD and Nvidia cards again differ greatly in speed (this time in filling the frame with pixels), which suggests that the RTX 3050 is clearly overpriced.

The Arc A580 trailed the A750 for the obvious reason of having fewer ROPs; everything is as expected here. But even with some ROPs disabled, the card we are examining today still has more of them than competing AMD and Nvidia solutions. So once again the new card shows a serious advantage, as in the previous test. There is no point in comparing it with the RTX 3050: even the smaller A380 outperformed the Nvidia card! The Radeon RX 6600, however, did well and came quite close to the A580.
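A simple peak fill-rate model, assuming one pixel per ROP per clock at each card's maximum clock, explains the A380 result. The Arc figures come from this review; the RTX 3050 (32 ROPs, 1777 MHz) and RX 6600 (64 ROPs, 2491 MHz) figures are their commonly published specifications and are assumptions here.

```python
# (ROPs, maximum clock in MHz); competitor values are assumed published specs.
CARDS = {
    "Arc A580": (96, 2400),
    "Radeon RX 6600": (64, 2491),
    "Arc A380": (32, 2050),
    "GeForce RTX 3050": (32, 1777),
}


def pixel_rate_gpix(rops: int, clock_mhz: float) -> float:
    """Peak fill rate assuming one pixel per ROP per clock."""
    return rops * clock_mhz / 1000


for name, (rops, clock) in CARDS.items():
    print(f"{name:18s} {pixel_rate_gpix(rops, clock):6.1f} Gpix/s")
```

With the same number of ROPs, the A380's higher clock is enough to put it ahead of the RTX 3050. The measured gap between the A580 and RX 6600 is smaller than the roughly 1.45x this model predicts. Because the test blends into an FP16 target, per-ROP throughput for that format may differ between architectures, so this model is only a rough guide.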

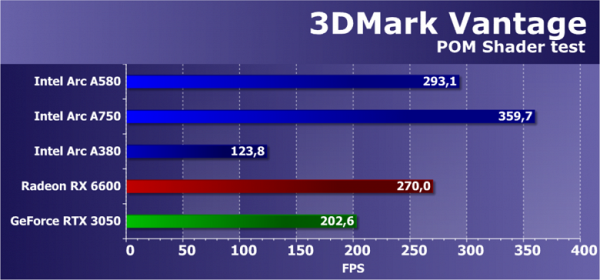

Feature Test 3: Parallax Occlusion Mapping

This is one of the most interesting feature tests, since a similar technique has long been used in games. It draws a single quadrilateral (more precisely, two triangles) using Parallax Occlusion Mapping, which simulates complex geometry. It relies on fairly resource-intensive ray tracing (marching a ray through the surface) and a high-resolution depth map. The surface is also shaded with the heavy Strauss algorithm. The result is a very complex, GPU-heavy pixel shader with numerous texture samples during ray tracing, dynamic branching and complex Strauss lighting calculations.
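The details of 3DMark's shader are not public to us. As an illustration of the technique, here is a CPU-side Python sketch of a common parallax occlusion mapping variant: a linear search through equal depth layers followed by interpolation between the last two samples. The Strauss lighting step is omitted, and the function and parameter names are hypothetical.

```python
from typing import Callable, Tuple

DepthFn = Callable[[float, float], float]  # 0.0 = top of surface, 1.0 = deepest


def parallax_occlusion_uv(depth: DepthFn, uv: Tuple[float, float],
                          view_ts: Tuple[float, float, float],
                          height_scale: float = 0.1,
                          layers: int = 32) -> Tuple[float, float]:
    """Return texture coordinates displaced by parallax occlusion mapping.

    view_ts is the tangent-space direction from the surface point toward the
    eye (z > 0). The ray is marched through `layers` equal depth slices until
    it falls below the depth map, then the last two samples are interpolated.
    """
    vx, vy, vz = view_ts
    layer_depth = 1.0 / layers
    # UV offset per layer; the full offset corresponds to reaching depth 1.0.
    du = vx / vz * height_scale / layers
    dv = vy / vz * height_scale / layers

    u, v = uv
    ray_depth = 0.0
    sampled = depth(u, v)
    for _ in range(layers):              # linear search: one texture fetch per step
        if ray_depth >= sampled:
            break
        u, v = u - du, v - dv
        ray_depth += layer_depth
        sampled = depth(u, v)

    # Refine between the last sample above the surface and the first below it.
    pu, pv = u + du, v + dv
    after = sampled - ray_depth
    before = depth(pu, pv) - ray_depth + layer_depth
    denom = after - before
    weight = after / denom if denom != 0 else 0.0
    return (pu * weight + u * (1.0 - weight), pv * weight + v * (1.0 - weight))


flat = lambda u, v: 0.5                  # flat surface sunk to half depth
print(parallax_occlusion_uv(flat, (0.5, 0.5), (0.0, 0.0, 1.0)))  # looking straight down
print(parallax_occlusion_uv(flat, (0.5, 0.5), (1.0, 0.0, 1.0)))  # oblique view
```

Looking straight down leaves the coordinates unchanged. An oblique view shifts them by about depth × scale × vx/vz = 0.05. Each loop iteration is a dependent texture fetch, which is why this kind of shader is so demanding.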

The results of this 3DMark Vantage test do not depend solely on math throughput, branching efficiency or texture fetch rate, but on several parameters at once. High speed in this task requires a well-balanced GPU that runs complex shaders efficiently, and this is exactly what Intel's first high-performance GPU lacks. The chip is not optimally balanced, and its organization does not deliver the same efficiency as competing designs. However, because Intel uses much more complex GPUs than its competitors in the same price range, its cards still look quite good.

In this test, the Arc A580, second from the bottom of Intel's lineup, again performed as expected, trailing the older A750 (built on the same GPU, cut down to a different degree) in line with their theoretical peak performance. As for competitors, the picture is familiar: the new product is clearly faster than the RTX 3050, while the RX 6600 comes much closer. The gap between all solutions has narrowed considerably, since this subtest is much closer to real games. The A580 is far ahead of the AMD and Nvidia cards in peak compute speed and other theoretical metrics, but its lower efficiency brings it closer to them, especially to the RX 6600.

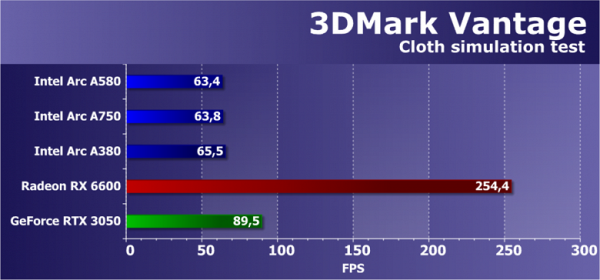

Feature Test 4: GPU Cloth

The fourth subtest is interesting because it computes physical interactions (cloth simulation) on the GPU. The simulation is vertex-based, combining vertex and geometry shaders over several passes, with stream out used to pass vertices from one simulation pass to the next. The test therefore measures vertex and geometry shader performance as well as stream out speed.
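The multi-pass structure can be illustrated with a small CPU sketch in Python. It assumes position Verlet integration plus distance-constraint relaxation (a common cloth technique, not necessarily what 3DMark uses) and simplifies the cloth grid to a single hanging chain. Each function reads one set of positions and returns a new one, which is the role stream out plays between GPU passes. Unlike a GPU pass, which would process vertices in parallel, this version relaxes springs one after another.

```python
def verlet_step(curr, prev, dt, gravity=-9.81, pinned=()):
    """Integration pass: x_new = 2x - x_prev + a*dt^2 for each free vertex.
    Returns (new positions, old positions) for the next pass."""
    new = []
    for i, ((x, y), (px, py)) in enumerate(zip(curr, prev)):
        if i in pinned:
            new.append((x, y))
        else:
            new.append((2 * x - px, 2 * y - py + gravity * dt * dt))
    return new, curr


def satisfy_springs(pos, springs, rest, pinned=()):
    """Constraint pass: pull both ends of each spring toward its rest length."""
    pos = list(pos)
    for a, b in springs:
        free_a, free_b = a not in pinned, b not in pinned
        if not (free_a or free_b):
            continue
        (ax, ay), (bx, by) = pos[a], pos[b]
        dx, dy = bx - ax, by - ay
        dist = (dx * dx + dy * dy) ** 0.5
        if dist == 0.0:
            continue
        corr = (dist - rest) / dist
        # Split the correction; a pinned end passes it all to the other end.
        wa = 0.5 if free_a and free_b else (1.0 if free_a else 0.0)
        wb = 0.5 if free_a and free_b else (1.0 if free_b else 0.0)
        pos[a] = (ax + dx * corr * wa, ay + dy * corr * wa)
        pos[b] = (bx - dx * corr * wb, by - dy * corr * wb)
    return pos


def simulate(n=5, rest=1.0, steps=100, dt=0.01, iterations=4):
    """A hanging chain with vertex 0 pinned at the origin."""
    curr = [(0.0, -i * rest) for i in range(n)]
    prev = list(curr)
    springs = [(i, i + 1) for i in range(n - 1)]
    pinned = {0}
    for _ in range(steps):
        curr, prev = verlet_step(curr, prev, dt, pinned=pinned)
        for _ in range(iterations):  # several constraint passes per step
            curr = satisfy_springs(curr, springs, rest, pinned)
    return curr


print(simulate())
```

More constraint iterations make the cloth stiffer but add passes, which is exactly the kind of multi-pass vertex workload this subtest is meant to stress.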

The rendering speed in this test should also depend on several parameters at once, mainly geometry processing performance and geometry shader efficiency. Chips with strong geometry processing should stand out here, but this test has long produced incorrect results for many video cards, including GeForce and Radeon, and now Intel cards have joined them.

There is simply no point in analyzing such strange and low results for all cards except the Radeon RX 6600; they are clearly incorrect. Given its theoretical parameters, the A580 should always be ahead of its competitors, and the RTX 3050 cannot lag that far behind the RX 6600. The explanation is simple: nobody optimizes drivers for such an old test package anymore.

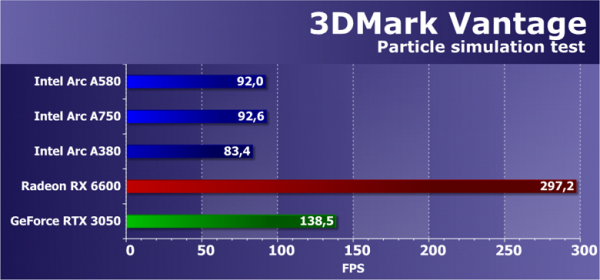

Feature Test 5: GPU Particles

This test physically simulates particle-system effects on the GPU. Each vertex represents a single particle, and stream out is used for the same purpose as in the previous test. Several hundred thousand particles are simulated, each animated separately, and their collisions with a height map are also computed. The particles are rendered with a geometry shader that expands each point into four vertices forming the particle. The shader units are loaded mostly by vertex calculations, and stream out is tested as well.
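The two stages described above can be sketched per particle in Python. This is a minimal sketch under stated assumptions: explicit Euler integration, a simple bounce on collision, a hypothetical flat height map, and a quad aligned to the x/y plane instead of a true camera-facing billboard. The corner order matches a triangle strip.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float  # height
    z: float
    vx: float
    vy: float
    vz: float


def terrain_height(x, z):
    """Hypothetical height map: a flat ground plane at y = 0."""
    return 0.0


def update(p, dt, gravity=-9.81, bounce=0.5):
    """Vertex-stage simulation: integrate, then collide with the height map."""
    vy = p.vy + gravity * dt
    x, y, z = p.x + p.vx * dt, p.y + vy * dt, p.z + p.vz * dt
    ground = terrain_height(x, z)
    if y < ground:
        # Clamp to the surface and reflect the vertical speed with damping.
        y = ground
        vy = -vy * bounce
    return Particle(x, y, z, p.vx, vy, p.vz)


def expand_to_quad(p, size):
    """Geometry-shader step: one point in, four corner vertices out
    (bottom-left, bottom-right, top-left, top-right, i.e. strip order)."""
    h = size / 2
    return [(p.x - h, p.y - h, p.z), (p.x + h, p.y - h, p.z),
            (p.x - h, p.y + h, p.z), (p.x + h, p.y + h, p.z)]


q = update(Particle(0.0, 0.01, 0.0, 1.0, 0.0, 0.0), dt=0.1)
print(q)
print(expand_to_quad(q, size=0.2))
```

Every particle runs the same short update independently, so the work maps naturally onto one vertex per particle, with the expansion to four vertices deferred to the geometry stage.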

The second geometry test from 3DMark Vantage also produces results far from theory, although for GeForce they at least came a little closer to what is expected compared to the previous subtest. Nothing has changed for Intel Arc, however: the cards are still behind, which should not be possible. These results are clearly incorrect as well, since the Intel GPUs should differ in speed from one another, and the larger ones should outperform the competing AMD and Nvidia cards in every respect. Another useless result.

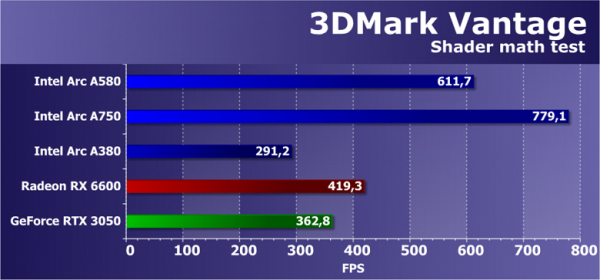

Feature Test 6: Perlin Noise

Vantage's last feature test is a math-intensive GPU test that computes several octaves of Perlin noise in a pixel shader. Each color channel uses its own noise function to put more load on the GPU. Perlin noise is a standard algorithm widely used in procedural texturing, and it involves a lot of math.
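A compact Python sketch of the workload: 2D gradient (Perlin-style) noise summed over several octaves, with a separate permutation table per color channel to mirror "each channel uses its own noise function". The parameters (2D input, 4 octaves, lacunarity 2, gain 0.5, eight gradient directions, seeded permutations) are assumptions; Vantage's exact shader settings are not given. Almost all of the work is arithmetic (fade polynomials, dot products, interpolation), which is why such a test tracks peak math throughput.

```python
import math
import random

GRADS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, 1), (-1, 1), (1, -1), (-1, -1)]


def make_permutation(seed=0):
    p = list(range(256))
    random.Random(seed).shuffle(p)
    return p + p  # doubled so lookups never need wrapping


def fade(t):
    # Quintic curve 6t^5 - 15t^4 + 10t^3 from Perlin's improved noise.
    return t * t * t * (t * (t * 6 - 15) + 10)


def lerp(a, b, t):
    return a + t * (b - a)


def grad(h, x, y):
    gx, gy = GRADS[h & 7]
    return gx * x + gy * y


def perlin2(x, y, perm):
    xi, yi = math.floor(x), math.floor(y)
    xf, yf = x - xi, y - yi
    xi, yi = xi & 255, yi & 255
    # Hash the four lattice corners around (x, y).
    aa = perm[perm[xi] + yi]
    ab = perm[perm[xi] + yi + 1]
    ba = perm[perm[xi + 1] + yi]
    bb = perm[perm[xi + 1] + yi + 1]
    u, v = fade(xf), fade(yf)
    x1 = lerp(grad(aa, xf, yf), grad(ba, xf - 1, yf), u)
    x2 = lerp(grad(ab, xf, yf - 1), grad(bb, xf - 1, yf - 1), u)
    return lerp(x1, x2, v)


def fbm(x, y, perm, octaves=4, lacunarity=2.0, gain=0.5):
    """Sum several octaves: each doubles frequency and halves amplitude."""
    total, amp, freq = 0.0, 1.0, 1.0
    for _ in range(octaves):
        total += amp * perlin2(x * freq, y * freq, perm)
        amp *= gain
        freq *= lacunarity
    return total


def shade_pixel(x, y, perms):
    """One independent noise function per color channel (R, G, B)."""
    return tuple(fbm(x, y, p) for p in perms)


perms = [make_permutation(seed) for seed in range(3)]
print(shade_pixel(0.25, 0.75, perms))
```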

In this math test, performance, although not entirely consistent with theory, is usually close to the peak performance of GPUs in extreme tasks, unlike the POM test discussed above. The test uses floating-point operations, where modern graphics architectures can reveal some of their newer capabilities, but it is quite outdated and does not exercise everything modern GPUs can do. Nevertheless, it is perfectly usable for estimating peak math performance.

The AMD and Nvidia GPUs that compete on price are this time not far apart in efficiency; they sit close together on the chart, although the GeForce is slightly slower. Intel's smaller GPU lags far behind the larger one, and they are balanced somewhat differently, but the company's cards score so well in simple math that even the A380 is not far behind the competitors. The A580's advantage over them fully agrees with theory and stems from its large number of execution units and fairly high clock speed. In real games, not everything depends on shaders, which are also more complex there, so the picture is unlikely to be as favorable. As for the A750, the card in question trails it by quite an expected margin.

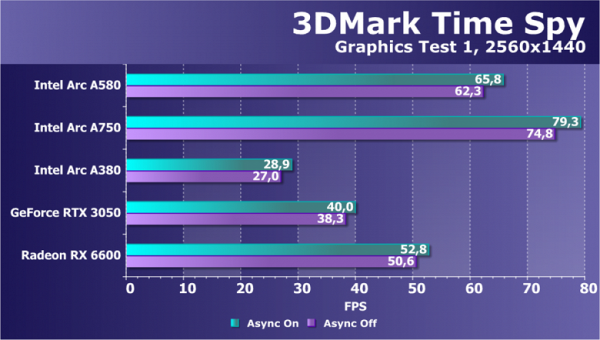

Direct3D 12 tests

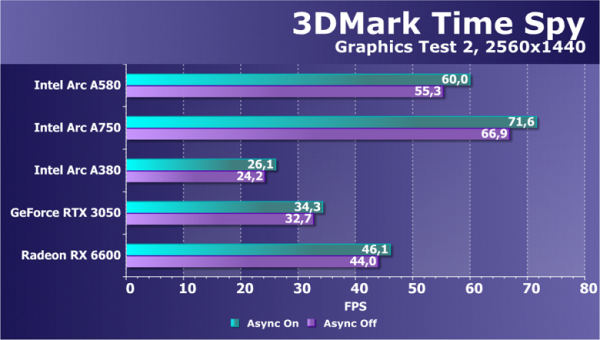

We have removed the Direct3D 12 samples from Microsoft's DirectX SDK and AMD's SDK from our test suite, since they have long produced incorrect results in most cases. The main Direct3D 12 test in this section is therefore the well-known Time Spy benchmark from 3DMark. Here we are interested not only in the overall comparison of GPU power, but also in the performance difference with DirectX 12's asynchronous compute enabled and disabled. For reliability, we tested the cards in both graphics tests.
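Why async compute can help is easiest to see in a toy frame-time model, sketched here in Python. The model is an idealization we assume for illustration: with async compute off, graphics and compute work run back to back; with it on, some fraction of the shorter workload hides behind the longer one. The millisecond figures are hypothetical and do not come from Time Spy.

```python
def frame_time_serial(graphics_ms: float, compute_ms: float) -> float:
    """Async compute disabled: compute work runs after graphics."""
    return graphics_ms + compute_ms


def frame_time_overlapped(graphics_ms: float, compute_ms: float,
                          overlap: float) -> float:
    """Async compute enabled: `overlap` (0..1) of the shorter workload
    is hidden behind the longer one; overlap=1 is the ideal case."""
    hidden = overlap * min(graphics_ms, compute_ms)
    return graphics_ms + compute_ms - hidden


def async_gain_percent(fps_on: float, fps_off: float) -> float:
    """Gain from enabling async compute, in percent."""
    return (fps_on / fps_off - 1.0) * 100.0


# Hypothetical frame: 12 ms of graphics and 4 ms of compute work.
off = frame_time_serial(12.0, 4.0)                    # 16.0 ms
on = frame_time_overlapped(12.0, 4.0, overlap=0.5)    # 14.0 ms
print(async_gain_percent(1000 / on, 1000 / off))      # about 14.3 %
```

In this model the gain is capped by the size of the smaller workload and by how well the GPU fills idle units, which is one reason the on/off difference varies between architectures.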

This test often produces a ratio of results similar to what is later observed in games. Keep in mind, however, that all companies, including Intel, optimize their drivers well for Time Spy, and this shows in the results. Here the A580 is not far behind the A750, in line with theory. The new product is also twice as fast as the junior A380 and clearly outperforms not only the RTX 3050 but also the RX 6600, both of which cost about the same. So in this test Intel's GPU uses its execution units with normal efficiency; it will be interesting to see how this holds up in more complex tests.

Ray tracing tests

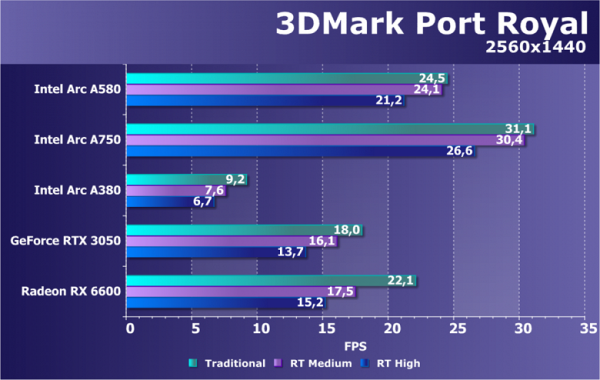

Port Royal, from the creators of the famous 3DMark series, was one of the first ray tracing performance benchmarks. It runs on all GPUs that support the DirectX Raytracing (DXR) API. We tested several video cards at 2560x1440 with various settings, including ray tracing modes as well as traditional rasterization.

The benchmark demonstrates several new uses of ray tracing through the DXR API, including algorithms for rendering reflections and shadows. Despite imperfect optimization, it loads powerful GPUs well, which makes it possible to compare video cards in ray tracing tasks. In this test, the A580, built on Intel's larger GPU, comes fairly close to the model one step up, although the A750 remains noticeably faster, while the A380 lags far behind.

The results clearly show how differently the three companies approach hardware ray tracing acceleration. AMD GPUs based on the RDNA 2 architecture lag behind both GeForce and Intel cards, especially in complex ray tracing tasks. Hardware ray tracing performance on par with Nvidia's Ampere puts the A580 ahead of its direct competitors, the RTX 3050 and RX 6600. In ray tracing applications, the card becomes competitive even with the more expensive RTX 3060 and RX 6600 XT.

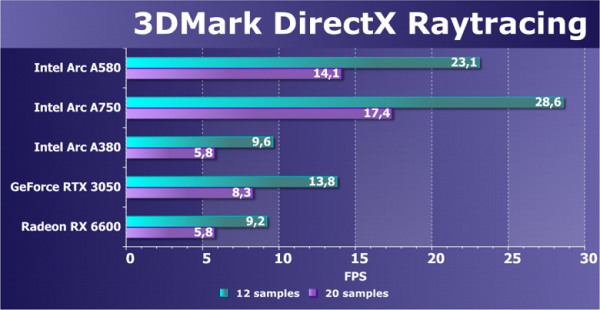

Later, 3DMark gained another subtest designed specifically to measure DirectX Raytracing performance. Unlike Port Royal, it is dedicated entirely to ray tracing, without rasterization, so it reflects GPU speed in hardware-accelerated tracing more accurately. The benchmark scene is small, so it can benefit from a large cache, which especially favors Radeon cards, while Arc cards have a dedicated BVH cache.
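The operation that RT hardware repeats most often while walking a BVH is the ray/box intersection, usually done with the "slab" test sketched below in Python. This is a generic textbook formulation, not any vendor's hardware implementation. Every node visited requires fetching its bounding box from memory before this test can run, which is where a dedicated BVH cache or a large general cache pays off on a small scene.

```python
def inverse(direction):
    """Per-axis 1/d, with infinity for axes the ray does not move along."""
    return tuple(1.0 / c if c != 0.0 else float("inf") for c in direction)


def ray_aabb(origin, inv_dir, box_min, box_max, t_max=float("inf")):
    """Slab test: returns the entry distance if the ray hits the box,
    else None. Each axis clips the ray's valid [t0, t1] interval."""
    t0, t1 = 0.0, t_max
    for o, inv, lo, hi in zip(origin, inv_dir, box_min, box_max):
        near = (lo - o) * inv
        far = (hi - o) * inv
        if near > far:
            near, far = far, near
        t0 = max(t0, near)
        t1 = min(t1, far)
        if t0 > t1:  # the intervals no longer overlap: miss
            return None
    return t0


box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
inv = inverse((0.0, 0.0, 1.0))
print(ray_aabb((0.0, 0.0, -5.0), inv, *box))  # 4.0
print(ray_aabb((5.0, 0.0, -5.0), inv, *box))  # None
```

Precomputing the inverse direction once per ray turns each slab into two multiplications, which is why hardware and software implementations alike favor this form.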

The Arc A580 reviewed today delivers an impressive result, keeping the same standing relative to the A750 as in the previous test. Intel's hardware ray tracing implementation performs excellently: the A580 is almost twice as fast as the RTX 3050 here and more than twice as fast as the RX 6600. The comparison is unfavorable for AMD, as even the RX 7600 falls behind the A580. This test concentrates the load on the RT units, which are efficient in Intel's microarchitecture, and with a sufficient number of them the Arc A580 gains a huge advantage over its competitors.

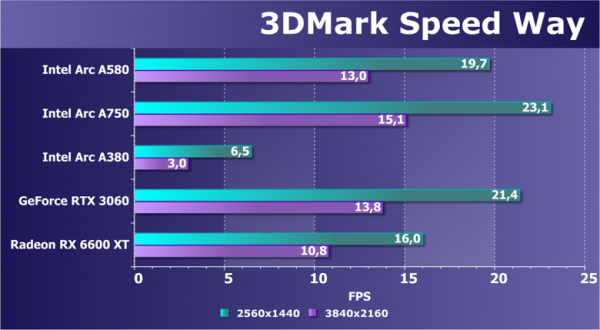

With the launch of new GPUs from Nvidia and AMD last year, 3DMark gained another ray tracing test: Speed Way. It puts a heavy load on ray tracing and is useful for evaluating performance under conditions similar to future games that will use ray tracing more extensively.

Here the situation is noticeably different: the RT units are not loaded as heavily as in more synthetic tests aimed squarely at ray tracing hardware. As a result, Radeon cards fall behind less, especially in the comparison between the more expensive RX 6600 XT and RTX 3060. The RT cores in Nvidia and Intel GPUs are more versatile and handle most of the tracing work themselves, which makes them less prone to performance loss than AMD's Ray Accelerators working together with the conventional SIMD units of its GPUs.
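The difference between the approaches comes down to who runs the traversal loop below. On RDNA 2, the Ray Accelerators perform the box and triangle intersection tests, while the loop itself (popping nodes, managing the stack, deciding what to visit next) runs as shader code on the SIMD units; Nvidia's RT cores and Intel's RT units handle the traversal in fixed-function hardware. This Python sketch is a simplified stack-based traversal assuming unordered child visits and hypothetical leaf payloads; it is not any vendor's algorithm.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    box_min: tuple
    box_max: tuple
    children: list = field(default_factory=list)  # inner node if non-empty
    prims: list = field(default_factory=list)     # hypothetical leaf payload


def hits_box(origin, inv, box_min, box_max):
    """Slab test (the part a Ray Accelerator would do in hardware)."""
    t0, t1 = 0.0, float("inf")
    for o, i, lo, hi in zip(origin, inv, box_min, box_max):
        a, b = (lo - o) * i, (hi - o) * i
        t0, t1 = max(t0, min(a, b)), min(t1, max(a, b))
    return t0 <= t1


def traverse(root, origin, direction):
    """Stack-based traversal loop (on RDNA 2 this runs as shader code).
    Returns candidate primitives and the number of box tests performed."""
    inv = tuple(1.0 / d if d != 0.0 else float("inf") for d in direction)
    stack, candidates, box_tests = [root], [], 0
    while stack:
        node = stack.pop()
        box_tests += 1
        if not hits_box(origin, inv, node.box_min, node.box_max):
            continue
        if node.children:
            stack.extend(node.children)  # descend into both children
        else:
            candidates.extend(node.prims)  # triangle tests would follow here
    return candidates, box_tests


left = Node((-2.0, -2.0, -2.0), (-1.0, 2.0, 2.0), prims=["L"])
right = Node((1.0, -2.0, -2.0), (2.0, 2.0, 2.0), prims=["R"])
root = Node((-2.0, -2.0, -2.0), (2.0, 2.0, 2.0), children=[left, right])
print(traverse(root, (1.5, 0.0, -5.0), (0.0, 0.0, 1.0)))  # (['R'], 3)
```

When this loop runs on general-purpose SIMD units, it competes with shading work for the same resources, which is consistent with Radeon cards losing more performance as the tracing load grows.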

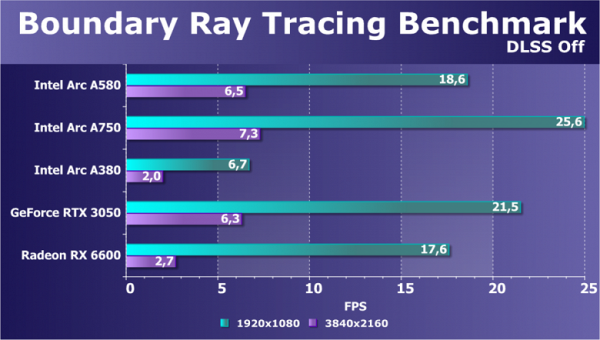

The Arc A580 approaches the RTX 3060 in this test, although it is less efficient here than in the previous tests. The gap to the older A750 again matches theory and remains small.

Let's consider another semi-synthetic benchmark built on a game engine. Boundary is a Chinese game that supports DXR and DLSS. The benchmark puts a heavy load on the GPU, using ray tracing for complex reflections, soft shadows and global illumination. Note that the test supports Nvidia's DLSS upscaling but not XeSS or FSR.

Even at Full HD, none of the cards tested has enough power to reach a minimally comfortable 30 FPS, let alone at 4K. The A580 trails the A750 at the lower resolution by even more than the theoretical difference would suggest, but at the higher resolution the two come noticeably closer, possibly due to shortcomings in Intel's drivers for this scenario. The RTX 3050 is close to the A580 at 4K and even outperforms it in Full HD. The Radeon RX 6600 is slightly behind the A580 in Full HD, but none of these cards reaches even 30 FPS. Without upscaling, 4K remains unplayable even on more powerful GPUs.

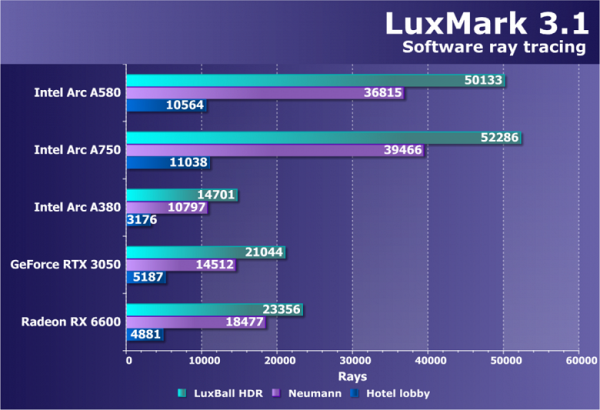

Computational tests

We are actively looking for OpenCL-based benchmarks suitable for modern compute tests, since it is important for us to include such tests in our synthetic suite. Currently, the ray tracing benchmarks in this section include LuxMark 3.1, a cross-platform test based on LuxRender that uses OpenCL.

In our tests, the Arc A580 trailed the older A750 slightly, by no more than 10%. Despite its cut-down configuration, the A580 retains a large number of compute units, a high clock speed and a sizable L2 cache. As a result, the new product performed impressively, ahead of its competitors, the GeForce RTX 3050 and RX 6600. Even the more powerful RTX 3060 and RX 6600 XT could not catch up with the A580.

Unfortunately, some compute benchmarks, such as V-Ray Benchmark, OctaneRender and Cinebench 2024, do not support Arc graphics cards. Still, in ray tracing and general compute performance the A580 proved competitive, surpassing the RTX 3050 and RX 6600. Given the similar prices of these cards, the Intel card's performance in such tasks is outstanding.

Tests of DLSS/XeSS/FSR technologies

In this section we include additional tests of various performance-enhancing technologies. Previously these were only resolution scaling technologies (DLSS 1.x and 2.x, FSR 1.0 and 2.0, XeSS), but recently intermediate frame generation was added to them, so far only in Nvidia's version, DLSS 3.

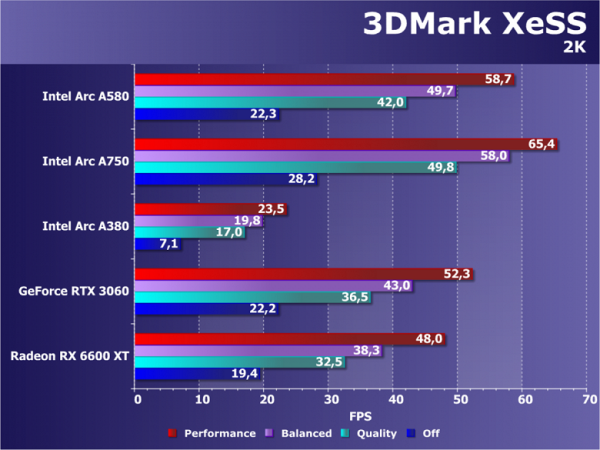

Let's look at a way to improve performance by rendering at a lower resolution and upscaling the image with XeSS, Intel's counterpart to DLSS 2.0. It also uses AI and, on Arc cards, hardware acceleration on the XMX matrix units to reconstruct information in the frame. Unlike DLSS 2, however, it works not only on the developer's own video cards but on all modern GPUs, albeit less efficiently than on Intel's solutions. For testing, we used a dedicated 3DMark benchmark at 2560x1440.
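The source of the speedup is the reduced internal resolution, which this Python sketch computes. The per-axis scale factors are assumed to be those of the XeSS 1.x quality presets (later XeSS versions changed some ratios), and the preset used by the 3DMark test is not stated here. The upscaling pass itself adds a cost that this arithmetic ignores.

```python
# Assumed per-axis scale factors of XeSS 1.x quality presets.
XESS_PRESETS = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def render_resolution(out_w, out_h, factor):
    """Internal rendering resolution for a per-axis upscaling factor."""
    return round(out_w / factor), round(out_h / factor)


def pixel_fraction(factor):
    """Share of output pixels actually rendered: 1 / factor^2."""
    return 1.0 / (factor * factor)


for name, f in XESS_PRESETS.items():
    w, h = render_resolution(2560, 1440, f)
    print(f"{name:13s} {w}x{h}  {pixel_fraction(f):.0%} of pixels")
```

At a factor of 2.0, only a quarter of the 2560x1440 pixels are rendered (1280x720), so a frame rate increase of more than 2x is plausible even after the upscaler's own cost.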

With XeSS enabled, frame rates more than double, although at some cost in image quality. Here we compare the Arc A580 with the RTX 3060 and RX 6600 XT, which are more expensive, to see how competitive the Intel card is even against pricier alternatives. The A580 performed impressively, outperforming not only its direct competitors but also the more expensive RTX 3060 and RX 6600 XT, especially with XeSS enabled. This shows that Intel cards handle XeSS efficiently thanks to their dedicated matrix acceleration units, while on Radeon and GeForce cards XeSS runs through a slower generic fallback path.

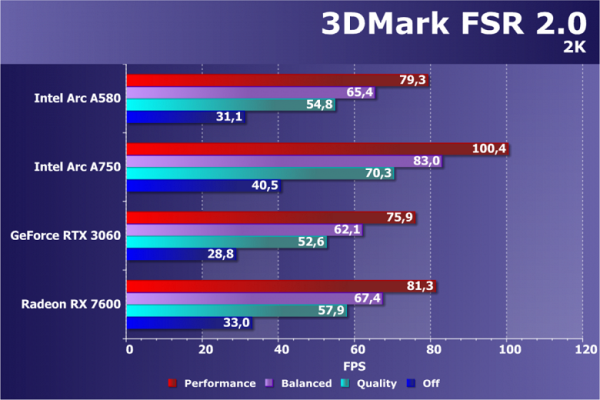

Another upscaling technology is AMD's FSR 2.0. For some reason, it was the last of them to get a dedicated 3DMark subtest. Note that the scenes used by the different upscaling tests differ, which makes direct comparisons difficult. We can only evaluate the performance gains while accounting for the actual rendering resolution and possible differences in image quality, which is no easy task.
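One rough way to put gains from different upscalers on a common footing is to compare the measured speedup with the reduction in rendered pixels, as in this Python sketch. The "scaling efficiency" metric is our hypothetical construction, not a 3DMark score; it ignores image quality and the upscaler's fixed cost, and the FPS values below are made up for illustration.

```python
def speedup(fps_upscaled: float, fps_native: float) -> float:
    """Measured frame-rate gain from enabling upscaling."""
    return fps_upscaled / fps_native


def pixel_reduction(factor: float) -> float:
    """How many times fewer pixels are rendered at a per-axis factor."""
    return factor * factor


def scaling_efficiency(fps_upscaled: float, fps_native: float,
                       factor: float) -> float:
    """Hypothetical metric: share of the ideal pixel-count speedup achieved.
    1.0 would mean FPS scaled perfectly with the number of rendered pixels."""
    return speedup(fps_upscaled, fps_native) / pixel_reduction(factor)


# Made-up example: 40 FPS native, 90 FPS with a 2.0x per-axis factor.
print(scaling_efficiency(90.0, 40.0, 2.0))  # 0.5625
```

A value well below 1.0 indicates that costs not tied to resolution (geometry, upscaler overhead, CPU limits) dominate, which is why equal speedups from different tests do not necessarily mean equal GPU efficiency.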

FSR is a vendor-agnostic technology that performs roughly the same on different GPUs. In the FSR 2.0 test, we again compare the Arc A580 not with its direct price competitors but with the more expensive RTX 3060 and RX 7600. Even against these more powerful cards, the new Intel product performed impressively, landing roughly between them and closer to the faster Radeon. This is an encouraging result for the A580: although it trails the A750 on the same GPU, its larger number of execution units gives it a noticeable advantage over its main price competitors, the RTX 3050 and RX 6600.

All that remains is to look at the Intel card's performance in modern games that use current graphics technologies, including hardware-accelerated ray tracing. Will the Arc A580 be as impressive there as in the synthetic tests?

Testing: gaming tests

Test bench configuration

- Computer based on an Intel Core i9-13900K processor (Socket LGA1700):
  - Platform:
    - Intel Core i9-13900K processor (overclocked to 5.4 GHz on all cores);
    - Cougar Helor 360 liquid cooling system;
    - Asus ROG Strix Z790-A Gaming WiFi D4 motherboard based on the Intel Z790 chipset;
    - TeamGroup Xtreem ARGB White RAM (TF13D416G5333HC22ADC01, CL22-32-32-52), 32 GB (2×16 GB) DDR4 5333 MHz;
    - Intel 760p NVMe 1 TB PCIe SSD;
    - Intel 860p NVMe 2 TB PCIe SSD;
    - Thermaltake Toughpower GF3 1000W power supply;
    - Thermaltake Level20 XT case;
  - Windows 11 Pro 64-bit operating system;
  - LG 55Nano956 TV (55″ 8K HDR, HDMI 2.1);
  - AMD drivers version 24.1.1;
  - Nvidia drivers version 551.22;
  - Intel drivers version 101.5125;
  - VSync disabled.

3D gaming performance in a nutshell

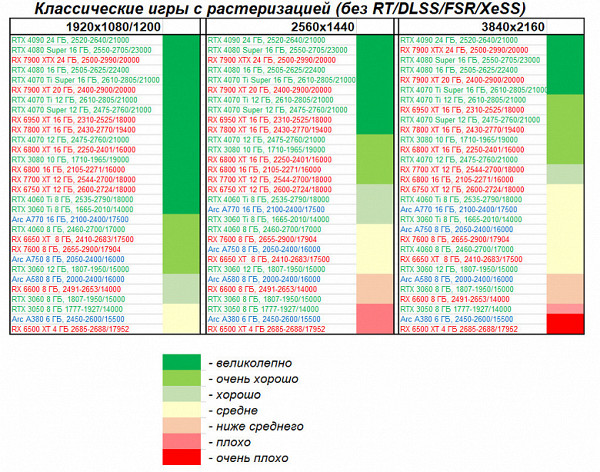

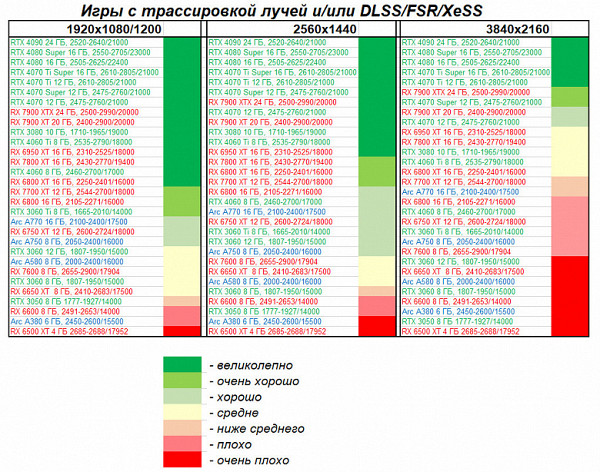

Before presenting detailed test results, we give a brief performance assessment of the graphics card in question and its competitors. Our subjective assessment uses a seven-level scale.

Games without ray tracing (classic rasterization):

The card in question is an entry-level budget solution aimed at 1080p (Full HD), as the illustration clearly shows. In classic games without ray tracing or upscaling, the Arc A580 provides comfortable gameplay at maximum settings in 1080p. It outperforms the Radeon RX 6600 and even the GeForce RTX 3060 8 GB, and is only slightly behind the 12 GB version. Note that Intel's drivers are optimized for modern games (DX12/DX11), and Arc cards may lose more performance in older games than similarly priced competitors. Our testing focuses on the most current games.

Games using ray tracing and DLSS/FSR/XeSS:

For cards of this level (Arc A580, Radeon RX 6600, GeForce RTX 3060), we recommend enabling ray tracing only together with an upscaling technology; otherwise performance may be insufficient even at Full HD. Remember that Intel Arc cards support both XeSS and FSR. With these technologies, the Arc A580 delivers acceptable Full HD performance at high graphics settings.

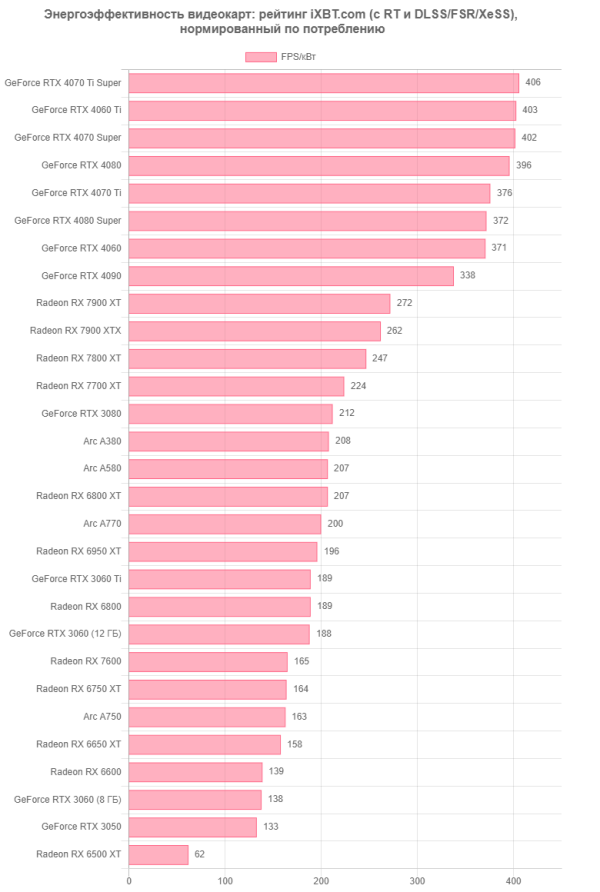

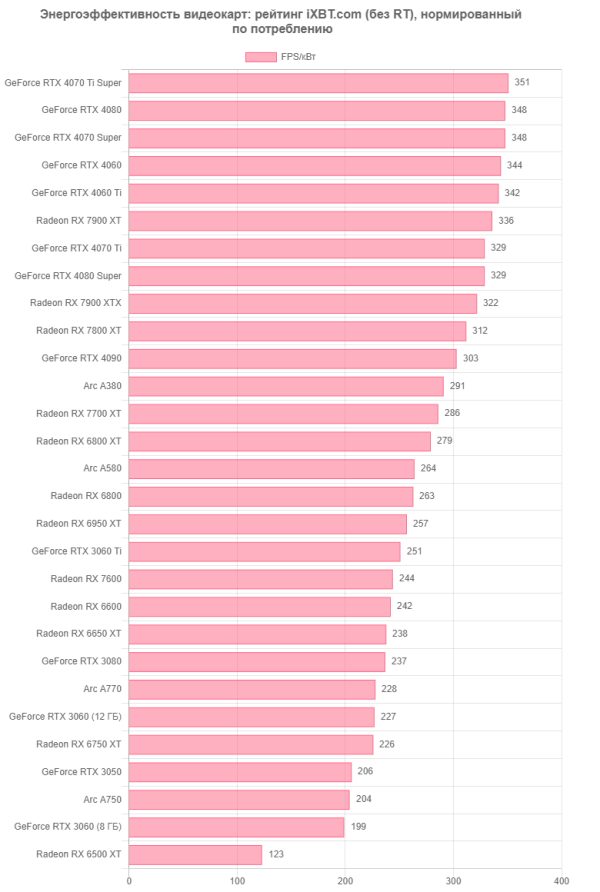

Conclusions and comparison of energy efficiency

The Intel Arc A580 (8 GB) is the third-fastest card in Intel's desktop gaming lineup. It might more logically have been named Arc A730, since it uses the same GPU, memory and bus as the older models, with a reduced number of execution units.

The name matters less than the product itself. It is an interesting and relatively affordable solution for novice gamers, although its market availability is limited. Even major manufacturers such as Asus, MSI and Gigabyte face restrictions from Nvidia, the leader of the graphics card market. Using its dominant position, Nvidia dictates terms to its partners and does not allow them to produce cards based on Intel GPUs. Those who do decide to release Intel Arc cards would lose their contracts with Nvidia to produce cards based on its GPUs. These are the realities of the modern IT market.

The result is a paradoxical situation: major PC component manufacturers such as Asus, MSI and Gigabyte cannot extend their cooperation with Intel to graphics cards because of Nvidia's dominance, which limits competition. Intel Arc-based cards are therefore produced by only a few companies, such as ASRock, Acer and the Chinese company Lanji. This, together with supply problems for Sparkle and Gigabyte cards, limits the availability of Intel Arc products on the market.

Additionally, Intel Arc cards require a modern PC with Resizable BAR support, which limits their use for upgrading older gaming PCs. Also, despite driver improvements, remaining optimization issues in older DirectX 9/10 games may deter some buyers.

Thus, despite their attractive characteristics, the low prevalence of Intel Arc cards, the limited choice of manufacturers and certain technical requirements may weigh on purchase decisions.

Compared to its competitors, the Arc A580 performs outstandingly both in rasterized games and in games with ray tracing and upscaling, confidently outperforming its direct rivals.

From a technical perspective, the Arc Alchemist family fully supports DirectX 12 Ultimate, including hardware ray tracing, variable rate shading, mesh shaders and other advanced features. Its number of Xe cores and large cache give the Arc A580 outstanding compute performance compared to entry-level cards from AMD and Nvidia.

Beyond these technologies, the card supports HDMI 2.1, which can carry 4K at 120 FPS or 8K over a single cable, as well as DisplayPort 2.0 and hardware decoding of AV1 video.
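Why 4K at 120 Hz needs HDMI 2.1 follows from simple arithmetic, sketched in Python below. The calculation counts active pixels only and ignores blanking intervals and link encoding overhead, so real link requirements are somewhat higher; the link rates used are the maximum raw rates of HDMI 2.0 (18 Gbit/s) and HDMI 2.1 (48 Gbit/s).

```python
def video_data_rate_gbps(width, height, refresh_hz, bits_per_pixel):
    """Uncompressed active-pixel data rate in Gbit/s (no blanking,
    no link encoding overhead)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


HDMI_2_0_GBPS = 18.0  # maximum raw link rate
HDMI_2_1_GBPS = 48.0  # maximum raw link rate

modes = {
    "4K 120 Hz, 10-bit RGB": (3840, 2160, 120, 30),
    "8K 60 Hz, 8-bit RGB": (7680, 4320, 60, 24),
}
for label, args in modes.items():
    rate = video_data_rate_gbps(*args)
    print(f"{label}: {rate:.1f} Gbit/s "
          f"(HDMI 2.0: {HDMI_2_0_GBPS}, HDMI 2.1: {HDMI_2_1_GBPS})")
```

4K120 at 10 bits needs about 29.9 Gbit/s, well beyond HDMI 2.0, while 8K60 already approaches the HDMI 2.1 raw limit before overhead, which is why 8K modes commonly rely on DSC compression or chroma subsampling.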

The Gunnir Intel Arc A580 Index card (8 GB) is a standard dual-slot card with an effective, if somewhat noisy, cooler. Its power consumption can reach 135 W, and it has two 8-pin PCIe power connectors.

The Arc A580 showed good gaming performance without ray tracing at Full HD and maximum graphics settings, although in some cases the settings may need to be lowered slightly for comfortable frame rates. With ray tracing enabled, upscaling technologies such as FSR and XeSS are needed to maintain acceptable performance.

In games that support ray tracing but not FSR/XeSS, we recommend leaving ray tracing disabled. Less demanding games can run acceptably at 2.5K (2560x1440), but the card is primarily aimed at Full HD.