General information about Intel Arc A310

We have already reviewed most of the Intel video cards from the Arc Alchemist series: the company has returned to the discrete gaming video card market after a long break and has achieved notable success. The series became known thanks to the powerful mid-range Arc A750 and Arc A770 models, as well as the Arc 5 and Arc 3 subfamilies. We have reviewed the flagship and mid-range models, and today it is time to evaluate Intel's most affordable graphics card.

These video cards have attracted many users with their excellent performance at a reasonable price, not inferior to competitors in terms of functionality. At the beginning of sales, problems arose with software, driver instability and other shortcomings. However, the situation has improved over time with regular driver updates that fix issues and improve performance, especially in new games.

As noted earlier, the Intel Arc Alchemist line of video cards consists of the Arc A770, A750, A580 and A380 models, aimed at mid-high, mid-range and entry-level performance, respectively. However, the most affordable and junior model is the Arc A310, introduced to the market along with the A750 in October last year. Initially, it was only available to OEMs, but then Intel decided to release it at retail.

At launch, both the A380 and A310 faced a number of issues, including software issues and driver instability. However, thanks to constant updates, Intel has been able to improve the quality of drivers, which has led to improved performance of video cards and lower prices. The Arc A310 now offers a more attractive solution.

Although the Arc A310 performs lower than the more expensive models in the Arc A7 family, it is not inferior in functionality. The main difference is the use of a less powerful ACM-G11 GPU with fewer Xe cores. However, even the Arc A310 supports ray tracing and image scaling technologies, which makes it competitive in the market.

While the Arc A310 isn't a gaming powerhouse and doesn't compete with top-end models from AMD and Nvidia, it is a good option for Full HD gaming at medium to low graphics settings. Image scaling technologies may help improve performance in supported games. It is also worth noting that the Arc A310 works well with video data in the AV1 format and supports output to displays via DisplayPort 2.0.

| Arc A310 graphics accelerator | |
|---|---|
| Chip code name | ACM-G11 |
| Production technology | 7 nm (TSMC N6) |
| Number of transistors | 7.2 billion |
| Core area | 157 mm² |
| Architecture | unified, with an array of processors for stream processing of any type of data: vertices, pixels, etc. |
| DirectX hardware support | DirectX 12 Ultimate, supporting Feature Level 12_2 |
| Memory bus | 64-bit: 2 independent 32-bit memory controllers supporting GDDR6 memory |
| GPU frequency | up to 2000 MHz |
| Computing blocks | 6 (of 8) Xe-Core multiprocessors, including 768 (of 1024) cores for INT32 integer, FP16/FP32 floating point, and special functions |
| Tensor blocks | 96 (out of 128) XMX matrix cores for INT2/INT4/INT8/FP16/BF16 matrix calculations |
| Ray tracing blocks | 6 (out of 8) RTU cores for calculating the intersection of rays with triangles and BVH bounding volumes |
| Texturing blocks | 32 (out of 64) texture addressing and filtering units with support for FP16/FP32 components and support for trilinear and anisotropic filtering for all texture formats |
| Raster Operation Blocks (ROPs) | 2 (out of 4) wide ROP blocks of 16 (out of 32) pixels with support for various anti-aliasing modes, including programmable ones and for FP16/FP32 frame buffer formats |
| Monitor support | HDMI 2.0b and DisplayPort 2.0 10G support |

| Arc A310 Graphics Card Specifications | |
|---|---|
| Core frequency | 2000 MHz |
| Number of universal processors | 768 |
| Number of texture blocks | 32 |
| Number of blending blocks | 16 |
| Effective memory frequency | 15.5 GHz |
| Memory type | GDDR6 |
| Memory bus | 64 bit |
| Memory | 4 GB |
| Memory bandwidth | 124 GB/s |
| Compute performance (FP32) | up to 2.7 teraflops |
| Theoretical maximum fill rate | 28 gigapixels/s |
| Theoretical texture sampling rate | 56 gigatexels/s |
| Bus | PCI Express 4.0 x8 |
| Connectors | at the manufacturer's discretion |
| Energy consumption | 40-75 W |
| Additional power | at the manufacturer's discretion |
| Number of slots occupied in the system case | 1-2 |
| Recommended price | about $100 |

The lowest-performance video card model in the Arc 3 series is the Arc A310, which follows the general naming principle for Intel solutions. It is distinguished by the A310 index, positioning itself as a budget option compared to the more powerful A380. The lineup also includes the A750 and A770, aimed at the top segment, and the mid-range A580.

Arc A310 is available only with 4 GB of video memory, which is logical for the most affordable model. The A380 has 6 GB because of its 96-bit memory bus; the A310's 64-bit bus allows either 4 GB or 8 GB, and the latter would have been overkill for this price segment.

Given the limited performance, 4 GB of video memory is sufficient for typical workloads found on entry-level graphics cards, although in rare cases this volume can become a performance limiter, especially in more demanding games.

In terms of price, there is no official recommendation due to the fact that the A310 was initially only available to OEMs. However, it could supposedly sell for $100-$110, making it Intel's most affordable graphics card of the current generation.

Even though the A310 is slower than the competition, it is still of interest to budget gamers. This model's closest competitors are the GeForce GTX 1650/1630 and the Radeon RX 6500 XT and RX 6400, but the A310 may be more affordable, albeit slower.

The presence of HDMI and DisplayPort video outputs, as well as low power consumption of up to 75 W, makes the Arc A310 a convenient and affordable solution for novice gamers on a limited budget.

Microarchitecture and features

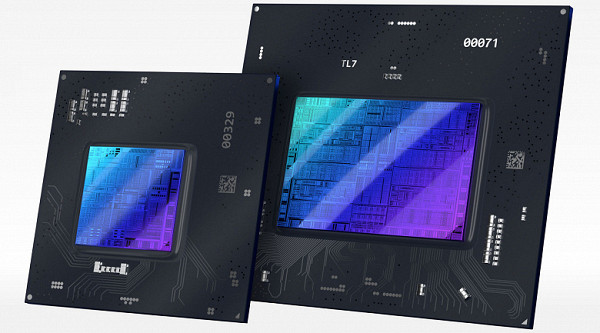

In our review of the older Arc A770 model, we examined the Xe-HPG architecture and looked at the main characteristics of the ACM-G10 GPU. The Arc line of video cards is divided into subfamilies: Arc 3, Arc 5 and Arc 7, which differ in the number of execution units and the corresponding level of performance and price. The first generation of discrete Arc video cards uses GPUs from the Alchemist family, and in the future it is planned to release models based on the Battlemage, Celestial and Druid microarchitectures, united by a common Xe-HPG architecture.

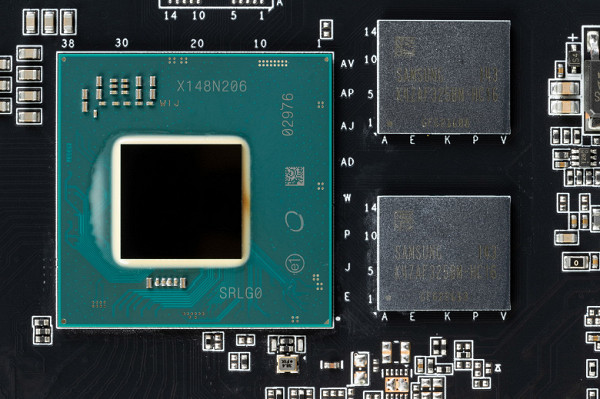

The Arc 3 subfamily uses a low-end version of the chip, while Arc 5 and Arc 7 are based on different GPU models with different numbers of active compute units. The first generation of discrete Intel Arc graphics cards consists of two Xe-HPG architecture graphics processors: DG2-128 and DG2-512 (or ACM-G11 and ACM-G10), and in this case the younger of them is considered.

ACM-G11 GPUs are manufactured using TSMC's 7nm N6 process technology, delivering superior performance and lower manufacturing costs compared to Intel's in-house processes. These chips have a die area of 157 mm² and contain about 7.2 billion transistors, which is about three times less than the older ACM-G10 chip.

Structurally, Xe-HPG architecture chips are similar to Nvidia solutions, where the cores are assembled into a top-level Render Slice block. Each Render Slice contains multiple cores, ray tracing, geometry processing, and other components. The ACM-G11 GPU on which the Arc A310 is based uses a minimal configuration of a pair of Render Slice units, with only six of the eight Xe cores active.

This configuration allows you to create video cards of different performance levels. The OEM version of the Arc A310 was configured with cores disabled, resulting in low cost and performance.

Unlike the Arc A380, which is based on the full version of the GPU, the Arc A310 uses a cut-down chip with only 768 compute units, 32 texturing units, 16 ROP units, 96 tensor cores and 6 hardware ray tracing cores. The ray tracing support is largely nominal in games: even at low quality settings, turning on ray tracing greatly reduces the frame rate.

The ACM-G11 GPU also offers 96 matrix compute units, which roughly matches the tensor cores of Nvidia GPUs. The GPU frequency is 2 GHz and can be dynamically adjusted by the manufacturer.
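
The peak figures quoted in the specification table (up to 2.7 teraflops, 28 gigapixels/s, 56 gigatexels/s) can be reproduced from the unit counts above. The sketch below is only an illustration: the function name is hypothetical, and the 1750 MHz clock is an assumption. It is the boost clock listed for the Gigabyte card, and it happens to match the quoted figures, whereas the specification table itself states "up to 2000 MHz".

```python
def peak_rates(fp32_lanes: int, tmus: int, rops: int, clock_mhz: float) -> dict:
    """Theoretical peak rates from unit counts and a clock (hypothetical helper)."""
    clock_ghz = clock_mhz / 1000.0
    return {
        # One fused multiply-add per lane per clock is counted as 2 FLOPs.
        "fp32_tflops": fp32_lanes * 2 * clock_ghz / 1000.0,
        # One pixel per ROP per clock.
        "fill_gpix_s": rops * clock_ghz,
        # One texel per texture unit per clock.
        "texel_gtex_s": tmus * clock_ghz,
    }


# Assumption: 1750 MHz (the boost clock from the Gigabyte card table).
a310 = peak_rates(fp32_lanes=768, tmus=32, rops=16, clock_mhz=1750)
print(a310)
```

At the 2000 MHz quoted elsewhere in the review, the same arithmetic would give proportionally higher values, so the table figures appear to be based on the lower clock.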

The Arc A310 features 4 GB of GDDR6 memory connected to the GPU via a 64-bit interface and running at an effective 15.5 Gbps, delivering 124 GB/s of bandwidth. The competing Radeon RX 6500 XT has a slightly higher figure of 144 GB/s. In modern games, even in Full HD at medium quality settings, 4 GB of video memory may sometimes not be enough.
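
The bandwidth figure follows directly from the bus width and the per-pin data rate. A minimal sketch of that arithmetic (the function name is hypothetical; the A380 data rate is not stated in the text and is assumed here to be the same 15.5 Gbps, which matches the 186 GB/s listed for it in the comparison table):

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth = bus width (bits) x per-pin data rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


a310_bw = memory_bandwidth_gb_s(64, 15.5)  # Arc A310: 64-bit bus
a380_bw = memory_bandwidth_gb_s(96, 15.5)  # Arc A380: 96-bit bus, data rate assumed
print(a310_bw, a380_bw)
```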

The Xe-HPG architecture includes hardware-accelerated ray tracing that is similar to Nvidia technology, including advanced blocks for finding ray intersections and geometry. Also, trace blocks in Intel GPUs can reorder shader commands to optimize the operation of SIMD blocks, which increases the efficiency of tracing.

Intel GPUs also include matrix (tensor) computing units, which allow for hardware-accelerated noise reduction and performance-enhancing technologies such as XeSS. These blocks can provide 4 to 8 times faster matrix computation speeds than similar function blocks in competitive GPUs.

In terms of video processing and display, the Arc A310 supports advanced DisplayPort functions and hardware video encoding in AV1 format. It is also capable of displaying information on multiple high-resolution monitors at high refresh rates.

| | Arc A310 | Arc A380 | Arc A580 | Arc A750 | Arc A770 |
|---|---|---|---|---|---|
| GPU model | ACM-G11 | ACM-G11 | ACM-G10 | ACM-G10 | ACM-G10 |
| Number of Xe cores | 6 | 8 | 24 | 28 | 32 |
| Number of FP32 blocks | 768 | 1024 | 3072 | 3584 | 4096 |
| Number of RT blocks | 6 | 8 | 24 | 28 | 32 |
| Number of XMX blocks | 96 | 128 | 384 | 448 | 512 |
| Number of TMUs | 32 | 64 | 192 | 224 | 256 |
| Number of ROP blocks | 16 | 32 | 96 | 112 | 128 |
| Base GPU frequency, MHz | 2000 | 2000 | 1700 | 2050 | 2100 |
| GPU turbo frequency, MHz | 2000 | 2050 | 2400 | 2400 | 2400 |
| Memory capacity, GB | 4 | 6 | 8 | 8 | 8/16 |
| Memory bus width, bits | 64 | 96 | 256 | 256 | 256 |
| Memory bandwidth, GB/s | 124 | 186 | 512 | 512 | 560 |
| Connector | PCIe 4.0 x8 | PCIe 4.0 x8 | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 |
| Energy consumption, W | 40-75 | 75 | 185 | 225 | 225 |
| Price, $ | ≈100 | 149 | 179 | 289 | 329/349 |

With the Arc A310, it is clear that it is the weakest of the entire line of Intel graphics cards, falling behind even the A380 in terms of peak performance parameters. Even though they are both based on the same ACM-G11 chip, the difference in configuration is noticeable — the A310 has significantly fewer compute units and other characteristics. Despite the reduced specifications listed on the Intel website, actual performance may vary.
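
Several rows of the comparison table scale linearly with the number of Xe cores. The sketch below assumes per-core ratios inferred from the table itself (128 FP32 blocks, 16 XMX blocks and 1 RT block per Xe core); the names are hypothetical. TMU and ROP counts are left out because, for the A310, they do not follow the same simple per-core ratio as for the other models.

```python
# Per-Xe-core ratios inferred from the comparison table (an assumption, not an Intel spec).
FP32_PER_XE_CORE = 128
XMX_PER_XE_CORE = 16
RT_PER_XE_CORE = 1


def units_from_xe_cores(xe_cores: int) -> dict:
    """Derive FP32, XMX and RT block counts from the number of active Xe cores."""
    return {
        "fp32": xe_cores * FP32_PER_XE_CORE,
        "xmx": xe_cores * XMX_PER_XE_CORE,
        "rt": xe_cores * RT_PER_XE_CORE,
    }


lineup = {"A310": 6, "A380": 8, "A580": 24, "A750": 28, "A770": 32}
derived = {name: units_from_xe_cores(cores) for name, cores in lineup.items()}
for name, units in derived.items():
    print(name, units)
```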

The performance of the A310 is significantly lower than that of older models, which makes it less attractive for gaming purposes. However, for simple tasks such as displaying information, it is quite suitable, especially given the support for hardware video encoding in the AV1 format — this makes it one of the cheapest video cards with this function. Overall, the A310 could be an interesting choice for users who want an inexpensive and compact graphics card that can handle basic tasks.

However, to be more competitive, Intel needs to improve the hardware of its video cards, increase their efficiency and performance. Only then will they be able to truly compete with AMD and Nvidia solutions in the discrete graphics card market.

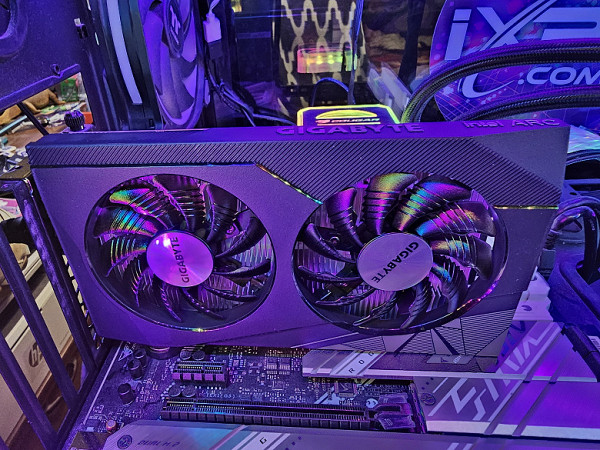

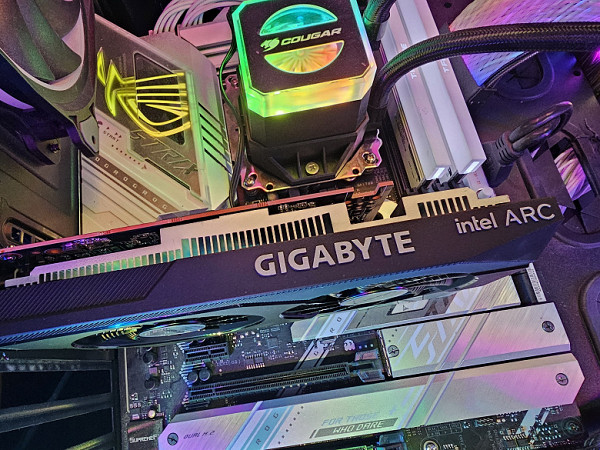

Video card Gigabyte Intel Arc A310 WindForce 4G 4 GB

Gigabyte Technology Corporation, founded in 1986 in the Republic of China (Taiwan), began its journey as a group of developers and researchers. Its headquarters are located in Taipei, Taiwan. In 2004, the company became the basis for the creation of the Gigabyte holding, which included several divisions, including Gigabyte Technology, specializing in the development and production of video cards and motherboards for PCs, as well as Gigabyte Communications, which has been producing communicators and smartphones under the GSmart brand since 2006.

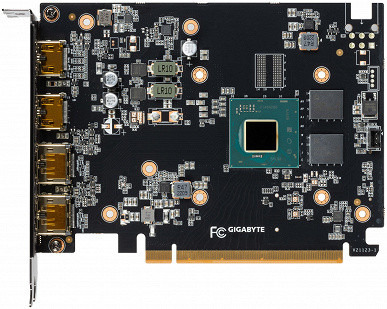

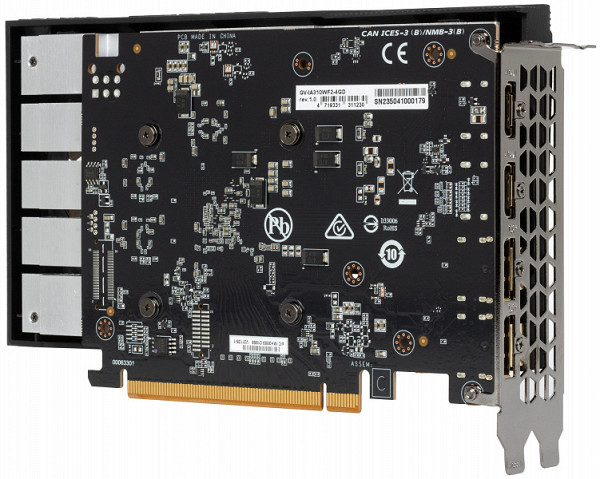

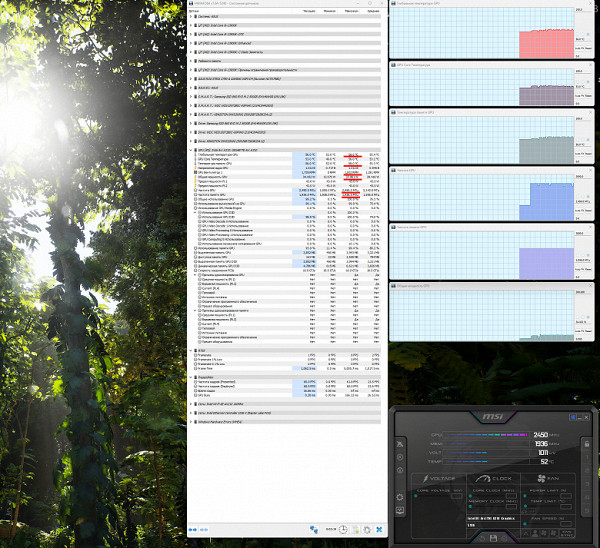

The object of the study is the commercially released Gigabyte Intel Arc A310 WindForce 4G 3D graphics accelerator with 4 GB of GDDR6 video memory and a 64-bit interface.

| Gigabyte Intel Arc A310 WindForce 4G 4GB 64-bit GDDR6 | | |
|---|---|---|
| Parameter | Value | Nominal value (reference) |
| GPU | Arc A310 (ACM-G11) | |
| Interface | PCI Express x8 4.0 | |
| GPU operating frequency (ROPs), MHz | 1750 (Boost) to 2450 (Max) | 1750 (Boost) to 2450 (Max) |
| Memory operating frequency (physical (effective)), MHz | 1937 (15500) | 1937 (15500) |
| Memory bus width, bits | 64 | |
| Number of computational units in the GPU | 6 | |
| Number of operations (ALU/CUDA) in block | 128 | |
| Total number of ALU/CUDA blocks | 768 | |
| Number of texturing units (BLF/TLF/ANIS) | 32 | |
| Number of rasterization units (ROP) | 16 | |
| Number of Ray Tracing blocks | 6 | |
| Number of tensor blocks | 96 | |
| Dimensions, mm | 185×100×38 | 190×70×32 |
| Number of slots in the system unit occupied by a video card | 2 | 2 |
| PCB color | black | black |
| Peak power consumption in 3D, W | 38 | 30 |
| Power consumption in 2D mode, W | 10 | 10 |
| Energy consumption in sleep mode, W | 6 | 6 |
| Noise level in 3D (maximum load), dBA | 27.5 | 24.0 |
| Noise level in 2D (video viewing), dBA | 18.0 | 18.0 |
| Noise level in 2D (idle), dBA | 18.0 | 18.0 |
| Video outputs | 2×HDMI 2.1, 2×DisplayPort 2.0 | 4×DisplayPort 2.0 |
| Multiprocessing support | No | |
| Maximum number of receivers/monitors for simultaneous image output | 4 | 4 |
| Power: 8-pin connectors | 0 | 0 |
| Power: 6-pin connectors | 0 | 0 |
| Power: 16-pin connectors | 0 | 0 |
| Weight of card with delivery set (gross), kg | 0.68 | 0.8 |
| Card weight (net), kg | 0.47 | 0.5 |
| Maximum resolution/frequency, DisplayPort | 3840×2160@144 Hz, 7680×4320@60 Hz | |
| Maximum resolution/frequency, HDMI | 3840×2160@144 Hz, 7680×4320@60 Hz | |

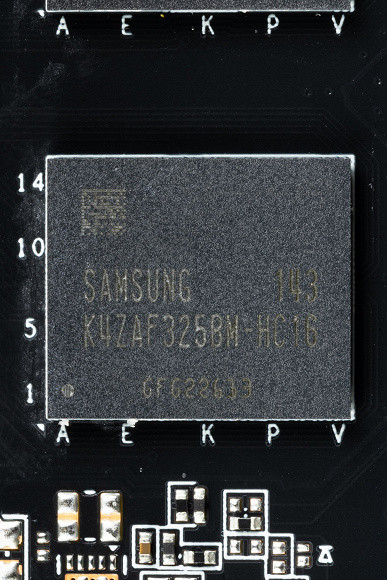

Memory

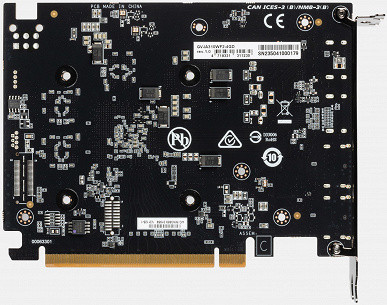

The card has 4 GB of GDDR6 SDRAM memory, located in two 16 Gbit chips on the front side of the PCB. The Samsung memory chips are designed for a nominal operating frequency of 2000 (16000) MHz.
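
The capacity and the two frequency figures are related by simple arithmetic. A minimal sketch, with hypothetical function names; the factor of 8 between physical and effective frequency is an assumption inferred from the pairs quoted in this review, 2000 (16000) and 1937 (15500):

```python
def capacity_gb(chip_count: int, chip_density_gbit: int) -> float:
    """Total capacity in gigabytes: chips x density in Gbit / 8 bits per byte."""
    return chip_count * chip_density_gbit / 8


def effective_mhz(physical_mhz: float, transfers_per_clock: int = 8) -> float:
    """Effective frequency; the multiplier of 8 is inferred from the review's figures."""
    return physical_mhz * transfers_per_clock


total_gb = capacity_gb(2, 16)        # two 16 Gbit chips
rated_eff = effective_mhz(2000)      # what the chips are rated for
actual_eff = effective_mhz(1937)     # what the card runs at, about 15500 after rounding
print(total_gb, rated_eff, actual_eff)
```

On these figures the memory runs slightly below the frequency the chips are rated for.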

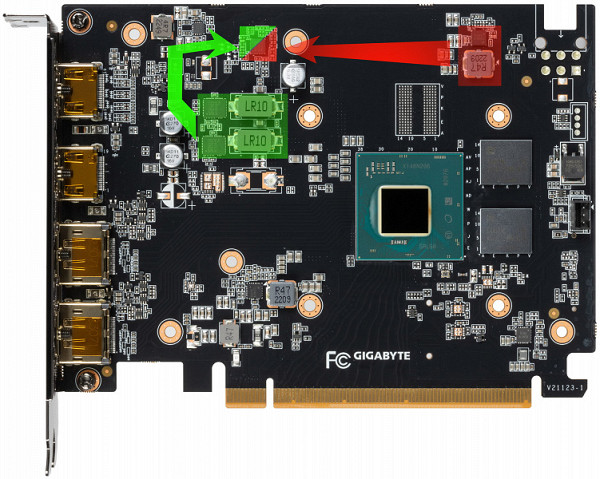

Card features and comparison with Intel Arc A380

We compare this card with the Arc A380, since the two Intel video cards are positioned close to each other. This is quite logical: the differences between them come down to the cut-down GPU, the narrower memory bus, the operating frequencies, and one fewer memory chip.

The core markings remain encrypted.

The Gigabyte card has 3 power phases in total: 2 for the core and 1 for the memory chips.

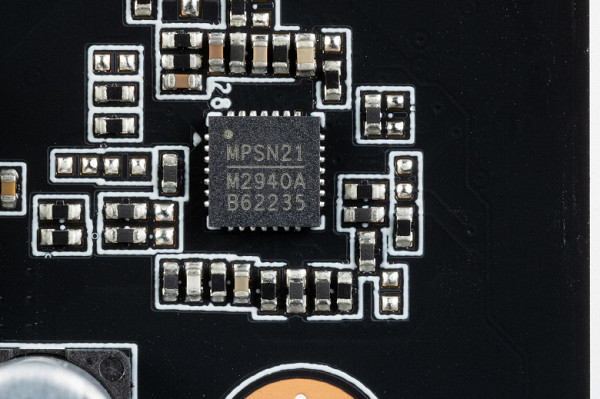

In the image, the core power circuitry is marked in green and the memory circuitry in red. Power for the core and memory chips is managed by an 8-phase MP2940A PWM controller (Monolithic Power Systems), located on the front side of the card.

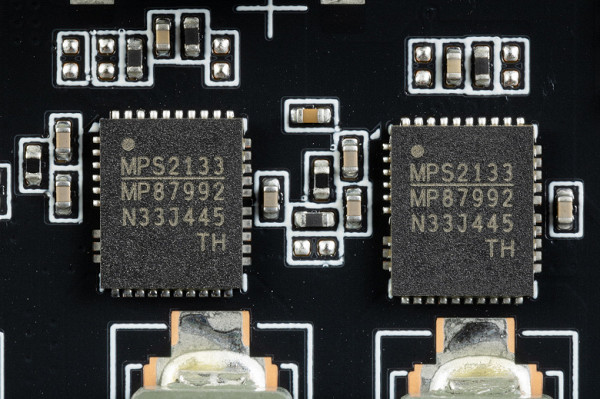

The core power converter uses very expensive DrMOS transistor assemblies — MP86956 (Monolithic Power Systems), each of which is rated for a maximum of 50 A.

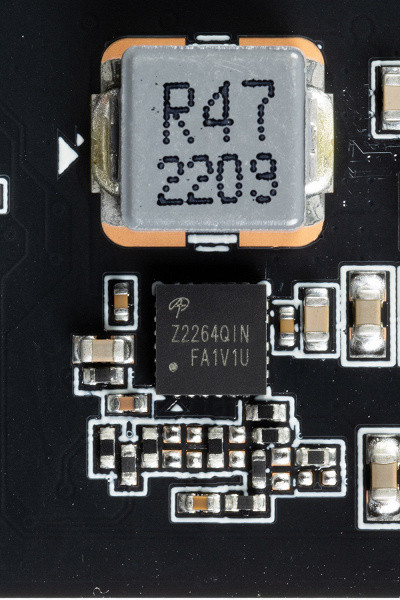

And the power supply circuit for the memory chips uses the DrMOS AOZ2264QIN (Alpha&Omega Semi) assembly, rated for a maximum of 15 A.

We did not find a separate controller for monitoring on the card. This function is probably performed by the GPU itself.

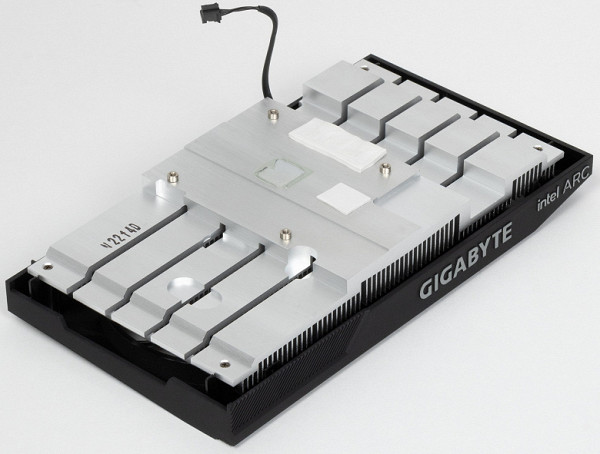

The card's compact dimensions are worth noting, especially its thickness of only 38 mm, thanks to which the video card occupies only 2 slots in the system unit.

The video card is equipped with a standard set of video outputs: two DisplayPort ports (version 2.0) and two HDMI ports (version 2.1).

The operating frequencies of the memory and graphics processor correspond to the reference values. It is important to remember that the performance of an Intel video card can be significantly increased by activating Resizable BAR technology in the motherboard BIOS Setup, which provides direct processor access to video memory.

The power consumption of the Gigabyte video card in tests reaches 38 W. The card does not require additional power to operate.

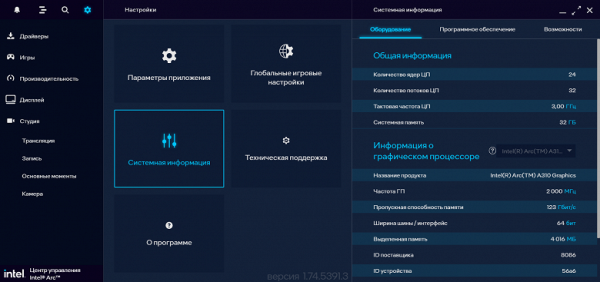

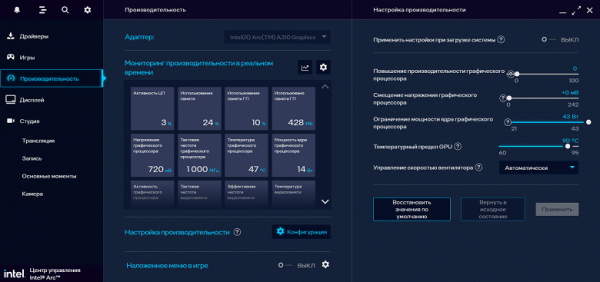

The card's operation is controlled using a proprietary utility included in the Intel software package.

General settings

Information panel

Frequency and limit management settings

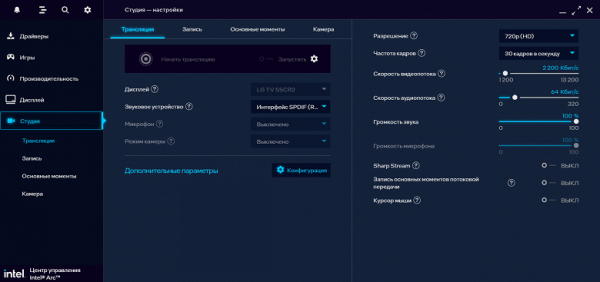

Video broadcast settings panel

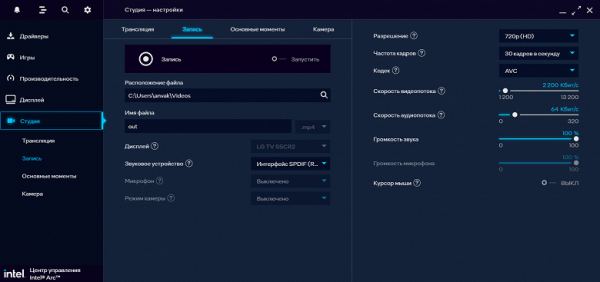

Video capture recording settings

Graphics settings panel

Heating and cooling

The cooling system is built around a very simple aluminum plate heatsink. With the card's total consumption of about 40 W, a bulky cooler is clearly not required.

The heatsink on the graphics card cools both the GPU and memory chips using thermal pads for efficient heat dissipation. VRM power converters are not equipped with cooling elements.

In addition, there is no plate on the back of the card for additional cooling.

On top of the heatsink sits a shroud with two 80 mm fans that operate at the same speed.

When the graphics card is under low load, the fans stop once the GPU temperature drops below 50 degrees and the memory chip temperature drops below 70 degrees. Once the video driver has loaded and polled the operating temperature, the fans turn off.

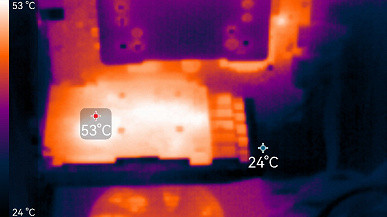

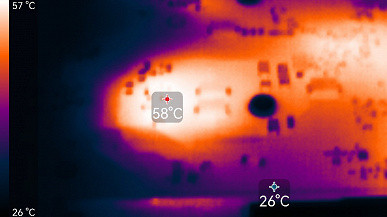

Temperature monitoring:

After a two-hour test under maximum load, the temperature of the core and memory chips remained below 58 degrees, which is an excellent indicator. The card's power consumption was up to 38 W.

The maximum heating was observed in the central part of the printed circuit board (PCB).

Noise

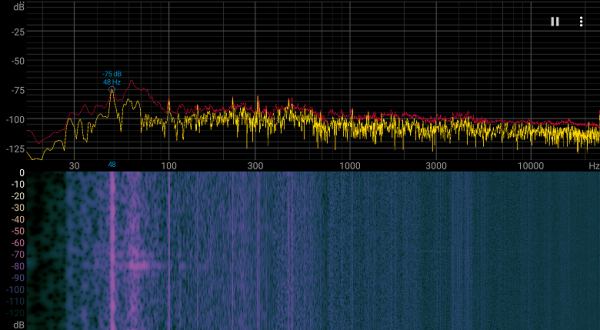

The noise measurement technique assumes that the room is soundproofed and attenuated, with reduced reverberations. The system unit in which the video card noise is analyzed is not equipped with fans and is not a source of mechanical noise. The background level of 18 dBA is the noise level in the room and also the noise level of the sound level meter itself. The measurements were taken at a distance of 50 cm from the video card at the level of the cooling system.

Measurement modes:

- Idle mode in 2D: an Internet browser with the site iXBT.com, a Microsoft Word window, and several Internet communicators are loaded.

- 2D mode with movie viewing: SmoothVideo Project (SVP) was used for hardware decoding with insertion of intermediate frames.

- 3D mode with maximum load on the accelerator: the FurMark test was used.

The noise level rating is as follows:

- Less than 20 dBA: relatively silent.

- From 20 to 25 dBA: very quiet.

- From 25 to 30 dBA: quiet.

- From 30 to 35 dBA: clearly audible.

- From 35 to 40 dBA: loud, but tolerable.

- Above 40 dBA: very loud.
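
The rating scale above can be expressed as a small lookup. This is only a sketch: the function name is hypothetical, and the handling of exact boundary values (each band includes its lower bound, and 40 dBA itself counts as "very loud") is an assumption, since the list does not specify it.

```python
# (upper bound in dBA, rating); each band covers values below its upper bound.
NOISE_BANDS = [
    (20.0, "relatively silent"),
    (25.0, "very quiet"),
    (30.0, "quiet"),
    (35.0, "clearly audible"),
    (40.0, "loud, but tolerable"),
]


def noise_rating(level_dba: float) -> str:
    """Map a measured noise level to the review's verbal rating."""
    for upper_bound, rating in NOISE_BANDS:
        if level_dba < upper_bound:
            return rating
    return "very loud"


print(noise_rating(18.0))  # background level measured in the room
print(noise_rating(27.5))  # Gigabyte card at maximum 3D load
```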

In 2D idle mode, the temperature was no more than 52-54 °C, the fans were mostly stopped, and the noise level was equal to the background: 18 dBA. Periodically, the fans would start for a short time and then stop again. This is an engineering oversight; the fan-stop threshold should have been tuned more carefully.

When watching a movie with hardware decoding, nothing changed.

At maximum load in 3D, temperatures reached 58/58/58 °C (core/hot spot/memory). At the same time, the fans spun up to 1832 rpm, the noise increased to 27.5 dBA: it was quiet.

The noise spectrogram is quite smooth; subjectively, no annoying overtones are heard.

Backlight

The card has no backlighting.

Delivery and packaging

In addition to the card itself, the package includes only a short user manual.

Testing: synthetic tests

We tested the new Intel graphics card, evaluating its performance in comparison with other competing models on the market. Our set of synthetic tests is constantly updated, adding new benchmarks and removing outdated ones. Recently, we have started using additional benchmarks to measure the performance of ray tracing and resolution scaling technologies: DLSS, XeSS and FSR. In addition to this, we also include semi-synthetic benchmarks from popular suites such as 3DMark.

To compare the performance of Intel's junior Arc A310 model, we selected several video cards from all three major companies in the discrete GPU market. From Nvidia, we took the GeForce GTX 1650 and GTX 1630, as well as the RTX 3050 in some tests. From AMD, we chose the Radeon RX 6500 XT and RX 5500 XT. We even conducted some tests with more powerful video cards, such as GeForce RTX 3060 and Radeon RX 6600 XT, if we did not have results from lower-end solutions.

Based on the tests performed, we will be able to evaluate the performance of the Arc A310 and compare it with other models from both the Intel line and competitors — Nvidia and AMD.

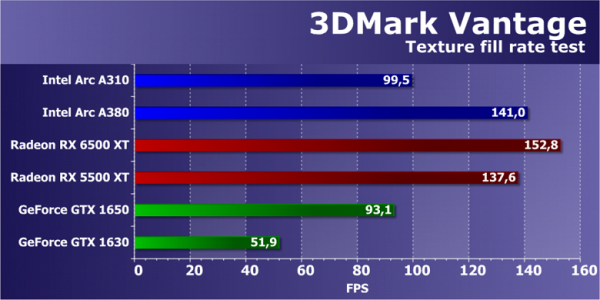

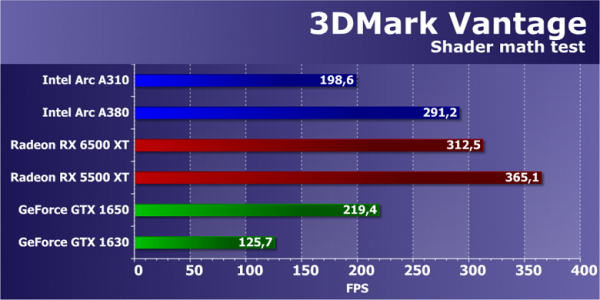

3DMark Vantage tests

We've been including legacy synthetic benchmarks from 3DMark Vantage in our tests for a long time now, as they often provide unique information that can be useful when analyzing the performance of new video cards. Feature tests that support DirectX 10 are especially valuable.

One such test is Feature Test 1: Texture Fill, which evaluates the performance of texture fetch blocks. This test uses filling a rectangle with values from a small texture using multiple texture coordinates that change every frame.

The performance of video cards from different manufacturers and generations in Futuremark's texture test is usually quite high, and the results of this test are often close to theoretical parameters, although there are sometimes slight discrepancies that can be caused by various factors, including driver performance.

Even though Intel drivers don't always work perfectly in DX10 applications, the results of Arc family graphics cards are usually fine in this benchmark. The A310, being the younger version, expectedly lost to the older A380 by about 1.5 times, which corresponds to theoretical expectations.

The Radeon and GeForce models chosen for comparison are very different in terms of speed from each other in this test, and Nvidia graphics cards are generally less performant due to fewer texture units. Even the lowest-end Intel card outperformed the GTX 1650. Comparisons with AMD products show that even the older Radeon RX 5500 XT is stronger, not to mention the RX 6500 XT, which leads this test by a large margin over the Arc A310.

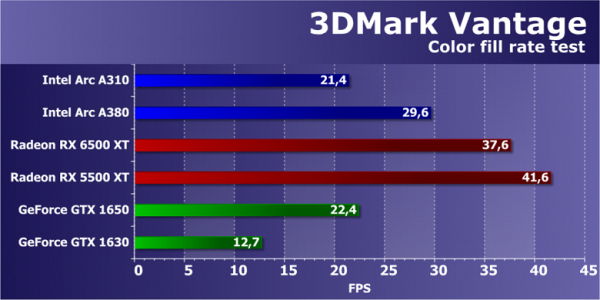

Now about the second task — the fill speed test (Feature Test 2: Color Fill). This test uses a simple pixel shader that does not limit performance. The color value is written to an off-screen buffer using alpha blending. A 16-bit FP16 off-screen buffer is used, making this test modern and relevant for games with HDR rendering.

The results of the second 3DMark Vantage subtest show the performance of the ROP units without taking video memory bandwidth into account. The test usually measures the performance of the ROP subsystem itself, and memory has little effect on the result. Here again, AMD and Nvidia graphics cards are very different from each other in terms of pixel filling rate, and GeForce again lags behind similarly priced competitors.

The Arc A310 naturally lags behind the A380 due to fewer ROPs, but the roughly 40% difference is close to the theoretical difference. The Intel graphics card still shows good performance compared to Nvidia, ahead of the GTX 1630 and almost catching up with the GTX 1650. However, the Radeon RX 6500 XT showed even better performance, almost double the results of the A310. But the most interesting point is the RX 5500 XT, which showed noticeable progress, beating even the latest video cards.
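
The comparisons in this section state gaps in two ways: as a factor ("about 1.5 times") and as a percentage ("roughly 40%"). The sketch below shows how the two relate, assuming the percentage means how far the slower card's score falls below the faster card's score; the text does not say which convention it uses, and the function names are hypothetical.

```python
def factor_to_percent_behind(factor: float) -> float:
    """Shortfall of the slower card in percent, if the faster card scores `factor` times higher."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return (1 - 1 / factor) * 100


def percent_behind_to_factor(percent: float) -> float:
    """How many times higher the faster card scores, if the slower one is `percent` behind."""
    if not 0 <= percent < 100:
        raise ValueError("percent must be in [0, 100)")
    return 1 / (1 - percent / 100)


print(round(factor_to_percent_behind(1.5), 1))   # a 1.5x gap as a percentage
print(round(percent_behind_to_factor(40), 2))    # a 40% shortfall as a factor
```

Under this convention, a 1.5x gap is a shortfall of about a third, and a 50% shortfall is a twofold gap.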

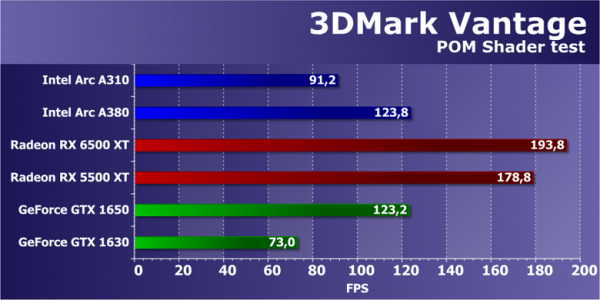

Now about the third subtest: Parallax Occlusion Mapping. This test is of particular interest because Parallax Occlusion Mapping has been used in games for a long time. It involves complex ray tracing operations and uses a high resolution depth map, making it very resource intensive. The test also includes heavy Strauss lighting calculations. As a result, this test is a significant load on the pixel shader, which contains numerous texture samples and complex lighting calculations.

The results of this test from the 3DMark Vantage package are determined not only by the speed of mathematical calculations, the efficiency of branch execution or the speed of texture fetches, but also by several other parameters simultaneously. What is important is the correct balance of the GPU and the efficiency of executing complex shaders, which is not usually the case with Intel GPUs. Their chips don't always have the same efficient balance and organization as their competitors, but by using more complex GPUs in the same price ranges, their solutions don't look as bad.

In the third subtest of 3DMark Vantage, the Arc A310 again performed as expected relative to the A380, falling noticeably behind it. The gap between them exceeds a third but stops short of the twofold difference that might be expected in some cases. Compared to the competition, Intel's solution is clearly superior to the GTX 1630 but inferior to the GTX 1650, and the Radeon RX 6500 XT delivers more than double the performance of the A310. Apparently, it will be extremely difficult to compete with this AMD solution in games.

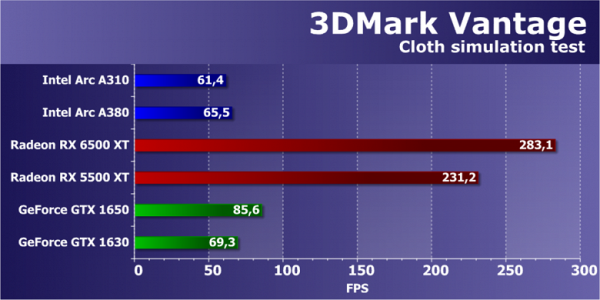

Now to the fourth subtest: GPU Cloth. This test simulates physical interactions (fabric simulation) using the GPU. Vertex simulation is used with the combined work of vertex and geometry shaders, as well as several passes. Stream out is used to transfer vertices from one simulation pass to another. Thus, the test evaluates the execution performance of vertex and geometry shaders, as well as the data transfer speed through stream out.

Rendering speed in this test should also depend on several parameters, including geometry processing performance and geometry shader execution efficiency. However, we have been receiving incorrect results for many video cards for a long time, including GeForce and the new Radeon in this test. Such strange and low results for all video cards except Radeon do not make sense for analysis, since they are clearly incorrect. Nowadays no one optimizes drivers for such an ancient test package, which is why the results are as follows.

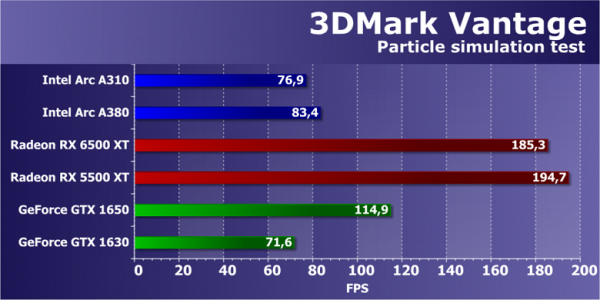

Now to the fifth subtest: GPU Particles. This test evaluates the physics simulation of effects based on particle systems calculated using the GPU. A vertex simulation is used, where each vertex represents a single particle, and stream out is used to pass data between passes. Several hundred thousand particles are calculated, each one is animated separately, and their collisions with the height map are also taken into account. Particle rendering is done using a geometry shader that creates four vertices for each particle from each point. The greatest load falls on the shader units that perform vertex calculations, and the efficiency of stream out is also tested.

The second geometry test from 3DMark Vantage also shows results that are far from theory, although for GeForce they are a little closer to expectations than in the previous subtest. Intel graphics cards continue to lag behind, although they managed to overtake the GTX 1630. All of these results also look incorrect, given that the Intel GPUs should have been stronger and should have differed from each other far more.

Now about the last feature test of the Vantage package: Perlin Noise. This test is mathematically intensive on the GPU as it performs multiple octaves of Perlin noise algorithm calculations in the pixel shader. Each color channel uses its own noise function to create a large load on the video chip. The Perlin Noise algorithm is widely used in procedural texturing and requires significant computational resources.

In the mathematical test, the performance of video cards, although not always in line with theory, is usually close to the peak performance of GPUs in the most demanding tasks. This test uses floating point operations for calculations, allowing modern GPU architectures to unleash some of their new capabilities. The test is outdated and does not fully reflect the potential of modern GPUs, but it can still be used to evaluate maximum mathematical performance.

GPUs that compete on price perform more similarly in this test, although the GeForce is still slightly slower. The junior Intel graphics processor is significantly inferior even to the A380, by about one and a half times, in line with theory. However, the company's unbalanced GPUs can result in high scores in simple math tests that don't always reflect actual gaming performance. We doubt that the A310 will be able to compete in speed with the GTX 1650, and there is no doubt that it will be inferior to the RX 6500 XT.

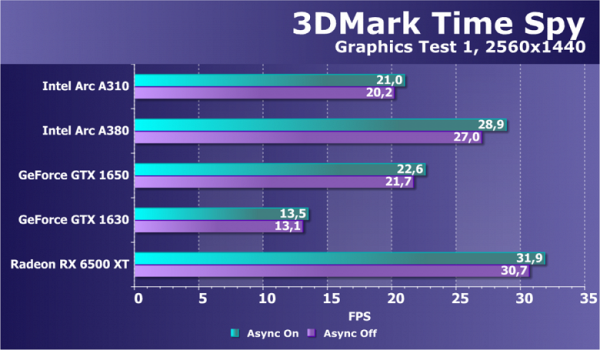

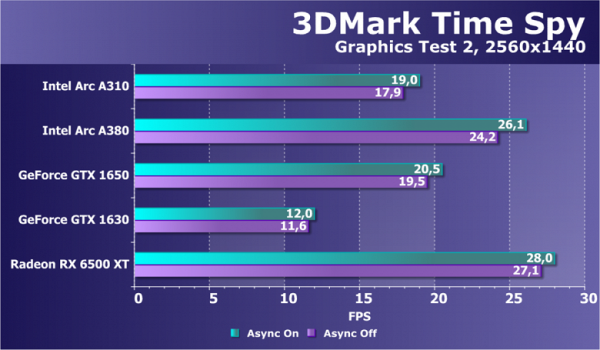

Direct3D 12 tests

We decided to exclude examples from Microsoft's DirectX SDK and from AMD's SDK, which use the Direct3D12 graphics API, from our tests. For a long time they showed incorrect results in most cases. Instead, we chose the famous Time Spy benchmark from 3DMark as our main Direct3D12-enabled computing test. We are interested not only in a general comparison of GPU power, but also in the difference in performance with the asynchronous computing support introduced in DirectX 12 enabled and disabled. To be sure, we tested the video cards in both graphics tests.

The results of this test often correlate with gaming performance, although not with absolute accuracy, of course. It is important to consider that all companies actively optimize drivers for Time Spy, and Intel is no exception. As a result, the A310 trails the A380 by about 35%-40% in this test, which is in line with expectations. The younger model also surpassed the GTX 1630 in speed and almost caught up with the GTX 1650. At the same time, the Radeon RX 6500 XT is ahead of everyone else. However, in this test, Intel showed good efficiency in using GPU execution units. We will monitor the results in other tests.

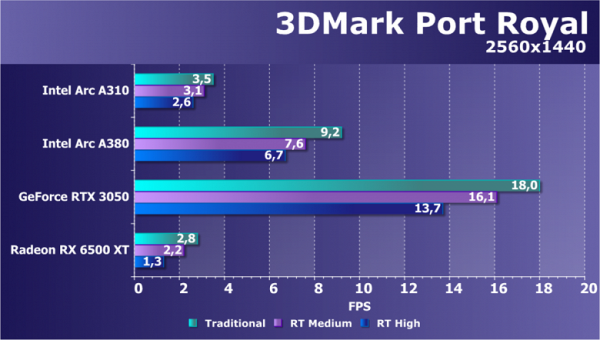

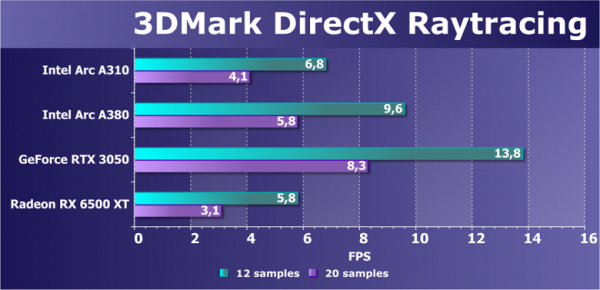

Ray tracing tests

One of the first benchmarks to evaluate ray tracing performance is Port Royal, created by the developers of the famous 3DMark series of tests. This test is available on all GPUs that support the DirectX Raytracing API. We tested several video cards at 2560x1440 resolution on various settings. During the test, reflections were calculated using ray tracing in two modes, as well as the traditional rasterization method.

The Port Royal benchmark is an indicator of new ray tracing capabilities through the DXR API. It includes reflection and shadow rendering algorithms that use this technology. The test is not perfectly optimized and requires significant resources even from powerful GPUs. However, it is well suited for comparing the performance of different graphics cards in this particular task.

The results of low-end Intel graphics cards in this test highlight several important points. Firstly, 4 GB of video memory is clearly not enough: the A310 trails the A380 by more than half, a gap that theory cannot explain by anything other than the lack of video memory.

The Radeon RX 6500 XT fares even worse: its 4 GB of local memory is also insufficient, and its ray tracing units are much simpler. AMD's RDNA 2 architecture lags behind both GeForce and Intel graphics cards here, especially in demanding conditions.

The second ray tracing subtest in 3DMark does not use rasterization, so it better reflects GPU speed in hardware-accelerated tracing. The scene in this test is already known from other 3DMark subtests and is quite small, so a large cache can benefit many solutions, including Radeon and Arc graphics cards.

The Arc A310 reviewed today showed an impressive result here, trailing the A380 by about 40%. This is close to the theoretical difference and indicates a high-quality implementation of hardware-accelerated ray tracing even in Intel's least powerful GPU. In this test, the low-end Intel video card outperformed even the RX 6500 XT, which casts doubt on how AMD's solutions perform against their competitors.
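The reasoning used throughout this section, comparing a measured gap with the theoretical one and suspecting the 4 GB of memory when the measured gap is much larger, can be sketched as follows. The function name, the 15% tolerance and all numbers are assumptions chosen for illustration; the review does not list its theoretical ratio or raw scores here.

```python
def gap_exceeds_theory(
    slower_score: float,
    faster_score: float,
    theoretical_ratio: float,
    tolerance: float = 0.15,
) -> bool:
    """Return True when the measured speed ratio is clearly above theory.

    theoretical_ratio: expected faster/slower ratio from specifications
                       (hypothetical input, supplied by the caller).
    tolerance:         relative slack allowed before the gap is flagged
                       (an arbitrary illustrative threshold).
    """
    if slower_score <= 0 or theoretical_ratio <= 0:
        raise ValueError("scores and ratios must be positive")
    measured_ratio = faster_score / slower_score
    return measured_ratio > theoretical_ratio * (1.0 + tolerance)


# Hypothetical case 1: the slower card trails by 40% (ratio ~1.67),
# close to an assumed theoretical ratio of 1.6 -> not flagged.
print(gap_exceeds_theory(60.0, 100.0, theoretical_ratio=1.6))  # False

# Hypothetical case 2: the slower card is more than twice as slow
# (ratio ~2.22) -> flagged, something besides raw GPU power limits it.
print(gap_exceeds_theory(45.0, 100.0, theoretical_ratio=1.6))  # True
```

A flagged result does not prove that video memory is the cause; it only shows that the gap is larger than compute specifications alone would suggest.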

The RT units of the Intel microarchitecture proved very effective in this test, where the load falls mainly on them. Even so, the Arc A310 remains far behind the RTX 3050, its direct price competitor.

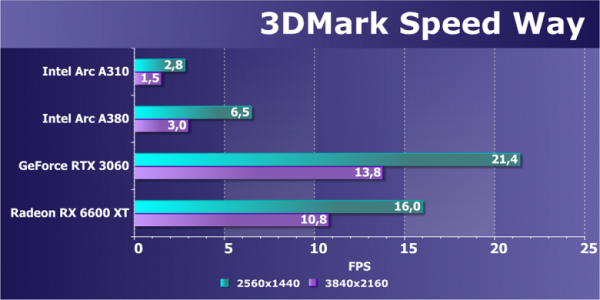

With the arrival of new generations of Nvidia and AMD GPUs, another test was released last year as part of the 3DMark suite: Speed Way. Its load resembles that of future games that will make heavy use of ray tracing, so it is of great interest to us.

In this test, the scenario is significantly different — the RT blocks are no longer as heavily loaded as in more synthetic tests, where ray tracing performance is measured exclusively. Instead, other blocks begin to work more actively. As a result, Radeon video cards are no longer behind GeForce as much as in the previous test. However, this can be explained by the choice of the more expensive RX 6600 XT and RTX 3060 for comparison, since there are no results for lower-end AMD and Nvidia video cards in this test.

Thus, the gap separating the A310 and A380 from these cards is explained by the difference in GPU class and price. The fact that the junior model is again more than twice as slow as the senior one also indicates that this test needs more video memory.

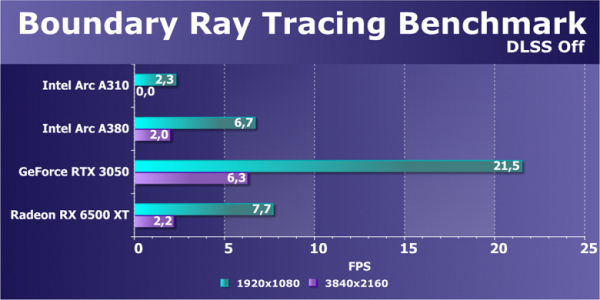

Next, it is worth considering a semi-synthetic benchmark based on a game engine. Boundary is a Chinese game project that supports DXR and DLSS. The benchmark puts GPUs under heavy load, using ray tracing for complex reflections, soft shadows, and global illumination. Note that the test supports DLSS for improving performance, but not XeSS or FSR.

Even in Full HD, none of the video cards presented has enough power to reach a minimally comfortable 30 FPS, not to mention 4K. The Arc A310 trailed its older sibling by a factor of three, which is more than the theoretical difference and once again indicates a lack of video memory: 4 GB is extremely little for modern applications. At 4K, the benchmark did not even run on the A310 after the slideshow it produced in Full HD, which looks like a bug in the Intel driver for this particular test. The A380 is very close to the Radeon RX 6500 XT, although the latter is hampered by the same lack of video memory. 4K without upscaling becomes unplayable even on more powerful GPUs.

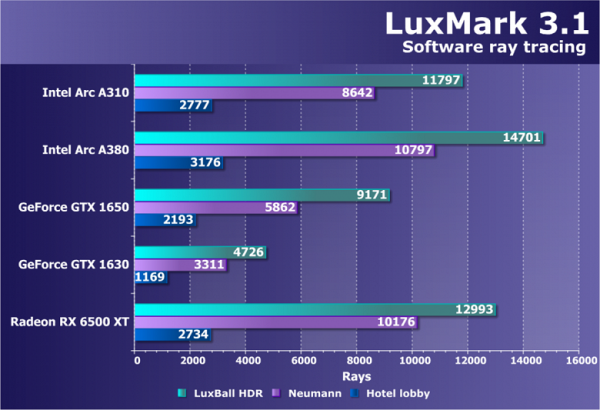

Computational tests

We continue to look for benchmarks that use OpenCL to solve modern computing problems to include in our synthetic benchmark suite. For now, this section still contains only LuxMark 3.1, an old and not very well optimized ray tracing test (without hardware acceleration). This cross-platform test is based on LuxRender and runs on OpenCL.

This first test was an exception: the Arc A310 was only about a quarter behind its older sibling, the A380, which explains its impressive results. Even with its significant cutbacks, the Intel graphics card still has enough processing units, high clock speeds and a decent amount of cache. As a result, even the most junior model significantly outperformed the GTX 1630 and also beat the more expensive GTX 1650. It was only slightly behind the RX 6500 XT, and in the most difficult test the A310 even showed a nominal lead over its AMD competitor. All in all, the results are very good.

Unfortunately, none of our other compute benchmarks is suitable for Arc graphics cards: neither V-Ray Benchmark, nor OctaneRender, nor even Cinebench 2024. They are also based on ray tracing, executed either with hardware acceleration or without it.

Tests of DLSS/XeSS/FSR technologies

In this section we add tests of various performance-enhancing technologies. Previously, this meant only resolution scaling technologies (DLSS 1.x and 2.x, FSR 1.0 and 2.0, XeSS), but they have recently been joined by frame generation, currently only in Nvidia's variant, DLSS 3.

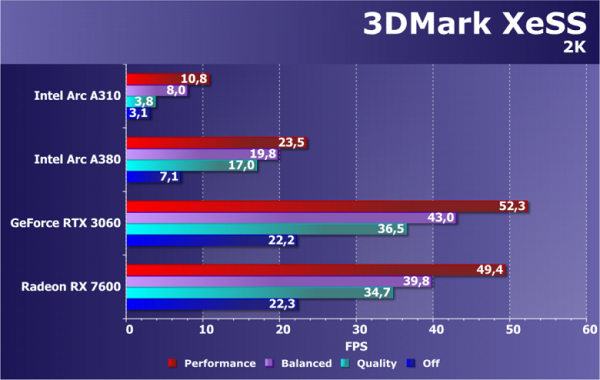

Let's look at improving performance by rendering at a lower resolution and scaling the image up to a higher one with XeSS, Intel's analogue of DLSS 2.0. It also uses artificial intelligence, with hardware acceleration on the matrix units of the Arc family, to reconstruct information in the frame. Unlike DLSS 2, it works not only on its developer's video cards but on all modern GPUs, although not as efficiently as on Intel solutions. For testing, we used the dedicated benchmark from the 3DMark package at a resolution of 2560x1440.

When XeSS is activated, the frame rate more than doubles, although at some cost in image quality. This time, for comparison, we even included the RTX 3060 and RX 7600, which are clearly more expensive than the Intel graphics card under review. This turned out to matter little, however: 4 GB of memory was once again not enough for the Intel GPU, and the A310 simply failed the test, showing results 2.5-3 times worse than the A380. Intel's video cards typically handle XeSS better than Radeon and GeForce, but not today.

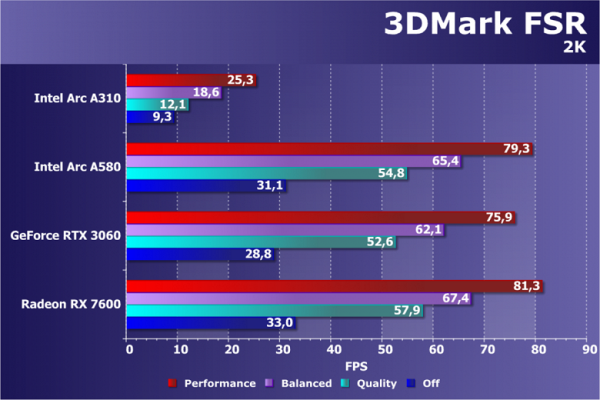

Another member of the family of upscaling technologies is AMD's FSR 2.0. For some reason, it was the last to appear in the list of specialized 3DMark subtests. Unfortunately, the subtests for the different upscaling technologies use different scenes and cannot be compared directly. We can only estimate the performance gain, and even then we have to take into account the actual rendering resolution and the differences in quality, which complicates the task.

FSR is another universal technology that performs roughly the same on different GPUs, so don't expect any revelations from the FSR 2.0 tests. This time we again had to compare the Arc A310 with cards that are not its direct price competitors, and there were no A380 results for FSR. So we took the RTX 3060 and RX 7600 again, adding the A580, which is based on the larger chip. Even so, the results of the lower-end Intel graphics card are not impressive: it cannot cope with these tests even at 2K resolution. It is likely that 4 GB of local memory is again insufficient, although the shortage here is not as severe as in the previous tests. Still, simply turning on FSR Performance mode lets the A310 reach at least 25 FPS instead of an average of 9 FPS without it.
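To put the FSR figures in context, the sketch below estimates the internal rendering resolution for each FSR 2 quality mode and the speedup implied by going from 9 FPS to 25 FPS. The per-axis scale factors are the ones AMD documents for FSR 2 as far as we know; the 2560x1440 output resolution is our assumption for what the text calls 2K, and the helper names are our own.

```python
# Per-axis scale factors of the FSR 2 quality modes (assumed from AMD's
# public FSR 2 documentation; verify against the version in use).
FSR2_SCALE = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def render_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Internal rendering resolution for a given output size and mode."""
    scale = FSR2_SCALE[mode]
    return round(width / scale), round(height / scale)


def speedup(fps_before: float, fps_after: float) -> float:
    """How many times the frame rate increased."""
    return fps_after / fps_before


def frame_time_ms(fps: float) -> float:
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


# Assumed output resolution: 2560x1440.
print(render_resolution(2560, 1440, "performance"))  # (1280, 720)

# Figures quoted in the text: 9 FPS without FSR, 25 FPS with it.
print(f"speedup: {speedup(9, 25):.2f}x")  # speedup: 2.78x
print(f"frame time: {frame_time_ms(9):.1f} ms -> {frame_time_ms(25):.1f} ms")
# frame time: 111.1 ms -> 40.0 ms
```

Performance mode renders a quarter of the output pixels, so a gain of under 3x suggests that part of the frame cost does not scale with resolution.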

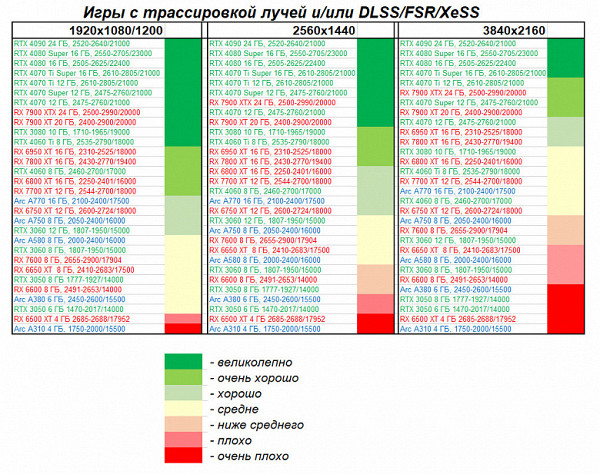

It remains to examine how the new Intel video card performs in games that use all the modern graphics technologies, including hardware-accelerated ray tracing. Will the Arc A310, which looks quite weak on paper, perform well enough in real conditions to be called a gaming card at least conditionally, with all the reservations and limitations taken into account?

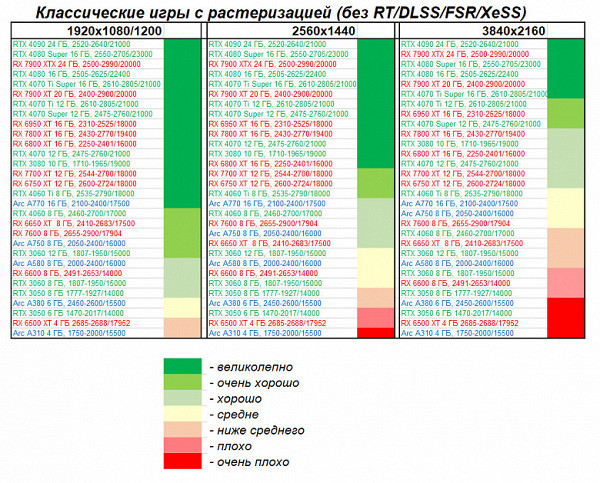

3D gaming performance in a nutshell

Games without ray tracing (classic rasterization):

The Arc A310 is a budget card designed primarily for resolutions up to 1080p. Even in Full HD, you will have to lower the graphics settings in many games to achieve comfortable performance. Among modern video cards, the Arc A310 has practically no competitors, since all the closest alternatives are both more expensive and faster. Compared with older solutions such as the GeForce GTX 1630, GeForce GTX 1650, GeForce GTX 1050 Ti, GeForce GTX 1060 and Radeon RX 550/560, the Arc A310 is slightly slower than the GeForce GTX 1050 Ti, slightly faster than the GeForce GTX 1630, and significantly faster than the Radeon RX 550. At the same time, the GeForce GTX 1060 6 GB and GeForce GTX 1650 are significantly ahead of the Arc A310.

Intel's driver optimization strategy focuses on modern games using DX12/DX11. In very old games, the performance of Arc family video cards may decrease relative to similarly priced competitors.

Games using ray tracing and DLSS/FSR/XeSS:

Conclusions and comparison of energy efficiency

The Intel Arc A310 (4 GB) is Intel's most junior entry-level desktop graphics card. Despite its affordability, the performance of this accelerator is so low that even its modest price seems too high.

Companies such as Asus, MSI and Gigabyte cannot openly resist the “wishes” of Nvidia, which holds a dominant position in the gaming video card market and effectively dictates terms to its partners. Therefore, they do not release video cards based on Intel GPUs (although they make no official statements about this). Such restrictions force partners to choose between working only with AMD, which does not prevent the production of video cards based on Intel GPUs, or abandoning the production of video cards altogether. As a result, Intel Arc cards on the market come from manufacturers such as ASRock, XFX, Sapphire, Acer and some Chinese companies, as well as in small quantities from Gigabyte. However, these cards can be difficult to find, and shipping them from overseas can create warranty issues.

In terms of compatibility, Intel Arc cards require a modern PC with Resizable BAR support, which means AMD 500-series chipsets (X570/B550/A520 and newer) or Intel 500-series chipsets (Z590/B560/H510 and newer). As a result, Arc cards are not a simple upgrade for older gaming PCs on legacy platforms.

Despite Intel's promises to improve driver optimization for older DirectX 9/10 games, it is still difficult to predict how Arc cards will perform in them. Over the past year, however, the gaming performance of the Intel Arc family has improved significantly thanks to driver updates.

In general, low prevalence and limited availability hold back the popularity of Arc video cards. Even with support for DirectX 12 Ultimate and modern technologies such as hardware ray tracing and HDMI 2.1, their high cost and limited availability make them unattractive to most consumers.

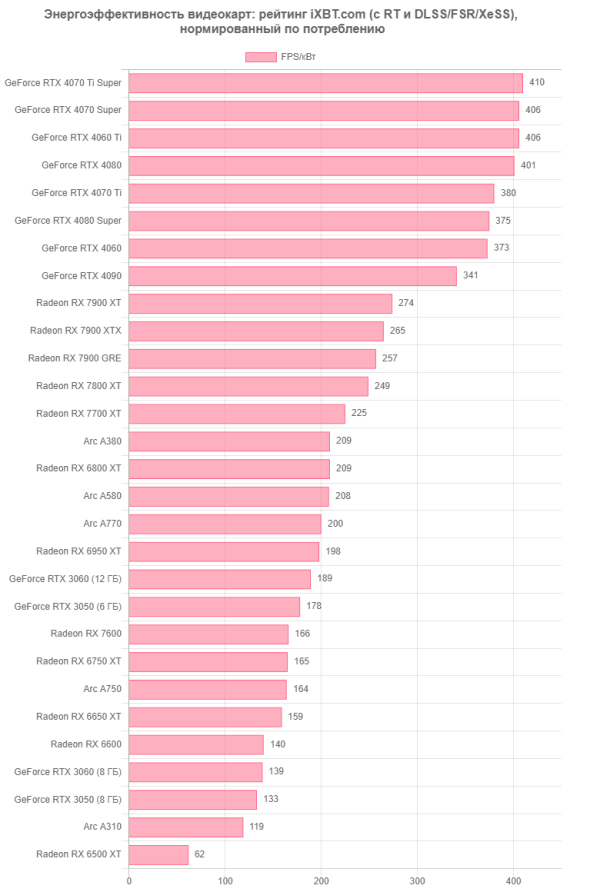

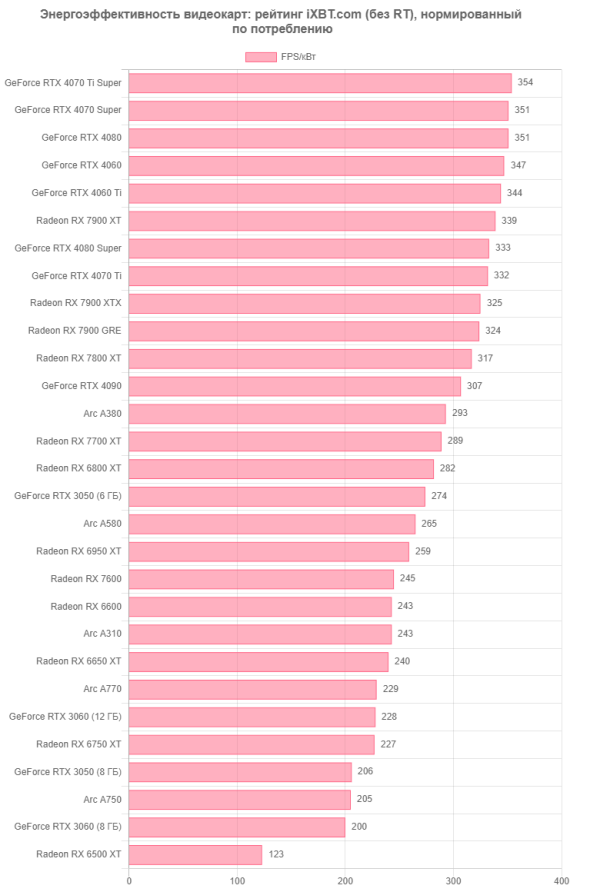

The new Arc A310 is quite energy efficient. In the overall energy efficiency rating, which does not take hardware ray tracing into account, it even surpasses the senior Intel accelerators and holds a respectable position rather than sitting at the very bottom of the list.

The tested Gigabyte Intel Arc A310 WindForce 4G (4 GB) has a standard dual-slot form factor and provides quiet and efficient cooling. The card's power consumption peaks at 38 W, and it does not require an additional power connector.
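An energy efficiency rating of this kind is usually some form of performance per watt. The exact methodology is not described in this section, so the sketch below is only an assumption: it divides an average frame rate by power draw and ranks the cards. Only the 38 W figure comes from the text; the card names, frame rates and the second card's power draw are hypothetical.

```python
def perf_per_watt(avg_fps: float, power_w: float) -> float:
    """Average frames per second delivered per watt of power draw."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return avg_fps / power_w


# name -> (average FPS, power draw in watts); FPS values are invented
# for illustration, 38 W is the figure quoted for the tested card.
cards = {
    "card_a_38w": (30.0, 38.0),
    "card_b_75w": (45.0, 75.0),
}

# Rank from most to least efficient.
ranking = sorted(cards, key=lambda name: perf_per_watt(*cards[name]), reverse=True)

for name in ranking:
    print(f"{name}: {perf_per_watt(*cards[name]):.2f} FPS/W")
# card_a_38w: 0.79 FPS/W
# card_b_75w: 0.60 FPS/W
```

With these invented numbers, the slower card ranks higher because its power draw is much lower.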

Let us note once again that a video card based on the Arc A310 is suitable for games at resolutions no higher than Full HD, and even then many games will require the graphics quality to be reduced to medium or low for acceptable performance. If a game supports scaling technologies such as Intel XeSS or AMD FSR, using them is highly recommended. Support for ray tracing on the Arc A310 is rather formal, since the card's performance is not enough for practical use of this technology.