The massive adoption of the M.2 format in the consumer SSD segment has had curious consequences. The vast majority of retail drives use 2280 boards, (almost) the largest size the standard allows: 22 mm wide and 80 mm long. For high-end devices this is, in principle, the best option. There is room for plenty of flash memory as well as for fairly large controllers that need one or two DRAM chips. In mobile devices, however, these dimensions become a drawback.

In many laptops, tablets and other compact devices, space is limited and a 2280 SSD can be inconvenient. The Steam Deck console, for example, measures 298 × 117 × 49 mm, and there is simply no room inside it for an M.2 2280 drive. Likewise, with the arrival of small laptops and mini-PCs, the M.2 2280 format turns out to be oversized for their compact bodies.

This problem did not go unnoticed, and SSDs in the M.2 2230 format, whose 30 mm length is less than half that of the standard 2280, emerged in response to demand for more compact drives. These SSDs are fully functional and well suited to mobile devices where every millimeter matters. They fit where a 2280 drive would be too large while still providing everything needed for storing data and running applications.

Today we look at a TeamGroup SSD as a typical representative of this shift. At first glance, the company is not especially well known and operates in the shadow of more famous competitors. However, given the complex relationships between controller makers, memory makers and the companies that bring finished drives to market, the picture may not be so simple.

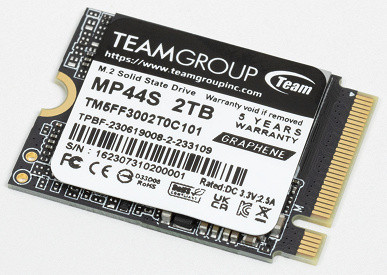

TeamGroup MP44S 2 TB

Those who have not encountered this format before may be surprised by how compact it is. This is not our first encounter with it, so we were interested not only in the external impression but also in what is inside. Last summer we reviewed the MP44L with a Maxio MAP1602 controller and 128-layer YMTC TLC memory and were impressed. That drive also has a bigger brother, the MP44, which uses the same controller with 232-layer memory (similar to that in the recently reviewed Digma Meta M6) and promises to be even faster. Unlike many other manufacturers, Team takes full advantage of the platform's capabilities and even offers 8 TB versions in this line, something that seemed incredible until recently. The 8 TB model went on sale at over $800, and its price has since climbed above $1000, yet there will probably still be buyers for it. What matters for us is that both of these models (MP44L and MP44) combine the MAP1602 with TLC memory. So what is inside the short MP44S?

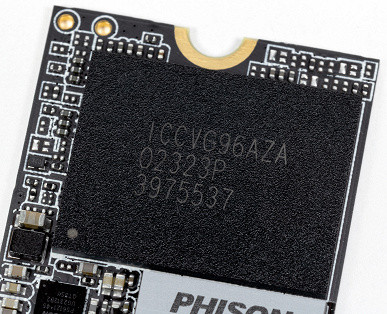

Alas, it is built on a different, also popular platform, one that TeamGroup has only a loose connection with (it sells drives on it, just as Sabrent and Corsair do). This platform appeared when Micron decided to launch mass-market SSDs with QLC memory. Micron previously used controllers exclusively from Silicon Motion, but these now remain only in Crucial SATA SSDs (in the third decade of the 21st century there is no need to launch a new SATA line) and in some OEM models. The company has also stepped back from developing its own controllers and has released nothing since the Crucial P5 Plus. As a result, its main retail models are now SSDs on various Phison platforms: the flagship Crucial T700 on the Phison E26 with PCIe Gen5 support; the Crucial T500 on the Phison E25, which is nearly its equal apart from Gen5 support; and two budget models, the Crucial P3 and P3 Plus. The latter two are identical in hardware, except that in the plain P3 the Phison E21T controller has PCIe Gen4 support disabled, something Phison has long been able to do and makes active use of. Both models use the same memory: Micron's own 176-layer QLC.

Note also that every Phison design is available to all customers, not just the first one. The full-size version of this platform apparently did not interest many partners, but the chance to build inexpensive, high-capacity M.2 2230 SSDs on it has been taken up by many manufacturers. So today we are effectively looking at two SSDs at once, and the Crucial P3 Plus no longer needs separate testing. The only difference is that the P3/P3 Plus also come in a 4 TB version, which is unlikely to fit into the 2230 form factor. In any case, high-capacity budget SSDs appeal only to a small audience, since they are a rather specific product.

To put it bluntly, the MP44S (like similar models) is based on the DRAM-less four-channel Phison E21T controller and Micron N48R 176-layer QLC memory. This combination will hardly thrill a true enthusiast (and may make them wince), but given the main purpose of these SSDs it is quite acceptable. Building a compact external SSD on such a base may not be practical, however tempting it is; we will check that idea later. In this form factor, TLC drives usually top out at 1 TB and cost noticeably more per gigabyte, so the compromise is inevitable. Testing will show how justified it is.

Testing

Testing methodology

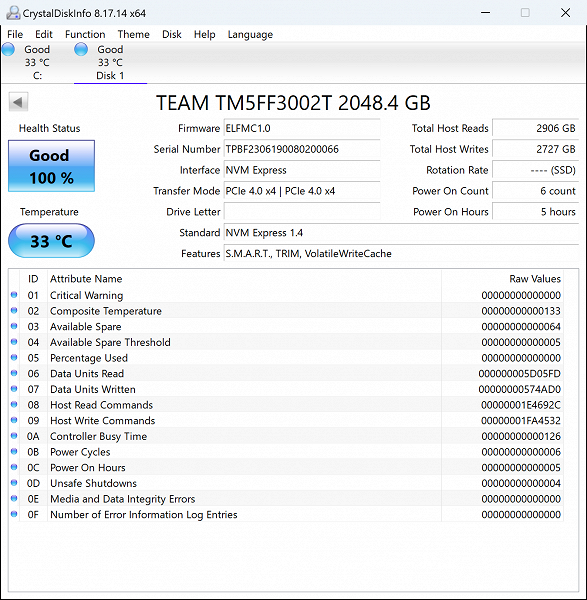

For testing, we used a platform with an Intel Core i9-11900K processor and an Asus ROG Maximus XIII Hero motherboard based on the Intel Z590 chipset. This gave us two ways to connect SSDs: to the PCIe Gen4 lanes, which let modern SSDs reach their full potential, and to the chipset's PCIe Gen3 lanes in compatibility mode. The latter is interesting too, since the chipset's PCIe 3.0 controller first appeared in Intel's 100-series chipsets (in 2015) and has changed only minimally since then. However, we have already studied this controller quite thoroughly, especially with budget models that until recently did not use Gen4. In this test we therefore limit ourselves to the standard mode, connecting the SSDs to the Gen4 lanes, and focus on more relevant questions.

Samples for comparison

First, we decided to compare the Team MP44S with the Kingston NV1 and the Silicon Power UD85 of similar capacity. The Kingston NV1 uses a Phison E13T controller and 64-layer Intel QLC memory, so it effectively represents the previous generation of this platform. The Silicon Power UD85, in turn, has a Phison E19T controller, a kind of intermediate step between the E13T and E21T, and fast TLC memory from YMTC. Note that the drive under test uses exactly the same set of components as the Crucial P3 Plus: a Phison E21T and Micron 176-layer QLC memory. We will analyze how these SSDs perform and how they compare with each other.

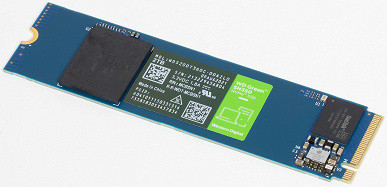

For a more complete picture, we added two notable 2 TB QLC SSDs to the comparison: the Intel SSD 670p and the WD Green SN350. Both are limited to the PCIe Gen3 interface, which caps their peak speeds. The Intel SSD 670p can also be considered somewhat dated now that the Solidigm P41 Plus has appeared, built on the DRAM-less Silicon Motion SM2269XT controller with PCIe Gen4 support. The 670p and the P41 Plus use the same 144-layer Intel QLC memory, but only the 670p keeps a DRAM buffer, and it has not yet fully disappeared from the Solidigm lineup. The 670p and the SN350 can therefore serve as reference points for our analysis.

Filling with data

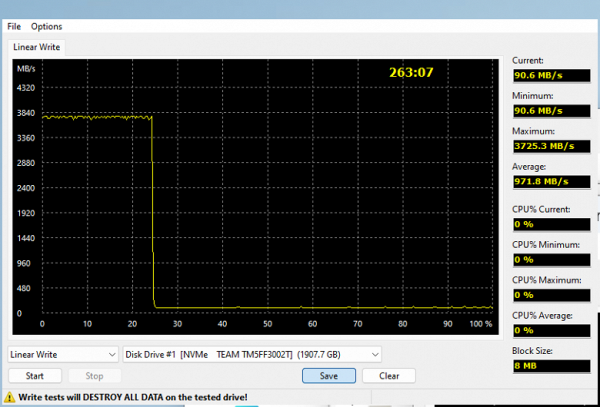

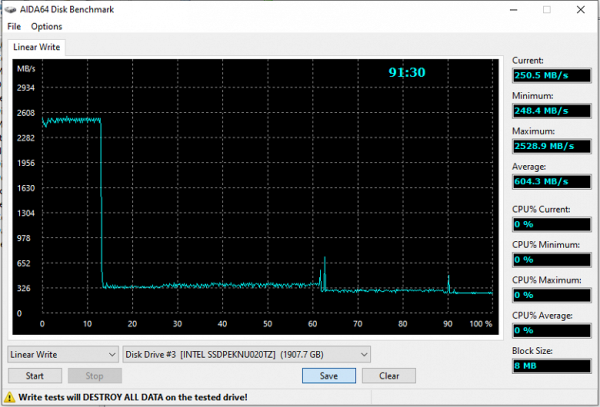

Fear and trembling. Not unexpected, of course: given the controller and QLC memory, such results were predictable. Writing is fast only while data fits into the SLC cache; after that, new data is written slowly while the cache is flushed at the same time, at only about 90 MB/s. There is no point comparing this with decent SATA models, since even laptop hard drives can be faster on some areas of the platter. Why do manufacturers use QLC at all? First, it is cheaper. Second, there are practically no scenarios that require writing hundreds of gigabytes quickly, and small amounts of data land in the cache at high speed. As long as that holds, everything is fine. Step outside that pattern, however, and trouble is close at hand. In a game console, for example, this behavior is practically unnoticeable except when installing games or downloading major updates, and even then the result depends on other factors such as Internet connection speed. It is important to keep these traits of such drives in mind.
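
For illustration, the fill behavior described above can be reduced to a simple two-phase model. This is our own idealization, not Phison's actual algorithm: $C$ is the amount of data the SLC cache absorbs on an empty drive, $v_c$ the write speed into the cache, and $v_f$ the speed once the cache is exhausted (about 90 MB/s for the MP44S here), with data sizes in megabytes and speeds in MB/s. Writing $D$ megabytes then takes roughly

$$
T(D) \approx \frac{\min(D,\ C)}{v_c} + \frac{\max(D - C,\ 0)}{v_f},
\qquad
v_{\mathrm{eff}}(D) = \frac{D}{T(D)}
$$

For $D \le C$ the effective speed is simply $v_c$, which is all a short benchmark ever sees. Past the cache, the second term dominates: with $v_f = 90$ MB/s, every extra 100 GB ($10^5$ MB) adds about $10^5 / 90 \approx 1100$ s, roughly 18.5 minutes.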

To be fair, the situation should be viewed from different angles. Modern QLC models did not appear out of nowhere: they mainly replace earlier generations with slow controllers and even slower early QLC memory. From that perspective the progress is significant, since new models write data much faster than their predecessors, even allowing for differences in caching.

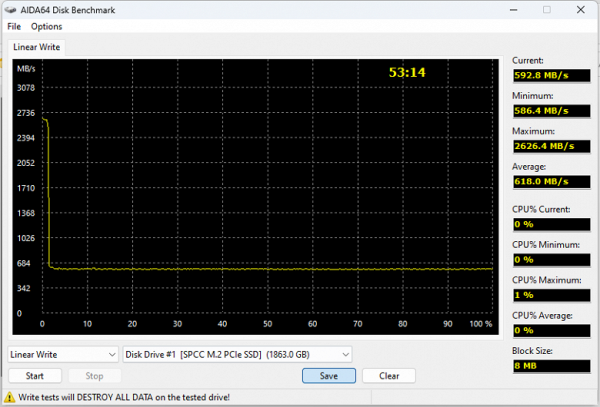

All else being equal, of course, we would prefer behavior like this. The Silicon Power UD85 uses a small static SLC cache, but thanks to its fast TLC memory it does not slow down much once the cache is full. Such a drive can compete not only with any hard drive but also with any SATA SSD. We repeat, though, that this is only possible with TLC: cheaper QLC forces a drive to rely entirely on the cache, because high speed outside it is hard to achieve.

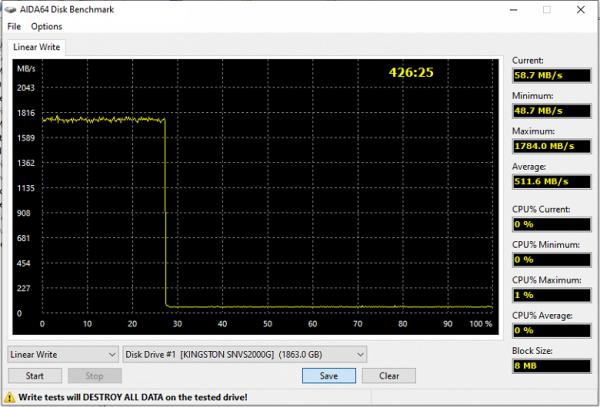

Or almost impossible: the WD Green SN350 finishes the job twice as fast, because its write speed outside the cache is twice as high. Writing into its cache is somewhat slower, but that is because its controller supports only PCIe Gen3. This makes the comparison of these two drives in less trivial scenarios all the more interesting.

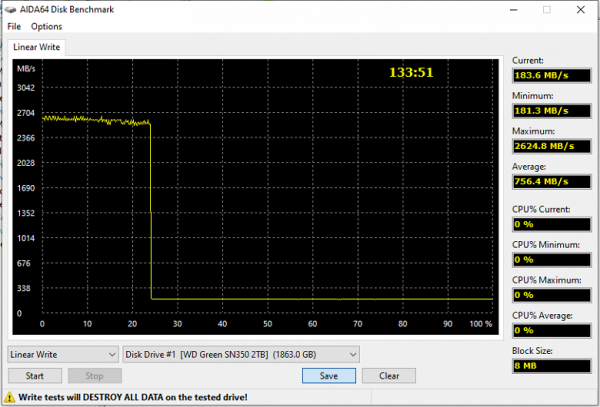

The Intel drive is faster still, and here it comes close to many budget SSDs with TLC memory. The company took the use of QLC very seriously, so in its latest in-house product the SLC cache covers only half of the free cells, after which data is written directly to QLC at about 350 MB/s, with about 250 MB/s for processing the tail. It will be interesting to see whether Solidigm SSDs keep this approach. They should, since they are developed by the same people; only the brand and the general manager have changed, and these engineers have already proven their competence. Phison does not do this. To be fair (again, looking from different angles), Intel SSDs have always been expensive, while Phison's budget QLC platforms are genuinely affordable, and the new ones are noticeably better than the old ones. For this money, that is something to be satisfied with. Or, if the opportunity exists, choose a drive from another segment in the first place.
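
To see why Intel's choice pays off over a full-drive fill, the sketch below compares the two strategies with an idealized two-phase write model. It is our illustration, not either vendor's firmware logic: only the post-cache speeds (about 90 MB/s for the MP44S and about 350 MB/s for the 670p) come from this review, while the in-cache speeds are made-up round numbers, the cache sizes assume that an SLC cell stores 1 bit instead of 4, the ~250 MB/s tail phase is ignored, and the class name `TwoPhaseWriteModel` is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TwoPhaseWriteModel:
    """Hypothetical SLC-cache model: a fast cached phase, then a slower post-cache phase.

    A deliberate simplification; real controllers fold, flush and throttle
    in more complex ways.
    """

    cache_gb: float          # data absorbed at SLC speed on an empty drive
    cache_speed_mbs: float   # write speed while the SLC cache has room
    after_speed_mbs: float   # write speed once the cache is exhausted

    def seconds_to_write(self, amount_gb: float) -> float:
        cached = min(amount_gb, self.cache_gb)
        uncached = max(amount_gb - self.cache_gb, 0.0)
        # 1 GB is treated as 1000 MB to match the MB/s units.
        return cached * 1000 / self.cache_speed_mbs + uncached * 1000 / self.after_speed_mbs


CAPACITY_GB = 2000  # nominal 2 TB drive

# Assumption: a cache over all free QLC cells stores 1 bit per cell instead of 4,
# so on an empty drive it holds roughly a quarter of the nominal capacity.
all_cells_cache = TwoPhaseWriteModel(CAPACITY_GB / 4, cache_speed_mbs=4000, after_speed_mbs=90)
# Assumption: a cache over half of the free cells (an eighth of capacity),
# followed by direct QLC writes at about 350 MB/s.
half_cells_cache = TwoPhaseWriteModel(CAPACITY_GB / 8, cache_speed_mbs=1800, after_speed_mbs=350)

for name, model in [("all free cells", all_cells_cache), ("half of free cells", half_cells_cache)]:
    hours = model.seconds_to_write(CAPACITY_GB) / 3600
    print(f"{name}: full-drive fill takes about {hours:.1f} h")
```

With these assumed numbers, the all-free-cells scheme needs about 4.7 h to fill the drive and the half-cache scheme about 1.4 h. The smaller cache loses a little time up front but finishes more than three times sooner, because its post-cache speed is almost four times higher.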

Maximum speed characteristics

Low-level benchmarks in general, and CrystalDiskMark 8.0.1 in particular, have long been unable to get past SLC caching, so they effectively measure only the cache itself. Manufacturers' performance specifications are bound by the same limitation, so checking them against test results is always worthwhile. This matters all the more because much of the caching logic is designed to maximize efficiency in real-world scenarios, where most accesses hit the cache. That is how benchmarks can show high speeds even as the memory behind them gets cheaper.

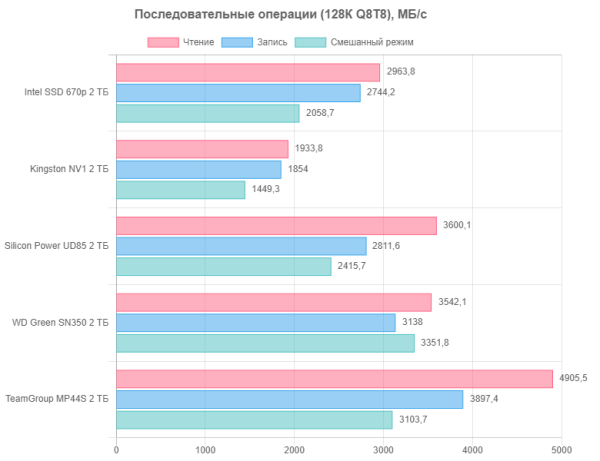

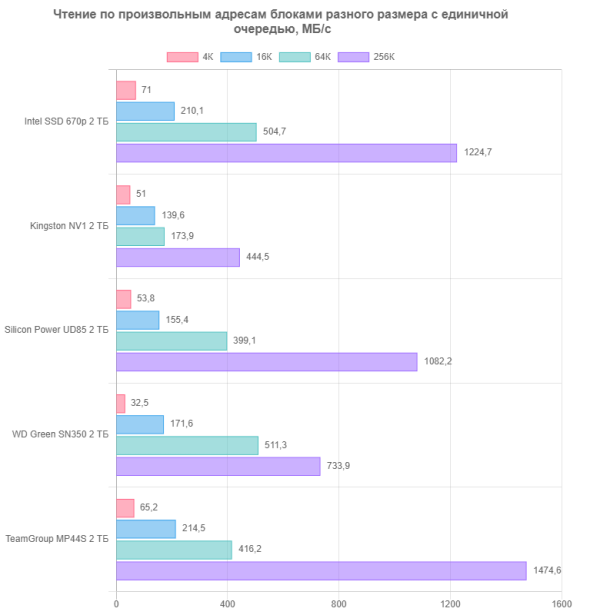

While the drive is working entirely within its cache, the Phison E21T performs excellently. It belongs to the second generation of Gen4 controllers, which can read at up to 5 GB/s (provided nothing else holds them back, as happens with the Kingston NV2). The third generation, now gradually reaching the market, can reach 7 GB/s, while the first generation is barely distinguishable from the best Gen3 models. Modern Gen3 models, in turn, generally outperform older quad-channel designs, which could not reach 2 GB/s even under ideal conditions. Compared with the limits of SATA in the past, such speeds would look absurd, if only they were sustained always and everywhere.
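
Where these generational ceilings come from can be checked with simple link arithmetic. The sketch below computes the theoretical one-direction payload limit of SATA and x4 PCIe links from their signaling rates and line encodings (8b/10b for SATA, 128b/130b for PCIe 3.0 and 4.0). It ignores packet and protocol overhead, so real drives stop somewhat below these numbers; the function name `payload_ceiling_gbs` is ours.

```python
# Per-lane signaling rate (GT/s), lane count and line-code efficiency per interface.
LINKS = {
    "SATA 6 Gb/s": (6.0, 1, 8 / 10),     # single link, 8b/10b encoding
    "PCIe 3.0 x4": (8.0, 4, 128 / 130),  # 128b/130b encoding
    "PCIe 4.0 x4": (16.0, 4, 128 / 130),
}


def payload_ceiling_gbs(rate_gt_s: float, lanes: int, efficiency: float) -> float:
    """Upper bound on one-direction throughput in GB/s after line encoding only.

    Packet headers, flow control and other protocol overhead are ignored,
    so real drives top out noticeably below this figure.
    """
    return rate_gt_s * lanes * efficiency / 8  # 8 bits per byte


for name, params in LINKS.items():
    print(f"{name}: {payload_ceiling_gbs(*params):.2f} GB/s")
```

The result is roughly 0.60 GB/s for SATA, 3.94 GB/s for PCIe 3.0 x4 and 7.88 GB/s for PCIe 4.0 x4. A 5 GB/s read figure is therefore out of reach on Gen3 lanes, while 7 GB/s sits close to the practical Gen4 x4 limit once protocol overhead is taken into account.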

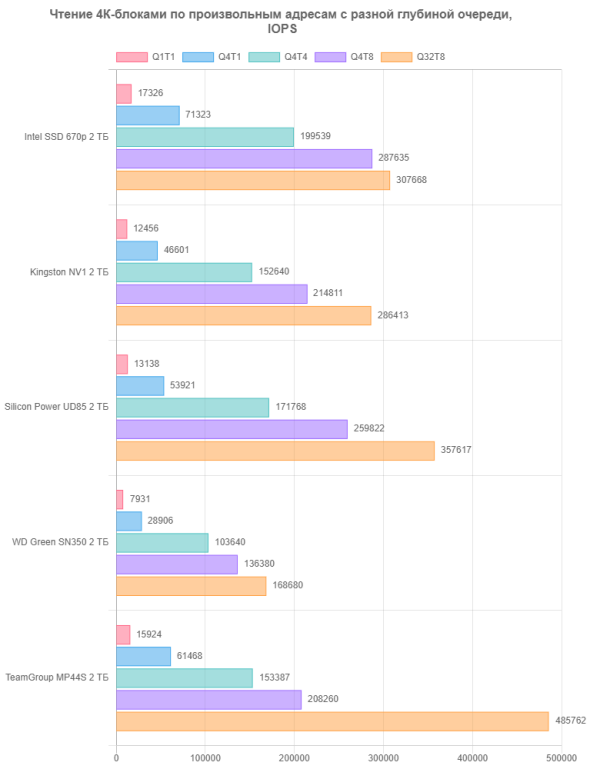

Heavy random workloads have long been a real challenge for manufacturers. Against this background, the weak result of the WD Green SN350 can be explained by a deliberate choice: the company decided that the achieved performance was good enough. Most real-world use cases need no more than about three thousand IOPS, so eight thousand already provides a margin, and quadrupling that figure was probably considered unnecessary because it would far exceed real needs. Testers may not like this approach, but for most users it makes little difference.

It is the Kingston NV1, logically enough, that ends up as the outsider here. However, such loads are used in tests more out of tradition than because of real needs. Some readers may be disappointed by weak small-block results, but in practice real-world workloads rarely involve such heavy loads. Even so, controller makers keep improving their products in this area too.
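
Why deep-queue random results matter little for desktop use follows from Little's law: the IOPS a drive delivers equals the number of requests in flight divided by the average time each one takes. A minimal sketch, with latencies that are hypothetical round numbers rather than measurements from this review; both function names are ours.

```python
def iops_littles_law(queue_depth: int, latency_us: float) -> float:
    """Little's law for storage: IOPS = outstanding requests / mean latency.

    latency_us is the average completion time of one request in microseconds.
    """
    return queue_depth * 1_000_000 / latency_us


def max_latency_us(target_iops: float, queue_depth: int = 1) -> float:
    """Longest mean latency that still sustains target_iops at the given queue depth."""
    return queue_depth * 1_000_000 / target_iops


# Hypothetical latencies chosen for illustration, not measured values.
print(iops_littles_law(1, 100))   # one request at a time, 100 us each
print(iops_littles_law(32, 400))  # 32 requests in flight, 400 us each
print(max_latency_us(3_000))      # the ~3,000 IOPS typical need, at queue depth 1
```

At queue depth 1, the roughly 3,000 IOPS cited above as a typical real-world need is met by any drive averaging under about 333 µs per request. The tens of thousands of IOPS seen in benchmarks require many requests in flight at once, which desktop software rarely generates.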

The speed of small-block random operations does affect the performance of real software. Unlike in the previous tests, long request queues matter less here, but blocks other than 4 KB are much more common. Operations on larger blocks may complete slightly fewer times per second, but each one carries more data, so the resulting throughput in megabytes per second is higher. That is why programs tend to work with such blocks: it improves performance. Even budget SSDs now reach such impressive speeds that software often struggles to make use of them, especially while the drive operates within the SLC cache, which temporarily masks the differences between types of flash memory.
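
The relationship between operation rate, block size and throughput is plain multiplication. In the sketch below, the 3,000 and 8,000 IOPS values echo the figures discussed above, treated here as 4 KiB operations (an assumption), while the 128 KiB rate is a hypothetical example; the function name `throughput_mbs` is ours.

```python
KIB = 1024  # bytes per KiB


def throughput_mbs(iops: float, block_kib: int) -> float:
    """Throughput in MB/s (10^6 bytes per second) at a given IOPS rate and block size."""
    return iops * block_kib * KIB / 1_000_000


# 3,000 and 8,000 IOPS at 4 KiB echo the text; 5,000 IOPS at 128 KiB is hypothetical.
for iops, block in [(3_000, 4), (8_000, 4), (5_000, 128)]:
    print(f"{iops} IOPS x {block} KiB = {throughput_mbs(iops, block):.1f} MB/s")
```

Even at a lower operation rate, the 128 KiB case moves twenty times more data than 8,000 operations on 4 KiB blocks (about 655 MB/s versus 33 MB/s).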

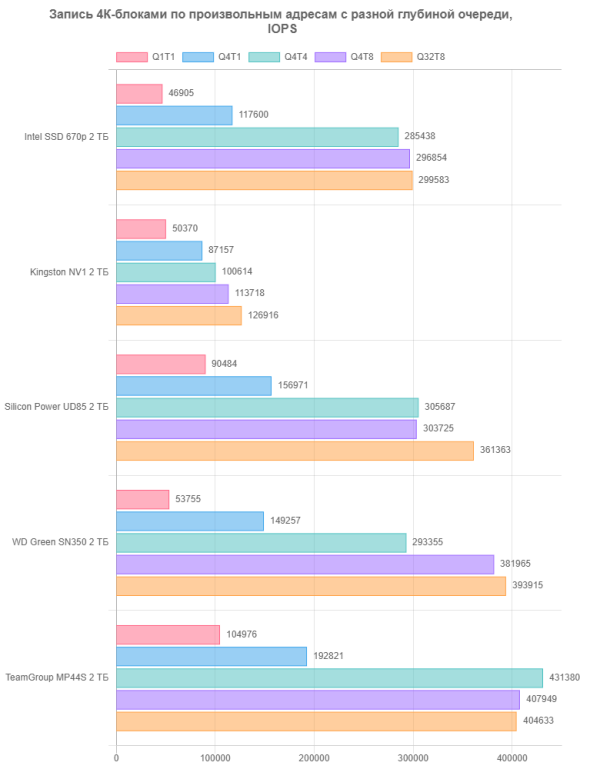

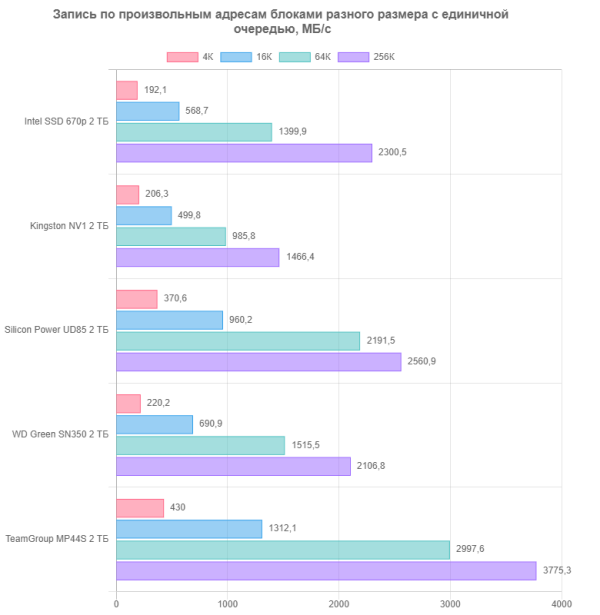

The same applies to writes. In single-bit (SLC) mode, even budget controllers reach throughput in megabytes per second that some interfaces of the recent past could only envy. Such speeds may seem excessive, but rising controller performance is an objective process that some scenarios genuinely need, and results like these are simply a side effect of it.

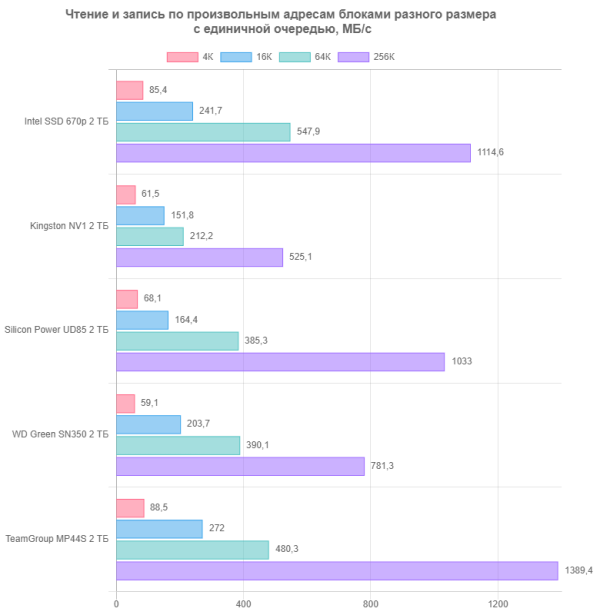

Mixed mode matters as well, because in reality a drive rarely performs only writes or only reads for long periods, especially under multitasking, given the complex inner workings of modern operating systems. There is nothing new here, though: all the results are predictable and fit the patterns discussed above. Performance figures keep rising and will continue to do so, as naturally happens when important problems are being solved. If software does not need that much performance, that is the software's own business.

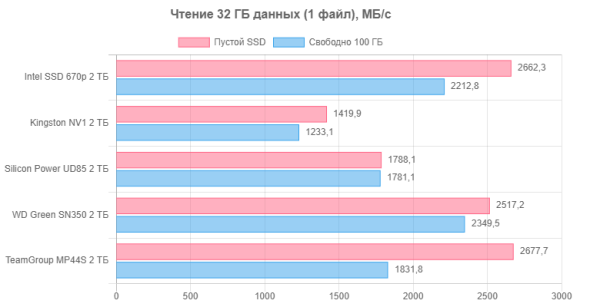

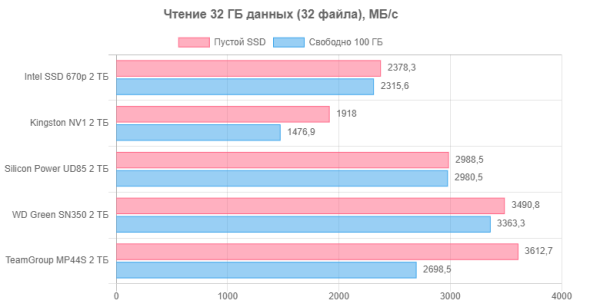

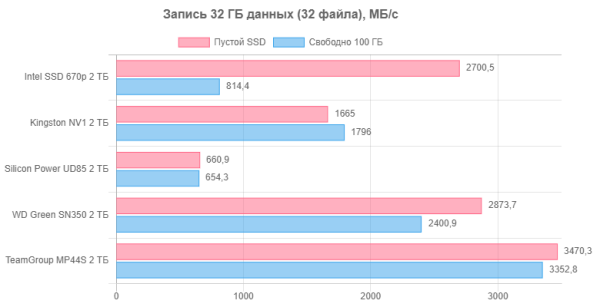

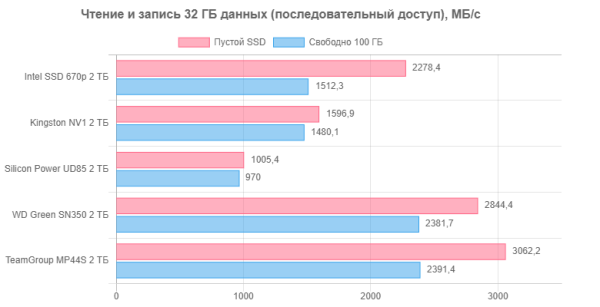

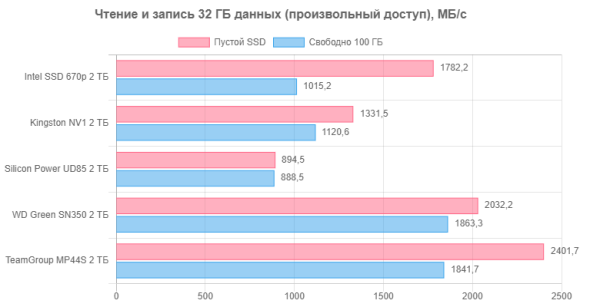

Working with large files

Despite the impressive results in low-level tests, such speeds are not always achievable in practice, for several reasons. First, real workloads are more complex. CrystalDiskMark, for example, works with a limited amount of data inside a single test file, which often guarantees that the data stays in the SLC cache for the whole test. Second, writing real files involves file system overhead, such as updating the MFT (Master File Table) and the file system journal, which makes for a more realistic scenario.

To get results closer to practice, we use the Intel NAS Performance Toolkit. It lets us test not only within the cache but also under conditions closer to real ones, for example when the drive has almost no free space left.

Working in a single thread is the most common scenario, but also the most difficult one. Modern controllers handle it much better than their predecessors, even in the budget segment. When evaluating performance, it is important to look not only at high peak results but also at how well the Phison E21T copes with reading data from the QLC array itself rather than from the SLC cache.

Sometimes one wishes consumer SSDs would again stand on their own and stop hiding their weaknesses behind aggressive caching. But looking at the Silicon Power UD85, which consistently lags behind the rest despite its TLC memory, the idea of seeing reality without embellishment somehow loses its appeal. SLC caching rests on the premise that long write loads are rare in everyday use, while short bursts are absorbed effectively (and quickly!) by the cache. Caching also makes sense for temporary files, which are usually written and read only once before being deleted. Clearly, though, the further a workload moves from this ideal, the more serious the problems become.

How many programs write to the SSD at the same time is not the main factor for such drives. Hard drives slow down sharply at any deviation from strictly sequential single-threaded operation, but SSDs internally parallelize even sequential loads, so their behavior stays the same in these scenarios. What matters is the amount of data, not the number of worker threads.

Nor does the direction of the transfers play an important role. Reading does not interfere with writing (especially since PCIe is bidirectional) and even raises the combined speed, since reads complete faster.

This is one more illustration of why the scheme with an SLC cache spanning all free cells, flushed as soon as the workload pauses, has become the de facto standard. Top-end SSDs can afford to deviate from the first part of this rule, but in the budget segment doing so often creates more problems than it solves. So the main thing is that the cache has room for any single “session” of work, and that these “sessions” do not come more often than the controller can clean up after them. It is also advisable not to fill the drive to capacity, which is easier when the total capacity is high. And high total capacity is cheaper with a budget controller and QLC than with any other combination. That's all.
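
The rule of thumb above (each write “session” must fit into the free cache, and sessions must not arrive faster than the controller can flush) can be expressed as a small simulation. It is a deliberately crude sketch: bursts land in the cache instantly, space is freed at a constant rate only during pauses, and the cache does not shrink as the drive fills. The 100 GB cache is hypothetical, the 90 MB/s flush rate simply borrows the post-cache figure measured for the MP44S, and the function name is ours.

```python
from typing import Sequence, Tuple


def sessions_fit_in_cache(
    sessions: Sequence[Tuple[float, float]], cache_gb: float, flush_mbs: float
) -> bool:
    """Return True if every write burst finds enough free SLC cache.

    sessions: (burst_gb, idle_seconds_after_burst) pairs, in order.
    Hypothetical model: bursts land in the cache instantly, and the controller
    frees cache space at a constant flush_mbs during the idle time that follows.
    """
    occupied_gb = 0.0
    for burst_gb, idle_s in sessions:
        if occupied_gb + burst_gb > cache_gb:
            return False  # this burst would spill into slow direct-to-QLC writes
        occupied_gb += burst_gb
        # Space released during the pause (1 GB = 1000 MB).
        occupied_gb = max(0.0, occupied_gb - flush_mbs * idle_s / 1000)
    return True


CACHE_GB = 100   # hypothetical free SLC cache
FLUSH_MBS = 90   # borrowed from the MP44S post-cache figure

spaced_out = [(60, 600), (60, 600)]  # two 60 GB sessions, ten minutes apart
back_to_back = [(60, 60), (60, 60)]  # the same sessions, one minute apart

print(sessions_fit_in_cache(spaced_out, CACHE_GB, FLUSH_MBS))
print(sessions_fit_in_cache(back_to_back, CACHE_GB, FLUSH_MBS))
```

With ten-minute pauses the controller frees 54 GB between sessions and both fit; at one-minute intervals only 5.4 GB is freed, so the second session spills into slow writes.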

Comprehensive performance

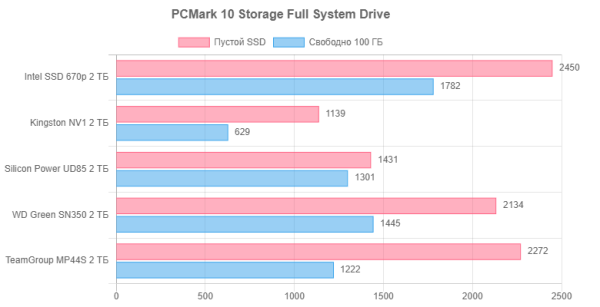

Currently, the most comprehensive benchmark for assessing storage performance is PCMark 10 Storage. A brief description of this tool is available in our review, where we stressed that its three tests are not equally useful. The most informative is the “full” Full System Drive test, which covers almost all usage scenarios, from booting the operating system to copying data (both internal and “external”). The other two tests are only subsets of Full System Drive and, in our opinion, less “interesting”. The test is useful not only for measuring real-world throughput in practical applications but also for estimating the latencies involved. Averaging these metrics across scenarios and reducing them to a single number is somewhat synthetic, but at the moment there is no overall estimate closer to reality than this, as opposed to results for individual cases. So it is worth getting familiar with PCMark 10 Storage.

The main drawback of this test for the “standard” caching approach described above is that it runs at a higher intensity than is typical in everyday life. This is unavoidable, since otherwise testing would take not one hour but at least a day. It does, however, hit budget models hard, pushing their results below what they could achieve. On the other hand, it gives a realistic view of performance, showing not only potential peaks but also what the device can actually sustain. Notably, the drop is visible even when testing an empty SSD, where the SLC cache reserve is large enough to run the whole test without flushing the cache along the way. The results show a two- to threefold decrease in performance for models that rely solely on SLC caching, and a smaller drop for devices using other techniques. Also interesting is how performance within the same class has evolved over two to three years: it has roughly doubled. Compared with the Kingston NV1, for example, which many bought for the affordable price of its 2 TB version, the new platforms are noticeably faster, although such drives are still not the fastest in their class. That makes them more attractive, especially for those looking for compact budget options.

Conclusions

The conclusions fall into two parts, since in this test we not only evaluated a specific device but also got acquainted for the first time with the Phison E21T platform paired with Micron N48R 176-layer QLC memory. This platform is widely available in a variety of form factors, including the Crucial P3 and P3 Plus, and sits at the very entry level of today's lineup. Naturally, high performance is out of the question here. Still, comparisons matter, and by that measure SATA drives built on similar components turn out to be many times slower. Even with the limited capabilities of this platform, performance in this segment has doubled in two to three years.

SSDs of this class do not pretend to be powerful all-round drives capable of satisfying the most demanding users. Instead, they find their niche through an affordable price and tangible benefits. Importantly, this platform makes it possible to build compact, high-capacity drives, as the many similar models on the market show. M.2 2230 is a niche of its own, especially in gaming consoles, where the drawbacks of such drives are less noticeable and their advantages, price above all, stand out more. Given the size constraints and needs of portable devices, such SSDs have found their place.