The Hiper brand was introduced to the local market in 2010 with a diverse range of IT equipment, including laptops, compact PCs, all-in-one PCs and peripherals. The company recently expanded its range to include servers under the same brand. At the time of writing, the official website listed fifteen rack-mounted server models and one floor-standing model. The company offers both ready-made servers configured to customer requirements and the option of building products on the Hiper platform through distributors and partners.

In this article we look at the Hiper Server R3 — Advanced line. The name covers three 2U models that differ only in their storage configuration. We received the R3-T223225-13 model for testing, which accommodates 25+2 2.5" drives.

Package contents

The server arrived in a large, sturdy cardboard box measuring about 70x30x110 cm, with thick foam inserts to protect the device reliably. The kit also included power cables, drive mounting screws and rack mounting brackets. There was no documentation, which is typical for test samples.

Rack installation was easy thanks to snap-in mounts and locks. The rails have bearing-equipped slides for smooth travel, and the server can be pulled out completely for maintenance. Additional latches hold it securely in place.

Technical specifications, basic drivers for Windows and brief documentation in Russian are available on the manufacturer's website. The manufacturer does not restrict the use of standard off-the-shelf components.

The standard warranty is three years, and service options such as warranty extensions and fast component replacement are available.

Appearance and design

The server has standard 2U dimensions for installation in a 19-inch rack. Its depth of 82 cm calls for a cabinet or rack at least 90-100 cm deep.

Rack-mount equipment has little reason to stand out visually. Reliability, ease of maintenance and other practical criteria matter far more here.

The front panel holds 25 bays for 2.5-inch drives. This layout is the most popular choice among users who need dense, fast local storage in a compact form factor.

Protruding “ears” on the left and right hold the front buttons, LEDs and ports. The left ear has a VGA port and two USB 3.0 ports. The right ear has power and ID buttons (the ID button is also used to restart the BMC), four LED indicators (general status, memory status, fan status, and OCP network interface activity and status) and a Mini-USB port labeled “LCD” for connecting a monitoring system (the manufacturer did not provide information about it). The holes for the screws securing the server to the rack are hidden behind spring-loaded covers.

At the bottom right, below the drives, there is a pull-out label for server information, which the buyer will have to fill in themselves.

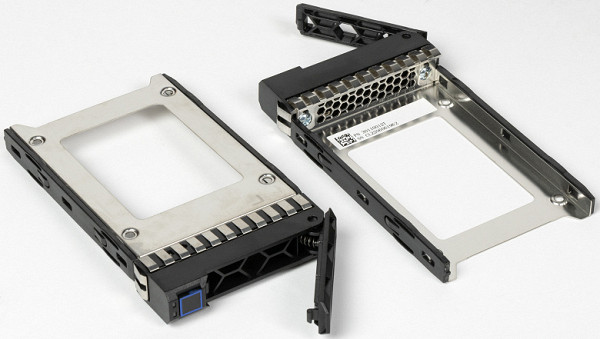

Drives are installed in the main bays using metal-and-plastic trays, each drive secured with four screws. Each tray has two LED indicators for drive status. Note that there is no extra protection against accidental opening: releasing a tray only requires pressing the button at the bottom of the slot.

The rear panel has horizontal slots for expansion cards: six full-height and two half-height. Their use is described below.

At the bottom right is the OCP slot, occupied in our case by a network card with two SFP28 ports supporting 10/25 Gbit/s. The rear panel also has an IPMI network port, a VGA output, a serial port, two USB 3.0 ports, an ID indicator button and a recessed BMC/NMI reset button.

At the top left are two additional bays for 2.5″ SATA drives. Below them are two redundant, hot-swappable power supplies.

The top cover is secured by a lock with an additional latch. The inside of the cover carries stickers with a brief description of the system components and step-by-step instructions for maintenance operations such as replacing fans; however, all of this information is in Chinese only. The chassis also has an intrusion (tamper) sensor. To reach the ports on the front backplane, a second, separate section of the top cover can be removed.

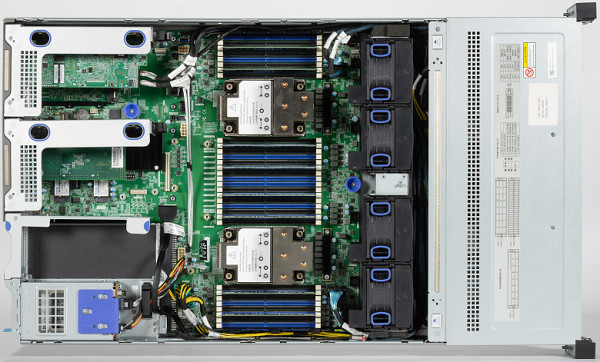

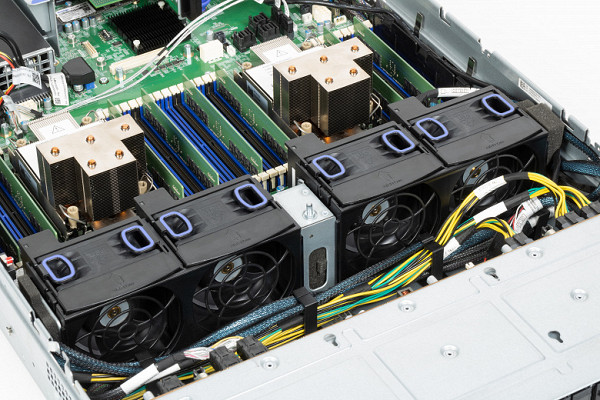

The internal layout is conventional: behind the drive bays is a cooling unit of four fans, followed by the motherboard with the expansion cards and the power supplies.

Of the 25 front bays, the last eight support SATA/SAS/NVMe, while the rest support only SATA/SAS. The U.2/NVMe bays are wired with dedicated cables for maximum performance, which requires 8 × 4 = 32 PCIe lanes from the processors or controllers. The remaining bays work through a multiplexer and connect to the controller via three standard Mini SAS HD (SFF-8643) ports.
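The lane counts above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes each U.2 bay gets a PCIe x4 link and each SFF-8643 connector carries four SAS/SATA lanes; the constant and function names are ours, not the manufacturer's.

```python
# Lane budget for the 25-bay front backplane.
# From the text: 25 bays, the last 8 accept NVMe, 3 Mini SAS HD ports.
# Assumptions: x4 PCIe link per U.2 drive, 4 lanes per SFF-8643 connector.

TOTAL_BAYS = 25
NVME_BAYS = 8
PCIE_LANES_PER_U2 = 4      # assumption
MINI_SAS_HD_PORTS = 3
SAS_LANES_PER_PORT = 4     # assumption


def nvme_lanes_needed(bays: int = NVME_BAYS,
                      lanes_per_drive: int = PCIE_LANES_PER_U2) -> int:
    """PCIe lanes required to give every NVMe bay its own link."""
    return bays * lanes_per_drive


def sas_lanes_available(ports: int = MINI_SAS_HD_PORTS,
                        lanes_per_port: int = SAS_LANES_PER_PORT) -> int:
    """SAS/SATA lanes delivered to the backplane by the controller cables."""
    return ports * lanes_per_port


if __name__ == "__main__":
    print("PCIe lanes for U.2 bays:", nvme_lanes_needed())          # 32
    lanes = sas_lanes_available()
    print("SAS/SATA lanes from the controller:", lanes)             # 12
    # Fewer lanes than bays: drives have to share lanes on the backplane.
    print("Lanes per bay:", round(lanes / TOTAL_BAYS, 2))           # 0.48
```

With 12 controller lanes for 25 bays, the drives cannot each have a dedicated lane, which fits the multiplexed wiring described above.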

The cooling system is very well thought out: four 80x38 mm fans move air through the entire chassis, and all large gaps are blocked so that airflow is not wasted. The fans, model DYTB0838B2GP311 from the well-known AVC brand, connect to the motherboard via a 4-wire interface and are rated at up to 4.5 A.

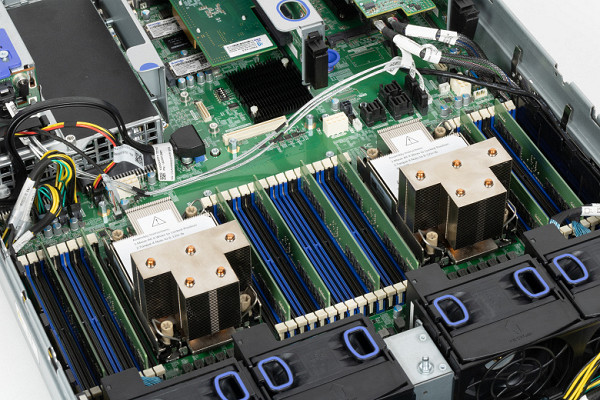

The processors are fitted with heatsinks only, without fans of their own. Although the heatsinks are relatively small, dedicated air ducts keep temperatures in the optimal range.

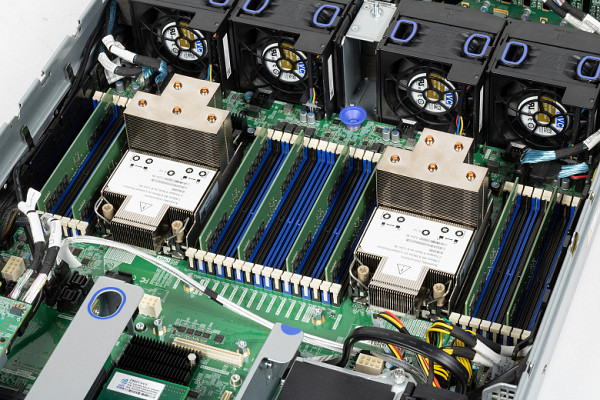

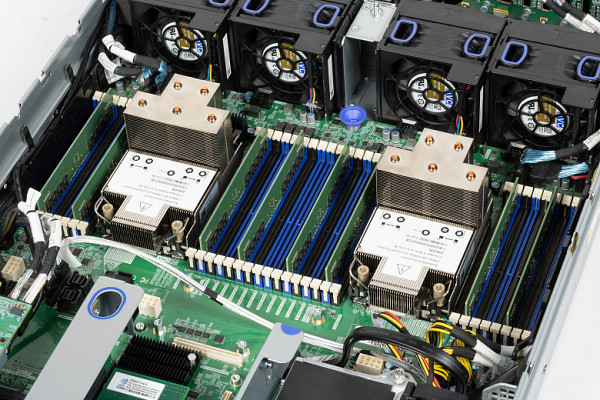

Three compartments are provided for expansion cards: the first two hold three full-height cards each, and the third holds two half-height cards. The chassis accepts video cards up to 30 cm long.

Overall, the design impresses with its quality of workmanship, thoughtfulness and wide configuration options.

Configuration

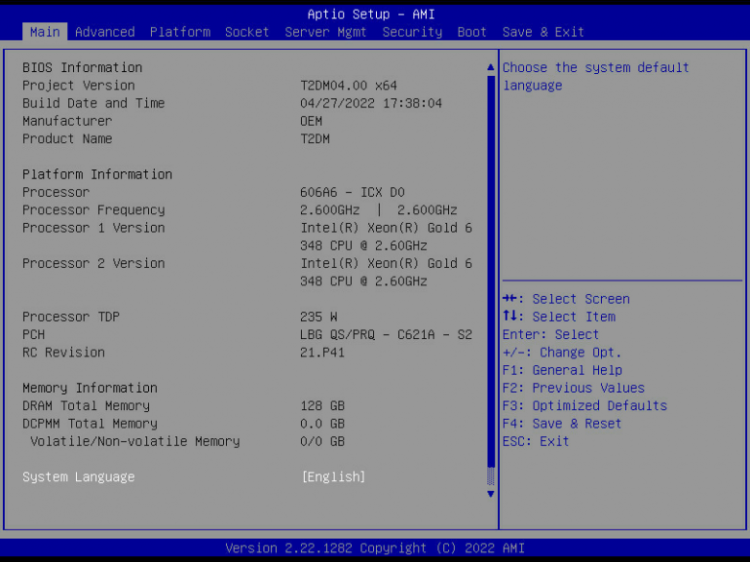

According to IPMI, the server is built on the TTY TU628V2 platform with a T2DM motherboard. The BIOS and BMC versions date back to 2022, and no updates could be found online. The motherboard and chassis were developed together, so we treat them as a single platform and describe their specifications together.

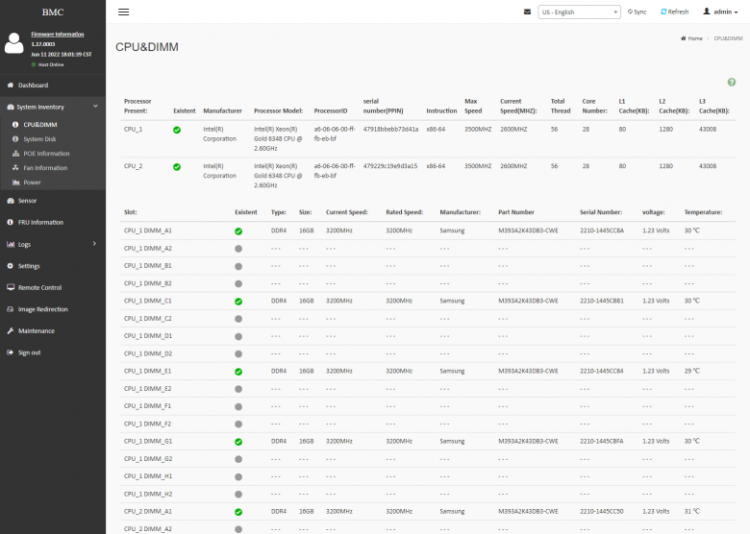

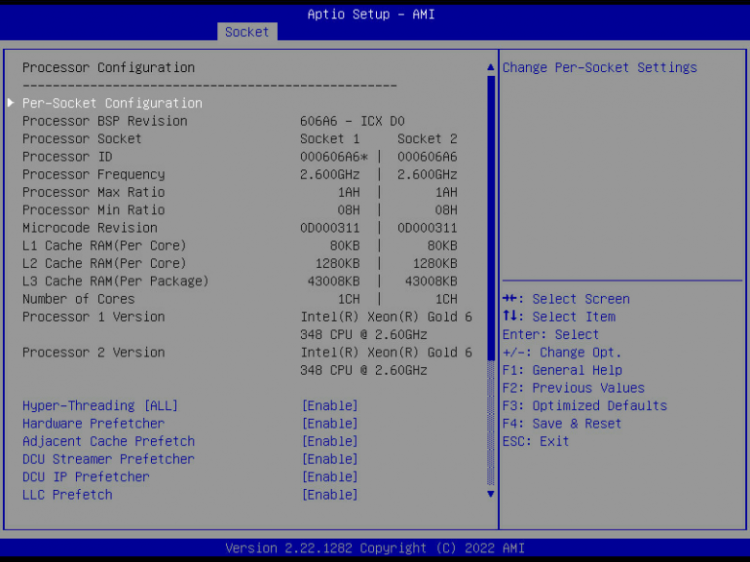

The server has two FCLGA4189 processor sockets and supports third-generation Intel Xeon Scalable processors with a TDP of up to 270 W. Our unit had two Intel Xeon Gold 6348 processors: 28 cores, 56 threads, 2.6 GHz base frequency, 3.5 GHz maximum Turbo frequency, 235 W TDP.

The motherboard has 32 DDR4-3200 slots with ECC support, allowing up to 8 TB of RAM. The test configuration had eight 16 GB Samsung M393A2K43DB3-CWE modules, 128 GB in total.
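The stated maximum also tells us the module size needed to reach it. A minimal sketch of the arithmetic, assuming 8 TB means 8 × 1024 GB and that the 32 slots are split evenly between the two sockets:

```python
# Memory capacity arithmetic for the platform described above.
# From the text: 32 slots, up to 8 TB, two sockets, eight 16 GB modules tested.
# Assumptions: 8 TB = 8 * 1024 GB; slots split evenly across both sockets.

SLOTS = 32
SOCKETS = 2
MAX_TOTAL_GB = 8 * 1024


def module_size_for_max(slots: int = SLOTS, max_total_gb: int = MAX_TOTAL_GB) -> int:
    """Module size (GB) needed in every slot to reach the stated maximum."""
    return max_total_gb // slots


def installed_gb(modules: int, module_gb: int) -> int:
    """Total capacity of a set of identical modules."""
    return modules * module_gb


if __name__ == "__main__":
    print("Slots per socket:", SLOTS // SOCKETS)                 # 16
    print("Module size for 8 TB:", module_size_for_max(), "GB")  # 256 GB
    print("Test configuration:", installed_gb(8, 16), "GB")      # 128 GB
    print(f"Slots populated: {8 / SLOTS:.0%}")                    # 25%
```

In other words, the 8 TB ceiling corresponds to 256 GB modules in all 32 slots, while the test unit used a quarter of the slots with 16 GB modules.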

The base platform has no network controllers other than the BMC port. In our case, network connectivity was provided by a Mellanox Technologies ConnectX-4 Lx (PCIe 3.0 x8) controller with two SFP28 ports installed in the OCP 3.0 slot. With suitable transceivers, these ports support 1/10/25 Gbit/s.

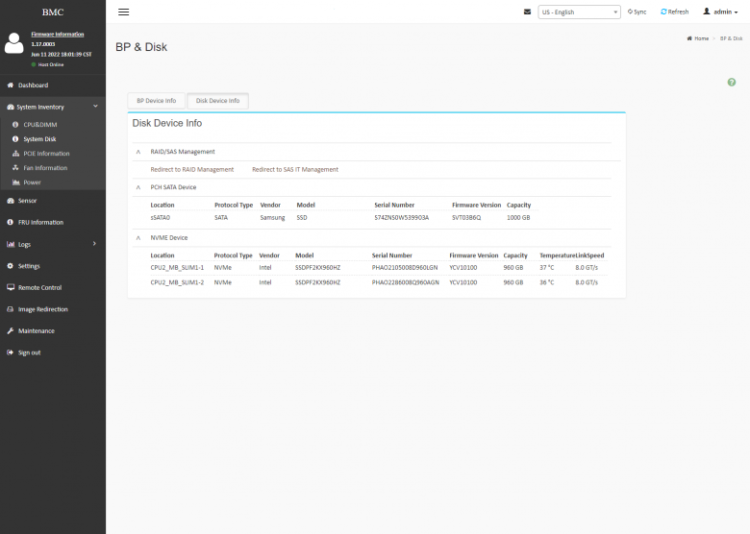

The motherboard has two M.2 2280 slots, fitted with 512 GB Kimtigo HNSW22B044Z NVMe drives. These slots run in PCIe 3.0 x2 mode and are connected to the chipset, which may somewhat limit performance. For system drives, however, this limitation hardly matters.

The front bays held two 960 GB Intel D5-P5530 (SSDPF2KX960HZ) U.2 drives. They are connected to the first processor via PCIe 4.0 x4, which provides the maximum data transfer rate.
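The difference between the M.2, U.2 and OCP network links described above is easiest to see as theoretical bandwidth. A minimal sketch, assuming the standard per-lane rates of 8 GT/s (PCIe 3.0) and 16 GT/s (PCIe 4.0) with 128b/130b encoding and ignoring packet overhead, so real throughput will be lower:

```python
# Theoretical one-direction PCIe link bandwidth after line encoding.
# Packet/protocol overhead is ignored, so these are upper bounds.

GT_PER_S = {3: 8.0, 4: 16.0}     # per-lane transfer rate by PCIe generation
ENCODING = 128 / 130              # 128b/130b encoding (PCIe 3.0 and 4.0)


def link_gbit_s(gen: int, lanes: int) -> float:
    """Upper-bound bandwidth of a link in Gbit/s."""
    return GT_PER_S[gen] * ENCODING * lanes


def link_gbyte_s(gen: int, lanes: int) -> float:
    """Same figure in GB/s (8 bits per byte)."""
    return link_gbit_s(gen, lanes) / 8


if __name__ == "__main__":
    links = {
        "M.2 slot, PCIe 3.0 x2": (3, 2),
        "U.2 bay, PCIe 4.0 x4": (4, 4),
        "OCP NIC, PCIe 3.0 x8": (3, 8),
    }
    for name, (gen, lanes) in links.items():
        print(f"{name}: {link_gbyte_s(gen, lanes):.2f} GB/s")
    # Two SFP28 ports at 25 Gbit/s each versus the NIC's host link.
    print("2 x 25 Gbit/s fits:", 2 * 25 <= link_gbit_s(3, 8))
```

On these assumptions an M.2 slot tops out near 2 GB/s, about a quarter of a U.2 bay's link, while the network card's PCIe 3.0 x8 link (about 63 Gbit/s) leaves headroom for both 25 Gbit/s ports.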

For testing, a SATA SSD was installed in one of the rear bays to hold the operating system. The internal USB 3.0 port can also be used for the system drive instead.

The server is powered by two Great Wall GW-CRPS1300D units rated at 1300 W each, as their name indicates. Units with other ratings, up to 2000 W, can also be ordered for this server configuration.

The test server also had two controllers that were not used during testing: a GRT F902T-V4.0 network controller with two Gigabit copper ports based on the Intel i350 chip, and a Broadcom/LSI MegaRAID 9361-8i RAID controller with a cache protection module, intended for SAS/SATA drives in the front bays.

The rear panel has three "I/O blocks" designed for different configurations. Ours was configured mainly for expansion cards, but these blocks can optionally be replaced with drive modules. The first and second blocks take full-height expansion cards.

Each of these two blocks has one x16 slot and two x8 slots and is connected to its own processor. Thanks to the chassis design, even professional video cards can be installed. However, this requires buying dedicated power cables separately, since the manufacturer oddly does not offer them even as an option.

In our case the third block was unused, but a riser can be purchased for it to add two more half-height expansion cards. We could not find out, however, how flexibly and quickly the configuration of an ordered server can be chosen with the supplier.

Installation and configuration

Installing the server in the rack went smoothly; the only thing to keep in mind is the relatively deep chassis. After that, the network interfaces and power are connected and configuration can begin, typically using the built-in IPMI tools.

The IPMI web interface is available in English and Chinese. The motherboard manufacturer used a ready-made solution from American Megatrends Inc. (AMI), which we have already seen in other products.

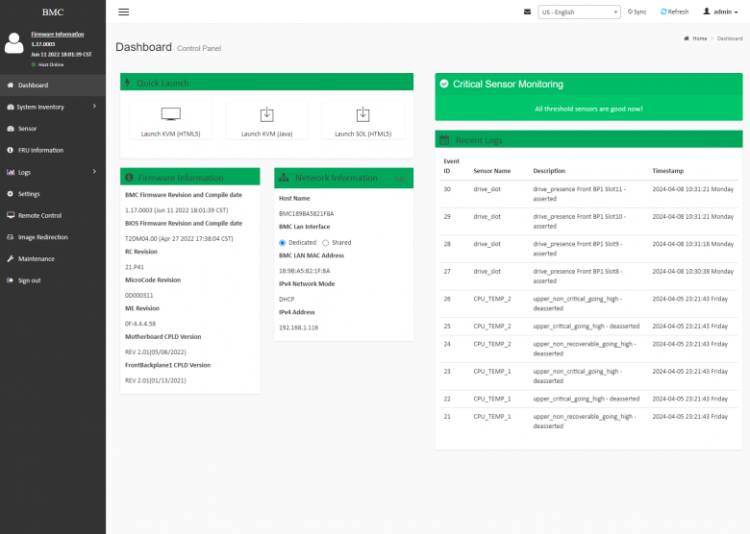

The main Dashboard page shows server information, including firmware versions, MAC and IP addresses, overall sensor status and the event log. Quick links to the console are also available.

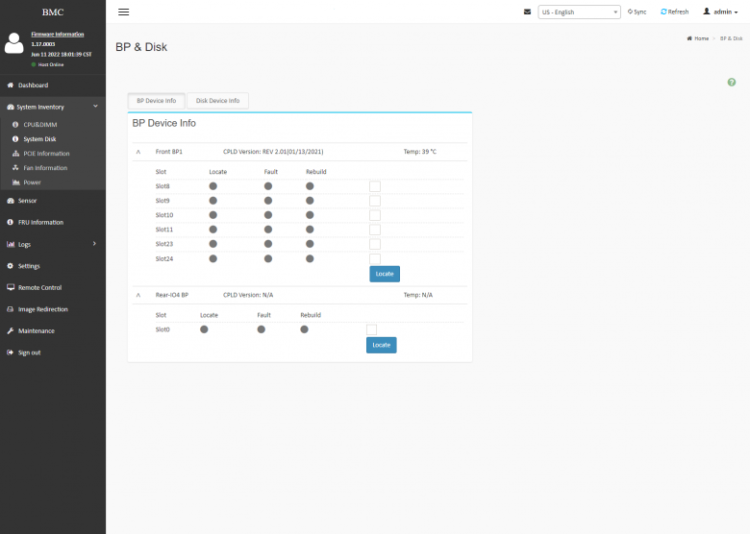

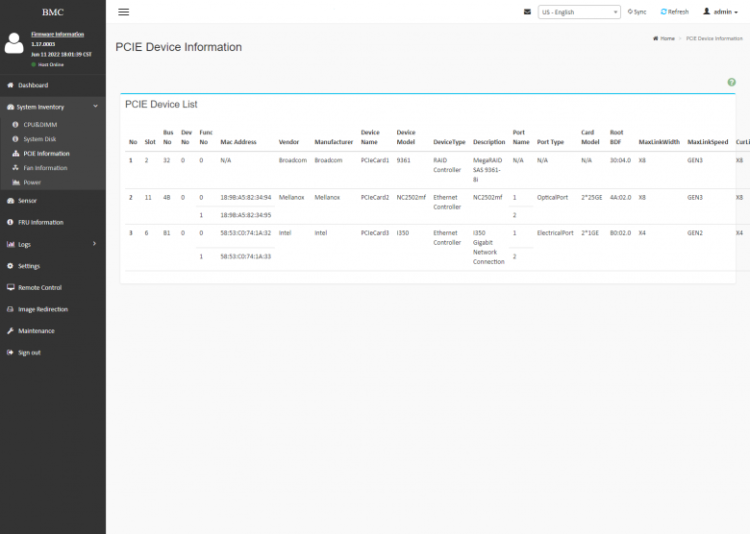

The Inventory section shows details about processors, RAM modules, drives, expansion cards, fans and power supplies. When U.2 drives are connected directly to the processor, information about them and the backplane is also displayed. Extensive data is available for expansion cards as well, including connection bus parameters, manufacturer and, for network cards, MAC addresses and port type. On a Full HD monitor you may need to scroll horizontally to see everything. The FRU Information section lists details about the platform.

There is also the usual Sensors section, showing presence status, temperatures, fan speeds, voltages, currents and so on. For monitoring server operation, event logs record system events and accesses to IPMI.

Among the BMC settings, the most frequently used are likely to be user account management, network and service settings, date and time, notifications and virtual media management. Access to RAID/HBA controllers, including add-in ones, for configuring the disk subsystem may also be useful.

The Remote Control section provides power management, ID indication and remote access to the server's desktop or console. KVM is available via HTML5 in the browser or via a Java client. Both offer a wide range of capabilities, including keyboard and mouse control, screen recording and mounting disk images.

The Maintenance section provides BMC functions such as firmware update, configuration management, BIOS reset and POST code viewing. It can also export the server's hardware configuration as a single file. However, there is no option to update the BIOS.

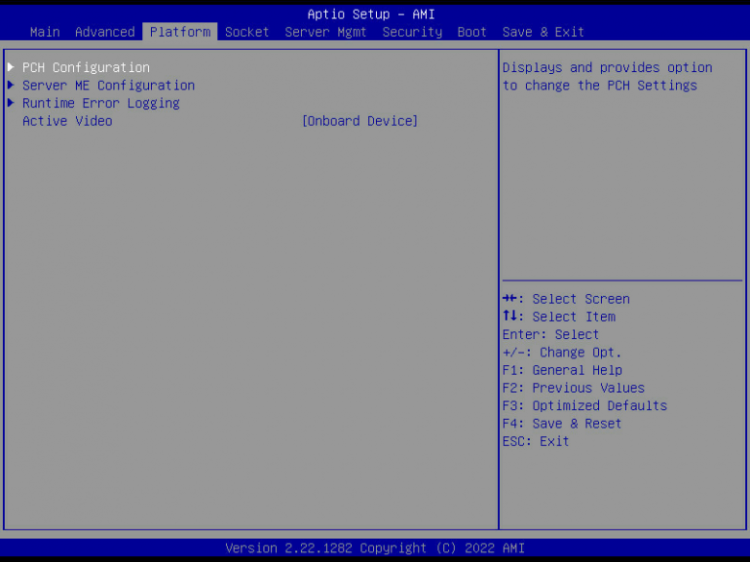

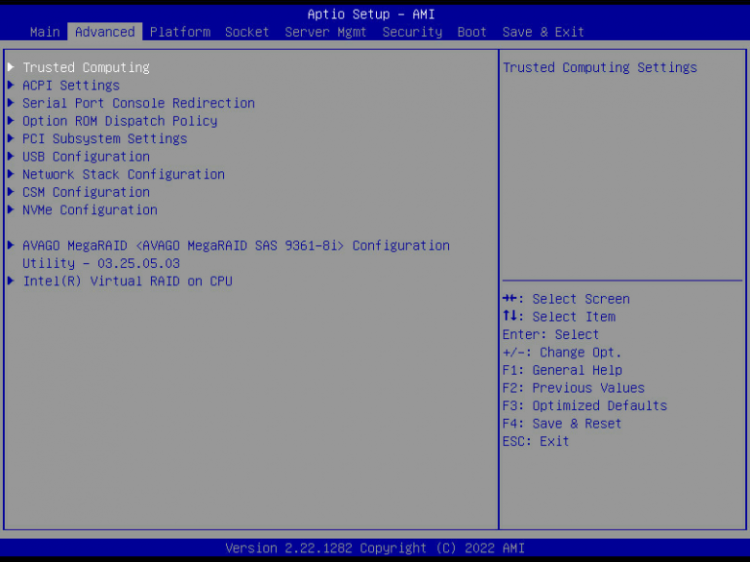

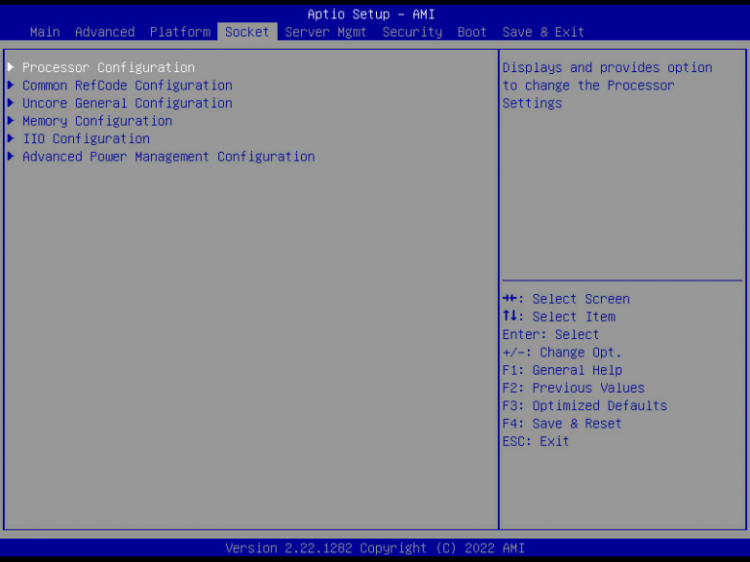

As expected, the motherboard BIOS is based on AMI, a standard and familiar solution. Most users will only need to configure the BMC and the boot device, since the remaining settings are already tuned for server use. The BIOS provides access to disk controller settings, including the built-in Intel VROC and the add-in Broadcom/LSI controller, which greatly simplifies setting up disk arrays. Modern features such as virtualization, SR-IOV, UEFI and network boot are supported as well. Overall, the firmware functionality meets industry standards.

Testing

The server was installed in the rack and powered from the mains through a UPS. The cooling system proved highly effective, keeping temperatures within comfortable limits even at full load. Monitoring was done with Zabbix, which collected data both through an agent in the operating system and via IPMI.
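Zabbix can poll the BMC directly through its own IPMI item type, so no script is needed for the setup described above. For readers who want to look at the same sensor data by hand, here is a minimal sketch that calls the standard ipmitool CLI over IPMI-over-LAN. The host, credentials and sample sensor names are hypothetical, and the parser assumes ipmitool's usual `name | id | status | entity | reading` layout, which is worth verifying on your own BMC.

```python
# Read BMC temperature sensors with ipmitool and flag values above a limit.
# Host, user, password and sensor names are placeholders (hypothetical).
import subprocess


def read_temperature_sdr(host: str, user: str, password: str) -> str:
    """Return raw 'ipmitool sdr type Temperature' output from a remote BMC."""
    cmd = ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password,
           "sdr", "type", "Temperature"]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def parse_temperatures(sdr_output: str) -> dict[str, float]:
    """Parse 'name | id | status | entity | reading' lines into {name: deg C}."""
    temps = {}
    for line in sdr_output.splitlines():
        fields = [f.strip() for f in line.split("|")]
        if len(fields) != 5 or "degrees C" not in fields[4]:
            continue  # skip non-temperature or "No Reading" lines
        temps[fields[0]] = float(fields[4].split()[0])
    return temps


def over_limit(temps: dict[str, float], limit_c: float) -> list[str]:
    """Names of sensors whose reading exceeds limit_c."""
    return [name for name, value in temps.items() if value > limit_c]


if __name__ == "__main__":
    # Hypothetical sample in ipmitool's layout; not measured data.
    sample = (
        "CPU0_Temp  | 30h | ok | 3.1  | 50 degrees C\n"
        "CPU1_Temp  | 31h | ok | 3.2  | 45 degrees C\n"
        "Inlet_Temp | 40h | ok | 64.1 | 25 degrees C\n"
    )
    temps = parse_temperatures(sample)
    print(temps)
    print("Above 80 C:", over_limit(temps, 80.0))
```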

The system's maximum power consumption under load was about 750 W, with processor temperatures not exceeding 80°C. Case fan speed stayed at or below 6000 rpm. The U.2 drives reached 39°C under load, while the other system components stayed at or below 47°C.
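These figures can be related to the power supplies described earlier. A quick check, assuming 1+1 redundancy in which one 1300 W unit must carry the full load if the other fails, and treating the measured 750 W as the load on the supplies (if it was measured on the input side, the real DC load is somewhat lower, which only makes the check more conservative):

```python
# Power supply headroom for the measured peak load.
# From the text: two 1300 W supplies, about 750 W peak consumption.
# Assumption: 1+1 redundancy with even load sharing between the units.

PSU_RATED_W = 1300
PSU_COUNT = 2
PEAK_LOAD_W = 750


def survives_psu_failure(load_w: float, psu_w: float = PSU_RATED_W) -> bool:
    """With 1+1 redundancy, one supply alone must carry the whole load."""
    return load_w <= psu_w


def load_fraction(load_w: float, psus_sharing: int,
                  psu_w: float = PSU_RATED_W) -> float:
    """Load per supply as a fraction of its rating, assuming even sharing."""
    return load_w / (psus_sharing * psu_w)


if __name__ == "__main__":
    print("Survives one PSU failure:", survives_psu_failure(PEAK_LOAD_W))
    print(f"Per-PSU load, both running: {load_fraction(PEAK_LOAD_W, PSU_COUNT):.0%}")
    print(f"Load on a single PSU: {load_fraction(PEAK_LOAD_W, 1):.0%}")
    print("Headroom on one PSU:", PSU_RATED_W - PEAK_LOAD_W, "W")
```

On these assumptions a single supply would run below 60% of its rating, leaving roughly 550 W for expansion, though high-power video cards could use up that margin quickly.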

The system ran very stably, which points to a high-quality design and an effective cooling system. Keep in mind, though, that testing was done in a basic configuration; adding equipment such as video cards or more drives may change the picture. Even so, we do not expect major cooling problems as the configuration grows.

Testing allowed us to evaluate the server's performance under real conditions and to identify room for optimization and further development. The combination of powerful hardware and flexible software configuration makes this server an attractive option for a wide range of customer workloads.

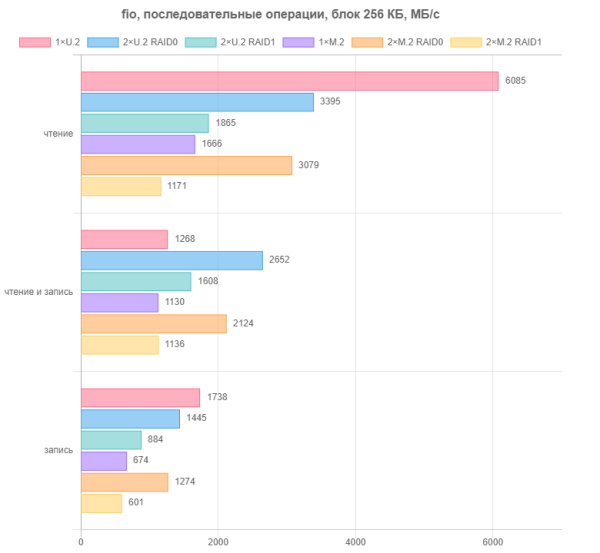

In sequential operations, the standalone U.2 drive exceeded its stated specifications, delivering read speeds above 6,000 MB/s and write speeds above 1,700 MB/s. In RAID arrays, however, it did not live up to expectations. Although the striped volume improved performance in mixed mode, speeds in the pure read and pure write modes dropped significantly. The mirrored array showed no performance gain at all. It may be worth testing Intel VROC to see whether it avoids these losses.

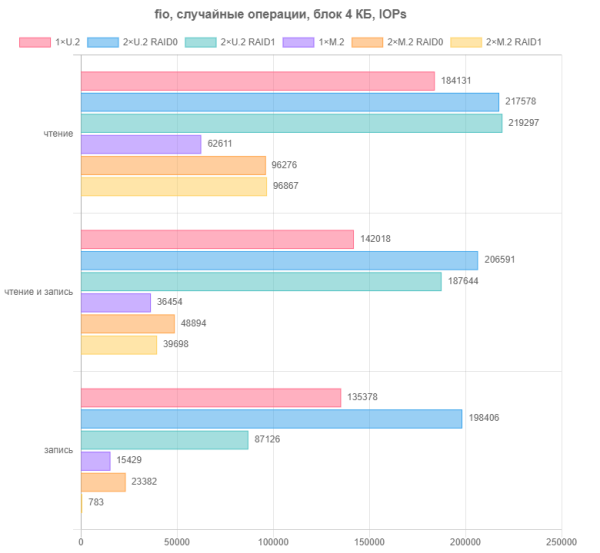

The situation with M.2 drives is quite different: RAID0 nearly doubles performance, while RAID1 causes only minor losses.

In random operations, both drive types produced interesting results: the striped array improved performance in all three patterns. The mirrored array, in contrast, was faster in read and mixed modes but noticeably slower in writes, especially with M.2 drives.
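For reference, the "expectations" discussed here usually come from an idealized model of two-drive arrays. The sketch below encodes that textbook model in relative units (1.0 = one drive); it is not derived from the measurements, and real md arrays deviate from it because of chunk size, queue depth, CPU overhead and how reads are distributed between mirror members.

```python
# Idealized best-case throughput of RAID0/RAID1 relative to a single drive.

def ideal_array_throughput(single: float, level: int,
                           drives: int = 2) -> dict[str, float]:
    """Best-case read/write throughput of an n-drive array.

    RAID0 spreads both reads and writes across all members.
    RAID1 must write every block to all members, but parallel reads
    can be served by different members.
    """
    if drives < 2:
        raise ValueError("an array needs at least two drives")
    if level == 0:
        return {"read": single * drives, "write": single * drives}
    if level == 1:
        return {"read": single * drives, "write": single}
    raise ValueError("only RAID0 and RAID1 are modelled here")


if __name__ == "__main__":
    for level in (0, 1):
        print(f"RAID{level}:", ideal_array_throughput(1.0, level))
```

Against this model, the M.2 RAID0 sequential results come close to the ideal, while the U.2 striped array in sequential modes and the mirrored write results fall short of it.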

The results for the various block device configurations proved quite interesting and did not always match expectations, which suggests the topic deserves further investigation.

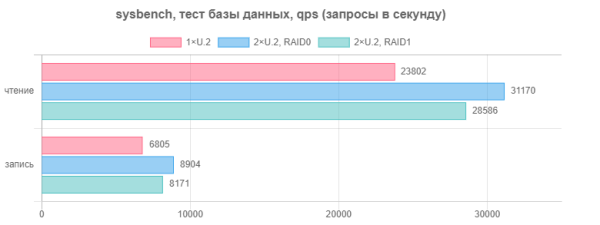

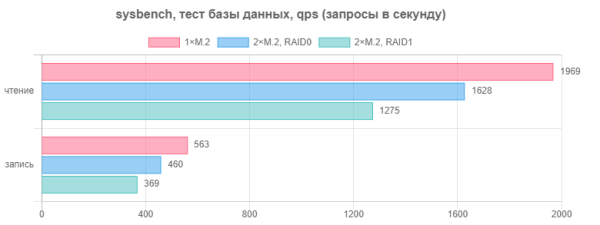

While block device speed gives some indication of performance, behavior in real-world applications is of greatest interest. To assess it, we ran a database test with the sysbench utility under the following conditions: 100 databases of 10 million records each (about 256 GB in total), the oltp_read_write script from the distribution, 32 threads and a test duration of one hour.
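For anyone wanting to run something similar, the parameters above map onto sysbench command lines roughly as follows. This is a sketch, not the authors' exact invocation: it assumes sysbench 1.x with its MySQL driver (which also works with MariaDB), reads "100 databases of 10 million records" as sysbench's `--tables=100 --table-size=10000000`, and uses placeholder schema name and credentials.

```python
# Build sysbench oltp_read_write command lines for the test described above.
# Schema name, user and password are placeholders (hypothetical).
import shlex

TABLES = 100
ROWS_PER_TABLE = 10_000_000
TOTAL_ROWS = TABLES * ROWS_PER_TABLE


def sysbench_cmd(action: str, db: str = "sbtest", user: str = "sbtest",
                 password: str = "change-me") -> list[str]:
    """Return the argv for one sysbench step: prepare, run or cleanup."""
    if action not in ("prepare", "run", "cleanup"):
        raise ValueError(f"unexpected action: {action}")
    return [
        "sysbench", "oltp_read_write",
        "--db-driver=mysql",
        f"--mysql-db={db}",
        f"--mysql-user={user}",
        f"--mysql-password={password}",
        f"--tables={TABLES}",
        f"--table-size={ROWS_PER_TABLE}",
        "--threads=32",
        "--time=3600",          # one-hour run
        action,
    ]


if __name__ == "__main__":
    print("Total rows:", TOTAL_ROWS)
    for step in ("prepare", "run"):
        print(shlex.join(sysbench_cmd(step)))
```

The "prepare" step loads the data once; "run" then performs the one-hour measurement.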

The database was stored on several volume configurations: a single disk, two disks in RAID0 and two in RAID1, for both M.2 and U.2 drives. The file system was XFS. Results are given in operations per second, taken from the last line of the test log. Notably, the M.2 drives were so much slower than the U.2 drives that we present their results in separate charts.

For U.2 devices, this time as expected, the striped array takes the lead. The mirror, however, also outperforms a single drive. So if you need a fault-tolerant and fast configuration for a high-load database, Linux software RAID (mdadm) will not disappoint.
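The software arrays were built with mdadm and formatted with XFS. A minimal dry-run sketch of the equivalent commands follows; the device names, array name and mount point are hypothetical, and actually executing the printed commands would destroy any data on those drives.

```python
# Dry run: print the commands that would build a two-drive md array,
# format it with XFS and mount it. Device names and paths are hypothetical.
import shlex


def md_array_commands(level: int, devices: list[str], md: str = "/dev/md0",
                      mountpoint: str = "/mnt/db") -> list[list[str]]:
    """Commands to create an md RAID0/RAID1 array, make XFS on it and mount it."""
    if level not in (0, 1):
        raise ValueError("the tests used RAID0 and RAID1 only")
    return [
        ["mdadm", "--create", md, f"--level={level}",
         f"--raid-devices={len(devices)}", *devices],
        ["mkfs.xfs", md],
        ["mount", md, mountpoint],
    ]


if __name__ == "__main__":
    for level in (0, 1):
        for cmd in md_array_commands(level, ["/dev/nvme0n1", "/dev/nvme1n1"]):
            print(shlex.join(cmd))
        print()
```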

The M.2 drives behaved quite differently. Here a single disk takes the lead, while the software arrays are noticeably slower. In absolute terms, these drives were several times slower than the U.2 drives.

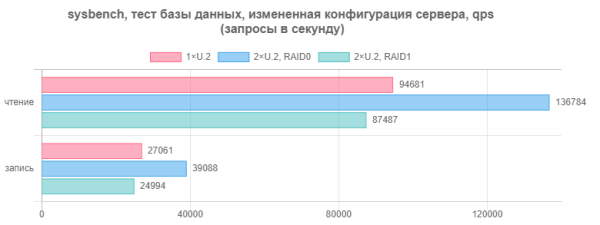

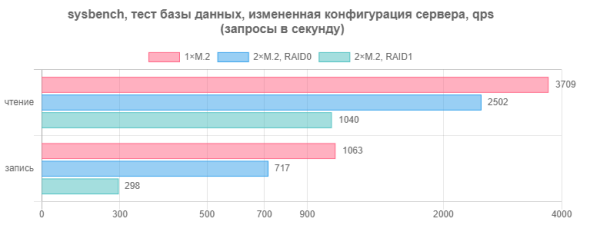

The second pair of charts was obtained with the innodb_buffer_pool_size parameter in /etc/mysql/mariadb.conf.d/50-server.cnf set to 96 GB (recall that the server had 128 GB of RAM in total).
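The setting is a one-line change in a MariaDB option file, which the server reads at startup. A minimal sketch that renders the fragment with Python's configparser and relates the value to RAM and to the roughly 256 GB data set; placing it in the [mysqld] option group is our assumption (it is one of the groups the MariaDB server reads).

```python
# Render an option-file fragment for the InnoDB buffer pool and relate its
# size to installed RAM and to the approximate sysbench data set.
import configparser
import io

RAM_GB = 128            # installed in the test server
BUFFER_POOL_GB = 96     # value used for the second pair of charts
DATASET_GB = 256        # approximate size of the test data set


def buffer_pool_fragment(size_gb: int) -> str:
    """Return an option-file fragment setting innodb_buffer_pool_size."""
    cfg = configparser.ConfigParser()
    cfg["mysqld"] = {"innodb_buffer_pool_size": f"{size_gb}G"}
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()


if __name__ == "__main__":
    print(buffer_pool_fragment(BUFFER_POOL_GB))
    print(f"Share of RAM: {BUFFER_POOL_GB / RAM_GB:.0%}")          # 75%
    print(f"Share of data set: {BUFFER_POOL_GB / DATASET_GB:.1%}")  # 37.5%
```

With about three-eighths of the data set able to stay in memory, a large share of reads no longer has to reach the drives, which may help explain the speedup reported in the following paragraphs.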

For configurations with U.2 drives, overall speed increased 3-4 times. The relative ranking also changed slightly: the striped array leads again, but second place now goes to the single disk, slightly ahead of the mirrored volume.

When the database is stored on M.2 drives, the gain was twofold for a single disk and one and a half times for the striped array, while the mirrored volume became even slower. As noted earlier, the M.2 slots on the motherboard will most likely be used for the operating system (unless hot-swappable system drives are required) rather than for intensive work with user data. This configuration handles that role without any problems, but it is still a bit of a waste of NVMe.

Conclusion

The arrival of another server equipment supplier is undoubtedly welcome: it is always more convenient to work with a local partner than to import equipment of this class on your own. The Hiper Server R3 — Advanced server we tested makes a good impression with its well-thought-out design, flexible configuration, and all the required interfaces and management functions.

This 2U solution offers two sockets for Intel Xeon processors, a large maximum RAM capacity, an efficient cooling system, room for full-size expansion cards including video cards, and powerful power supplies. Support for SATA, SAS and U.2 drives makes it versatile.

From a software standpoint, this platform differs little from other solutions in its class. It implements the usual remote management and monitoring standards, and we encountered no operating system compatibility issues.