The Asilan company, which has been on the market for more than ten years, supplies a wide range of IT equipment. Its competitive advantages include in-house expertise in modern hardware, which allows it to select the optimal solution for each client. In addition, direct supply contracts and its own warehouses allow it to reduce costs, shorten delivery times and maintain a high level of service. All equipment is thoroughly tested before delivery to the customer.

One of the company's key activities is supplying servers of its own production built from components by foreign manufacturers. The range covers all common form factors, from 1U to 4U, as well as tower models.

In this article we look at the Asilan AS-R200 server provided for testing. It is a general-purpose 2U server with two Intel Xeon Scalable processors and 25+2 bays for 2.5" drives.

Server hardware can be configured in many ways, covering processors, memory, storage and network interfaces, and customers usually specify the configuration to suit their own needs. The choice also takes into account delivery time, cost, the supplier's flexibility and competence, and warranty terms.

The manufacturer provides a three-year warranty on all equipment, covering transportation costs throughout Russia.

As for performance testing, this publication focuses on the server design and its operational features, and tests several storage configurations. Since the installed processor models are well known and their performance is widely documented, synthetic CPU benchmarks add little here.

Package contents

The server ships in a standard plain cardboard box of the kind used for rack-mount equipment; naturally, no special design is to be expected. One of the short sides carries stickers with identification data.

The box has double walls, and expanded polypropylene inserts protect the server during transport. Their shape follows the chassis, including cutouts for protruding parts. Overall, the packaging is done to a high standard, with no signs of cost-cutting, and is on par with that of well-known manufacturers in this segment.

Inside the box there is a separate cardboard box with the rails for rack installation. The package also includes two power cables (C13 to a grounded Euro plug), a set of screws and labels for the drive bays, and screws for mounting the server in a rack. In our case, a sheet describing the configuration and a report on pre-sale testing at the manufacturer's test bench were also enclosed. There was no documentation and no tools.

The rails have a modern tool-less design with snap latches. Interestingly, although they look identical, they are marked "right" and "left". In our opinion, their workmanship is slightly below that of well-known "white box" manufacturers, but we found no significant defects.

The design is standard: two outer members slide relative to each other, so the rails fit racks with a mounting depth from 77 to 93 cm. Next comes an intermediate member with linear bearing blocks on its outer and inner sides, and finally the inner rail, which attaches to the server and is additionally secured with countersunk screws.

Once mounted, the server can be pulled out by more than 70 cm; latches lock it in the extended position and are easy to release by hand when removing it. Low-profile servers like this one typically do without a dedicated cable management arm. Unfortunately, the rails cannot be additionally screwed to the rear rack posts because they lack the matching holes (the kit includes screws for this purpose, but there is nowhere to fit them). We also found that the rails would not fit a rack from an unknown manufacturer because its pin and hole sizes did not match, whereas installation in an APC NetShelter went without problems.

Appearance and design

The server has standard 2U dimensions for a 19-inch rack and is 65 cm deep. Note that the included rails extend beyond the chassis, so a cabinet or rack of appropriate depth is recommended. Appearance is not a priority for rack-mount equipment; reliability, ease of service and other practical features matter more.

The front panel houses 25 bays for 2.5″ drives, a common layout for maximizing storage density in 2U. With so many bays, however, there is no room left for indicators, buttons or connectors.

The manufacturer solved this by adding "ears" on both sides, each 2 cm wide and 2.5 cm deep. The left ear carries the power button, three LED indicators, a recessed reset button and an ID button for locating the server from either side of the rack. It also has a USB 3.0 port hidden behind a spring-loaded cover and a screw for securing the server to the rails.

The right ear has a VGA port, a second USB port and a second mounting screw, which makes it easy to connect a local monitor and keyboard. There is also a pull-out tag for server information. Note, however, that it does not carry the MAC address or the IPMI password; this information is printed on the motherboard.

Drives are installed in standard trays and secured with four screws from the underside. The trays are made of metal and plastic, with spring-loaded elements at the ends. Each tray shows two LED indicators on the front, and its release lever is held by a latch with a sliding lock. Overall, we have no complaints here, but be prepared to drive about a hundred screws (four per drive) when filling all the bays.

The rear panel layout is largely standard. On the left are two hot-swappable power supplies; there are no retention clips for the power cables.

Next to them are two more bays for 2.5″ drives (in most cases they will hold the operating system).

Then come the usual motherboard I/O panel and seven standard slots for low-profile expansion cards.

To remove the top cover and reach the server's internals, you need to release the latch on the top panel, which has an additional lock.

There are also holes at the rear of the sides for three small screws. Unfortunately, we did not find a motherboard diagram on the inside of the cover.

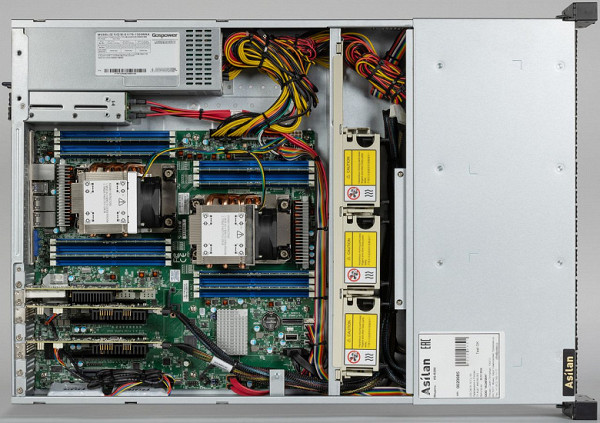

The internal layout is quite standard. The front of the chassis houses the drive bays and backplane, followed by the fan assembly and then the motherboard and power supplies.

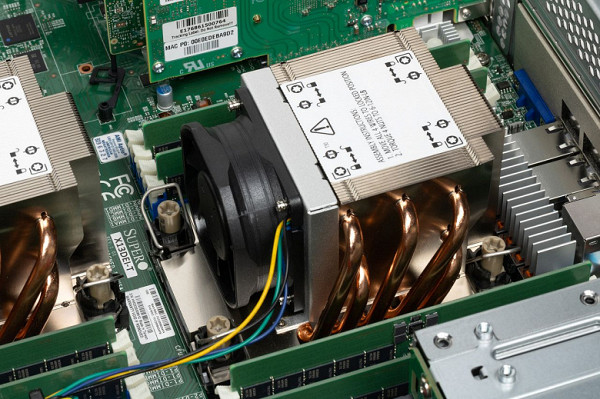

The main cooling consists of three Snowfan YY8038M12B fans (12 V, 1.8 A). They sit in quick-release carriers, use 4-pin connectors and are mounted to their frames through rubber inserts. The fans plug into three headers on the motherboard.

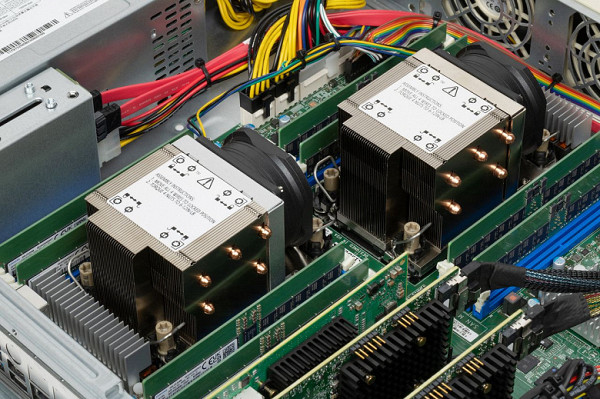

The processors use heat-pipe coolers with their own fans, which are also connected to the motherboard. There are no plastic air shrouds and no chassis intrusion sensor.

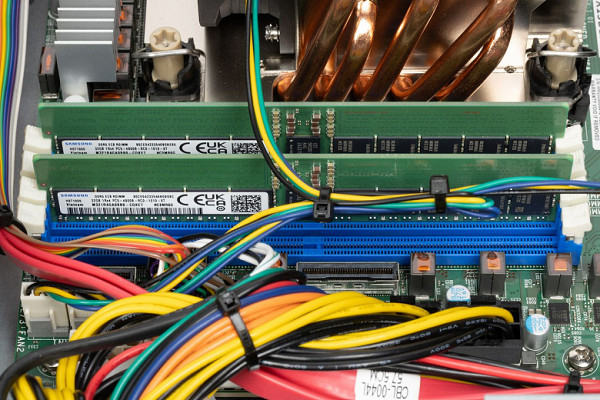

Overall, the build quality is acceptable, and we have no complaints about the appearance. A few points deserve attention, however. First, the drive cables are too long and the bundle of power cables is very bulky, probably because standard off-the-shelf components are used. Second, mounting the RAID controller batteries on the side wall of the chassis raises concerns.

Configuration

As noted earlier, the server configuration depends on the customer's requirements, which vary with the intended use, budget, power consumption and other factors. Here we describe the specific configuration of the unit supplied for review, which gives a general idea of the product.

A 2U chassis is today one of the most common and versatile options for servers. It accommodates two powerful processors and several expansion cards, and offers ample storage capacity and performance.

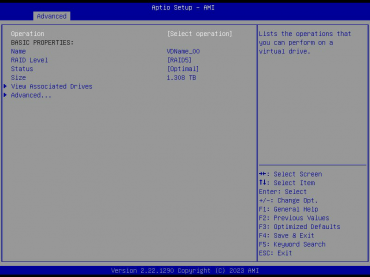

The AS-R200 is configured with 25 bays for 2.5-inch drives; other versions of the platform take 8 to 12 3.5-inch drives. A hybrid backplane connects the drives to the controllers. The first four bays support three interfaces: SATA, SAS and NVMe. NVMe, the fastest of them, uses dedicated ports that give each U.2 drive four PCIe lanes, far more bandwidth than SATA or SAS can offer. The remaining bays support SATA and SAS through a multiplexer, which reduces the number of cables and the number of controller ports required. The first four bays also go through the multiplexer when used with SATA or SAS drives.
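To put the interface comparison into numbers, here is a rough Python calculation of usable link bandwidth after line encoding. It assumes the U.2 bays run at PCIe 4.0 (the generation supported by the PM1733 drives and the MegaRAID 9560 controllers used here) and compares them with SATA 6 Gb/s and SAS 12 Gb/s; the link generations are assumptions, and protocol overhead above the physical layer is ignored, so real drives land somewhat lower.

```python
# Usable bandwidth per drive link after line encoding (rough sketch).
# Assumptions: PCIe 4.0 = 16 GT/s per lane with 128b/130b encoding;
# SATA 6 Gb/s and SAS-3 12 Gb/s use 8b/10b encoding.
# Protocol overhead above the physical layer is ignored.

def link_mb_per_s(gigatransfers: float, payload_bits: int, line_bits: int,
                  lanes: int = 1) -> float:
    """Return usable link bandwidth in MB/s (1 MB = 10**6 bytes)."""
    bits_per_s = gigatransfers * 1e9 * payload_bits / line_bits * lanes
    return bits_per_s / 8 / 1e6

LINKS = {
    "SATA 6 Gb/s": link_mb_per_s(6, 8, 10),
    "SAS-3 12 Gb/s": link_mb_per_s(12, 8, 10),
    "NVMe, PCIe 4.0 x4": link_mb_per_s(16, 128, 130, lanes=4),
}

if __name__ == "__main__":
    sata = LINKS["SATA 6 Gb/s"]
    for name, mbps in LINKS.items():
        print(f"{name:>18}: {mbps:7.0f} MB/s ({mbps / sata:4.1f}x SATA)")
```

The same function applied to the controllers' PCIe 4.0 x8 host link, `link_mb_per_s(16, 128, 130, lanes=8)`, gives about 15,754 MB/s, which sits above the 13,700 MB/s sequential-read figure stated for the controller.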

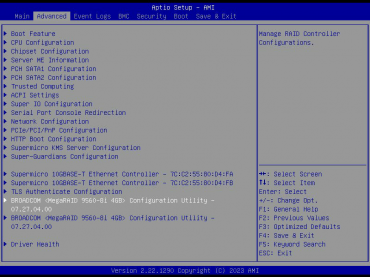

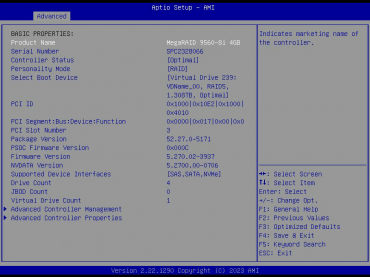

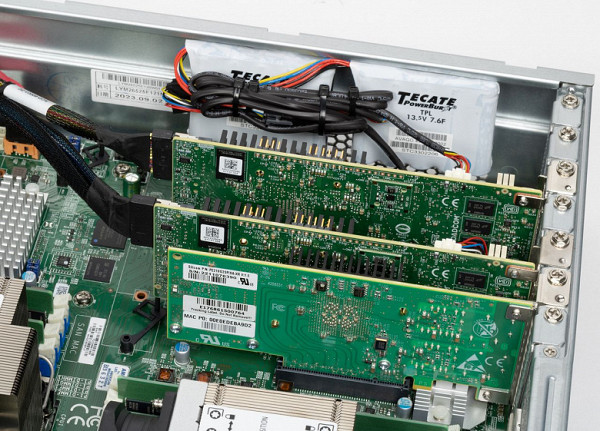

This server was equipped with four 480 GB Micron 5300 Pro SATA drives and two 1920 GB Samsung PM1733 NVMe (U.2) drives. They were served by two current-generation Broadcom (LSI) MegaRAID 9560-8i RAID controllers.

One RAID controller handled the SATA drives and the other the U.2 drives. Both have a PCIe 4.0 x8 host interface and 4 GB of cache memory, and support a cache protection unit. In this configuration, these units were installed and fixed to the side wall of the server. Each controller supports up to 240 SATA/SAS drives and up to 32 NVMe drives, and connects to the backplane through a single internal SFF-8654 connector.

Drivers and management utilities are available for most modern operating systems. The controller supports all common RAID levels: 0, 1, 5, 6, 50 and 60. Stated peak performance is up to 13,700 MB/s for sequential reads with 256 KB blocks and up to 3 million IOPS for random reads with 4 KB blocks. Power consumption according to the documentation is 9.64 W. Overall, the storage subsystem is notably flexible: it can combine large capacity on SATA/SAS drives with high speed on NVMe drives, and the RAID level can be chosen to match fault tolerance requirements.

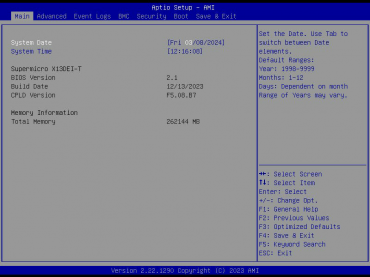

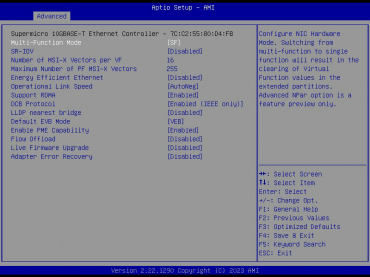

Most of the remaining characteristics are determined by the motherboard, a Supermicro X13DEI-T. It uses the Intel C741 chipset and an Aspeed AST2600 BMC.

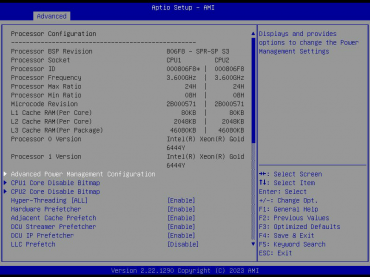

The board has two LGA-4677 sockets compatible with fourth- and fifth-generation Intel Xeon Scalable processors, which offer up to 64 cores and a TDP of up to 350 W. The test unit was fitted with two Intel Xeon Gold 6444Y processors: 16 cores/32 threads, 3.6 GHz base frequency, 4 GHz maximum turbo frequency, 45 MB cache, 270 W TDP and 80 PCIe 5.0 lanes.

The motherboard has 16 slots for DDR5-5600 3DS ECC RDIMM modules, with a maximum capacity of 4 TB. The test configuration used eight 32 GB Samsung DDR5-4800 modules (M321R4GA0BB0-CQKET), for a total of 256 GB.
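The memory figures can be cross-checked with a little arithmetic. The sketch below derives the largest module size implied by the 4 TB limit and shows how the eight installed modules populate the board. The channel count (eight DDR5 channels per socket, one DIMM per channel) and an even split of modules between the two sockets are assumptions, not details stated in the review.

```python
SOCKETS = 2
SLOTS = 16
BOARD_MAX_GB = 4 * 1024    # "up to 4 TB" from the board specification
CHANNELS_PER_SOCKET = 8    # assumption: 8 DDR5 channels per socket, 1 DIMM per channel

def memory_summary(modules: int, module_gb: int) -> dict:
    """Capacity and channel population, assuming modules are split evenly between sockets."""
    per_socket = modules // SOCKETS
    return {
        "max_module_gb": BOARD_MAX_GB // SLOTS,  # largest module the 4 TB limit implies
        "installed_gb": modules * module_gb,
        "free_slots": SLOTS - modules,
        "channels_used_per_socket": per_socket,
        "channel_fill": per_socket / CHANNELS_PER_SOCKET,
    }

if __name__ == "__main__":
    # The review unit: eight 32 GB modules.
    print(memory_summary(modules=8, module_gb=32))
```

Under these assumptions, the review unit uses half of each socket's memory channels, so adding eight more identical modules would double capacity to 512 GB and populate every channel.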

The board also has SATA ports, which serve the two additional rear bays for operating system SSDs. Two M.2 slots are available as well; drives in them are not hot-swappable, but they are faster.

The onboard network controller is based on the Broadcom BCM57416 chip and provides two 10 Gbps RJ45 ports. In addition, a dual-port 10 Gbps SFP+ network card based on an Intel controller, an analogue of the Intel X520-DA2, was installed.

Thanks to the 2U format and standard chassis design, all of the board's slots (two PCIe 5.0 x8 and four PCIe 5.0 x16) can take low-profile expansion cards. In this configuration, three of them are already occupied: two by the RAID controllers and one by the network card.

The two additional rear 2.5″ SATA bays hold two Micron SSDs used as operating system drives. They are connected to the corresponding SATA ports on the motherboard.

The rear panel also has a DB9 serial port, four USB 3.0 ports, an IPMI network port, the two 10 Gbps ports of the onboard NIC and a VGA port. A recessed BMC ID/Reset button sits in the lower right corner of the I/O panel.

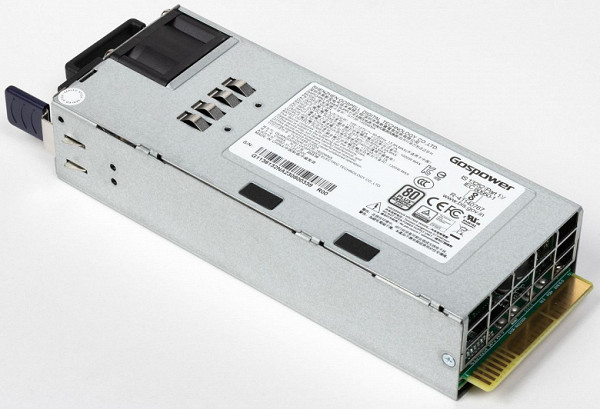

The server is powered by two redundant, hot-swappable power supplies made by Gospell Digital Technology, model Gospower G1136-1300WNA. At 220 V input, each provides up to 1300 W of output power.

Given the platform's capabilities, this will be more than enough for most possible configurations. The power cables connect through standard C14 inlets, and there is no cable retention. It would be unfair to judge the quality of these units from a short test of a single server, but they raised no concerns during testing. The system correctly handled the failure of one unit and its subsequent hot replacement.

It is not possible to single out the cost of the bare platform for comparison with similar solutions, since the server is only supplied as a complete system.

Installation and configuration

Remote monitoring and management are important for server equipment. Here they are provided by the Supermicro platform and leave nothing to complain about. Through the dedicated IPMI port or a shared network port, you get full access to the server, from the BIOS to the operating system desktop. Note that some features require an additional license.

The web interface works in any browser and is available in English. It lets you monitor the server through its built-in sensors, such as temperatures, voltages and fan speeds. The only drawback is that power consumption cannot be read remotely via IPMI, although it is shown in the web interface.
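For scripted monitoring outside the web interface, sensor readings are commonly pulled with the `ipmitool sensor` command, which prints a pipe-separated table (name, reading, unit, status, then thresholds). The Python sketch below parses that text and lists analog sensors whose status is not `ok`. The column layout is assumed from typical ipmitool output; the sketch does not talk to the BMC itself, it only processes captured command output.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Reading:
    name: str
    value: Optional[float]  # None when the reading is "na" or a discrete hex code
    unit: str
    status: str             # e.g. "ok", "nc" (non-critical), "cr" (critical), "na"

def parse_sensor_table(text: str) -> List[Reading]:
    """Parse `ipmitool sensor` output: name | reading | unit | status | thresholds..."""
    readings = []
    for line in text.splitlines():
        cols = [c.strip() for c in line.split("|")]
        if len(cols) < 4:
            continue  # skip blank or unexpected lines
        name, raw, unit, status = cols[:4]
        try:
            value = float(raw)
        except ValueError:
            value = None  # "na" or a hex value such as "0x0"
        readings.append(Reading(name, value, unit, status))
    return readings

def alerts(readings: List[Reading]) -> List[Reading]:
    """Analog sensors whose status is anything other than 'ok'."""
    return [r for r in readings if r.value is not None and r.status != "ok"]
```

For example, on a host with ipmitool installed: `alerts(parse_sensor_table(subprocess.run(["ipmitool", "sensor"], capture_output=True, text=True).stdout))`.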

The interface can also show the configuration of processors, memory and power supplies. However, it cannot inventory expansion cards or drives (not even those attached to the motherboard). Built-in notifications are available via SNMP and email.

There are two ways to access the operating system console: an HTML5 console in the browser or a Java-based client. Since virtual drives can be attached remotely, you can install or update the operating system without physical access to the server.

The motherboard's BIOS Setup is largely standard. The most frequently used sections are BMC network settings, OS boot options, and storage and local network settings.

One notable feature is the ability to manage add-in RAID controllers directly from BIOS Setup: you can create drive configurations, check their status, run a battery test and so on. Network controllers can be configured the same way if they support this feature.

Testing

For testing, the server was installed in a rack together with a UPS, air conditioning and a switch. We installed the Proxmox virtualization platform on it so that we could run real workloads without tying them to the host operating system.

The maximum values recorded under load were as follows: total power consumption was 800 W, the first processor reached 80 °C, the second 95 °C, and the memory modules and VRMs 65 °C. The processor cooler fans ran at 11,400 rpm and the chassis fans at 8,400 rpm.
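A quick check of power supply headroom, using the 800 W peak recorded above and the 1300 W rating of each unit. In a 1+1 redundant setup one unit must be able to carry the whole load alone. This sketch assumes the load is shared evenly while both units work; if the 800 W figure was measured at the input, the DC load on the supplies is somewhat lower, so the ratios are upper bounds.

```python
PSU_RATED_W = 1300      # per unit at 220 V input, from the PSU specification
PEAK_MEASURED_W = 800   # peak total consumption recorded under load

def psu_load(total_w: float, working_units: int, rated_w: float = PSU_RATED_W) -> float:
    """Fraction of rated output each working unit carries, assuming even load sharing."""
    return total_w / (rated_w * working_units)

if __name__ == "__main__":
    print(f"both units sharing: {psu_load(PEAK_MEASURED_W, 2):.1%} each")
    print(f"one unit after a failure: {psu_load(PEAK_MEASURED_W, 1):.1%}")
```

Even with one unit failed, the recorded peak stays at roughly 62% of a single supply's rating, which is consistent with the failover behaving correctly during testing.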

Overall, we have few comments apart from the higher temperature of the second processor, which sits closer to the rear panel and probably receives air already warmed by the first. Air shrouds to direct the airflow might improve the situation.

In the tested configuration only six drives were installed in the front bays, so we cannot say much about a fully populated server. Note also that there is no fan on the right side of the chassis, which, together with the dense cable bundle, may lead to worse cooling there.

In the configuration tested, SATA drive temperatures did not exceed 35 °C and U.2 drive temperatures 50 °C. The RAID controller batteries stayed below 33 °C, and the controllers themselves did not exceed 50 °C.

The SATA drives in the rear bays reached a maximum of 55 °C.

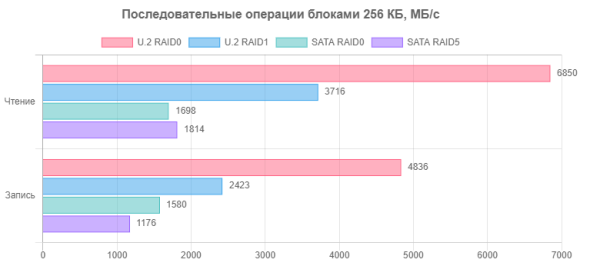

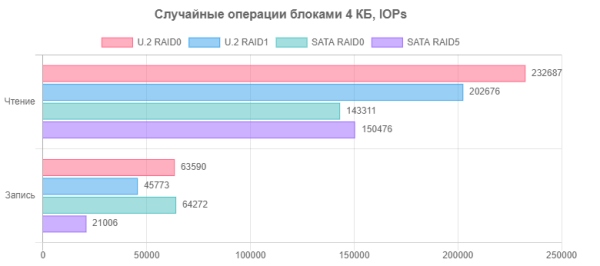

The first set of storage tests measured block device performance with fio. We tested RAID0 and RAID5 arrays of the four SATA drives and RAID0 and RAID1 arrays of the two U.2 drives. The tests covered sequential reads and writes in 256 KB blocks (matching the array's stripe size) and random reads and writes in 4 KB blocks. Each test used 16 threads and ran for 10 minutes.
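The fio runs described above can be reproduced roughly with a job file like the one this Python helper generates. Block sizes, the 16 parallel jobs and the 10-minute runtime come from the text; the device path, `direct=1`, the `libaio` engine and the queue depth of 32 are assumptions, since the review does not state them. Note that the write jobs overwrite the target device.

```python
# Hypothetical helper: build a fio job file for the four workloads in the text.
WORKLOADS = [
    ("seq-read", "read", "256k"),
    ("seq-write", "write", "256k"),
    ("rand-read", "randread", "4k"),
    ("rand-write", "randwrite", "4k"),
]

def fio_job_file(device: str, jobs: int = 16, runtime_s: int = 600, iodepth: int = 32) -> str:
    """Return fio job-file text; the write jobs DESTROY data on `device`."""
    lines = [
        "[global]",
        f"filename={device}",
        "direct=1",            # assumption: bypass the page cache
        "ioengine=libaio",     # assumption: Linux native async I/O
        f"iodepth={iodepth}",  # assumption: queue depth is not stated in the review
        "thread",              # run the jobs as threads rather than forked processes
        f"numjobs={jobs}",     # 16 parallel workers, as in the text
        f"runtime={runtime_s}",
        "time_based",          # keep going for the full runtime
        "group_reporting",     # aggregate all workers into one result per workload
        "",
    ]
    for name, rw, bs in WORKLOADS:
        # stonewall: wait for the previous workload to finish before starting this one
        lines += [f"[{name}]", f"rw={rw}", f"bs={bs}", "stonewall", ""]
    return "\n".join(lines)

if __name__ == "__main__":
    print(fio_job_file("/dev/sdX"))  # /dev/sdX is a placeholder for the RAID volume
```

Save the output as, say, `array.fio` and run `fio array.fio`. Because of `stonewall`, the four workloads run one after another instead of overlapping. For the sequential jobs, fio's `offset_increment` option can spread the 16 workers across the device instead of having them all start at offset 0.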

The U.2 arrays behind the RAID controller can be compared with the figures stated for a single drive: 7000 MB/s for reads and 2400 MB/s for writes. As expected, the striped array writes noticeably faster, and the mirror shows no loss in write performance. Reads are another matter: the striped array only reaches single-drive speed, and the mirror is unexpectedly slower.

For SATA drives, RAID significantly increases overall performance. A single drive is rated at 540 MB/s for reads and 410 MB/s for writes, and a four-drive array scales sequential throughput almost in proportion to the number of drives for both RAID0 and RAID5 (interestingly, RAID5 reads even faster than RAID0).
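One way to read these sequential results is to compare them with an idealized scaling model. The sketch below uses a common textbook approximation (striping multiplies both reads and writes, mirroring multiplies reads but not writes, RAID5 loses one drive's worth of throughput to parity), the datasheet figures quoted above, and an optional ceiling for the controller's stated 13,700 MB/s. It ignores caching, stripe alignment and controller overhead, so it only bounds what an array could do.

```python
from typing import Optional, Tuple

def ideal_seq_mb_s(level: str, n: int, read: float, write: float,
                   cap: Optional[float] = None) -> Tuple[float, float]:
    """Idealized (read, write) MB/s for an n-drive array (textbook model)."""
    if level == "raid0":
        r, w = n * read, n * write
    elif level == "raid1":
        # Mirror: reads can be spread over all copies (given parallel requests),
        # but every write must go to every copy.
        r, w = n * read, write
    elif level == "raid5":
        # One drive's worth of each stripe holds parity.
        r, w = (n - 1) * read, (n - 1) * write
    else:
        raise ValueError(f"unsupported level: {level}")
    if cap is not None:
        r, w = min(r, cap), min(w, cap)
    return r, w

U2 = (7000, 2400)       # Samsung PM1733, datasheet MB/s (read, write) as quoted
SATA = (540, 410)       # Micron 5300 Pro, datasheet MB/s (read, write) as quoted
CONTROLLER_CAP = 13_700 # stated controller maximum for sequential reads

CASES = [
    ("U.2 RAID0", "raid0", 2, U2),
    ("U.2 RAID1", "raid1", 2, U2),
    ("SATA RAID0", "raid0", 4, SATA),
    ("SATA RAID5", "raid5", 4, SATA),
]

if __name__ == "__main__":
    for label, level, n, (rd, wr) in CASES:
        r, w = ideal_seq_mb_s(level, n, rd, wr, cap=CONTROLLER_CAP)
        print(f"{label:<11} ideal read {r:>6.0f} MB/s, write {w:>5.0f} MB/s")
```

By this model, a two-drive U.2 stripe could read at up to the controller's 13,700 MB/s, so the single-drive read level observed in the test points to a limitation in the controller path rather than in the drives.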

In real-world workloads, especially with SSDs, random operations with small blocks also matter. RAID arrays, however, are rarely very efficient in this scenario.

Indeed, working through a RAID controller significantly reduces the performance of NVMe devices. A single drive is rated at 800,000 IOPS for reads and 100,000 IOPS for writes. Yet both arrays only slightly exceed 200,000 IOPS on reads, and on writes they reach about 65,000 IOPS (RAID0) and 45,000 IOPS (RAID1). More drives might yield higher figures, but the controller's claimed 3,000,000 IOPS for random reads and 240,000 IOPS for random writes look very doubtful.

The SATA drives are rated at 85,000 IOPS for reads and 36,000 IOPS for writes. Using four drives raises read performance, but write performance improves only with the striped array.
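The random-I/O pattern fits the classic write-penalty model: each logical random write costs one back-end write on RAID0, two on RAID1 (both copies) and four on RAID5 (read data, read parity, write data, write parity). The sketch below applies that textbook model to the datasheet IOPS quoted above and compares it with the approximate NVMe results from the text. It ignores controller cache and queueing, so it is an idealization, not a prediction.

```python
from typing import Tuple

# Back-end I/Os per logical random write (textbook values).
WRITE_PENALTY = {"raid0": 1, "raid1": 2, "raid5": 4, "raid6": 6}

def ideal_random_iops(level: str, n: int, read_iops: float,
                      write_iops: float) -> Tuple[float, float]:
    """Idealized 4 KB random (read, write) IOPS for an n-drive array."""
    if level not in WRITE_PENALTY:
        raise ValueError(f"unsupported level: {level}")
    # Every member can serve reads; each logical write fans out into `penalty` drive I/Os.
    return n * read_iops, n * write_iops / WRITE_PENALTY[level]

def efficiency(measured: float, ideal: float) -> float:
    return measured / ideal

U2 = (800_000, 100_000)  # PM1733 datasheet (read, write) IOPS, as quoted above
SATA = (85_000, 36_000)  # Micron 5300 Pro datasheet (read, write) IOPS, as quoted above

if __name__ == "__main__":
    # Approximate NVMe results as described in the text.
    measured = {"raid0": (200_000, 65_000), "raid1": (200_000, 45_000)}
    for level, (m_read, m_write) in measured.items():
        i_read, i_write = ideal_random_iops(level, 2, *U2)
        print(f"U.2 {level}: read {efficiency(m_read, i_read):.1%} of ideal, "
              f"write {efficiency(m_write, i_write):.1%} of ideal")
```

For the SATA RAID5 array the model predicts no random-write gain over a single drive (4 × 36,000 / 4 = 36,000 IOPS), which matches the observation that only the striped array improves writes. For the NVMe arrays, about 200,000 read IOPS against an ideal 1,600,000 corresponds to roughly 12.5% efficiency.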

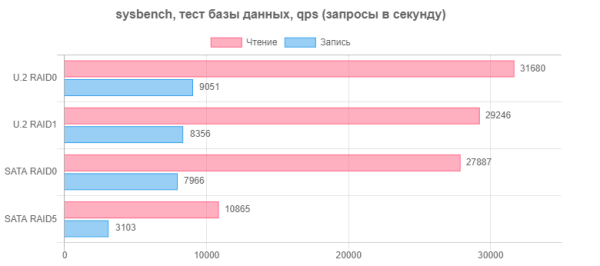

The second test was a database benchmark run with sysbench. The main parameters were 100 tables of 10,000,000 rows each, with a total data size of about 256 GB. The test ran in 32 threads for one hour. The charts show the values from the last line of the test log.
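For readers who want to run a similar test, the Python helper below assembles sysbench 1.x command lines with the parameters given above (100 tables of 10,000,000 rows, 32 threads, one hour). The review does not name the exact Lua script or connection settings, so `oltp_read_write`, the database and user names, and the 10-second report interval are assumptions; add `--mysql-password` or socket options as your setup requires.

```python
import shlex

PHASES = ("prepare", "run", "cleanup")

def sysbench_cmd(phase: str, script: str = "oltp_read_write", tables: int = 100,
                 table_size: int = 10_000_000, threads: int = 32, seconds: int = 3600,
                 db: str = "sbtest", user: str = "sbtest") -> str:
    """Build a sysbench 1.x command line for the MySQL/MariaDB driver."""
    if phase not in PHASES:
        raise ValueError(f"phase must be one of {PHASES}")
    args = [
        "sysbench", script,
        "--db-driver=mysql",
        f"--mysql-db={db}",        # assumed database name
        f"--mysql-user={user}",    # assumed user name
        f"--tables={tables}",
        f"--table-size={table_size}",
        f"--threads={threads}",
    ]
    if phase == "run":
        # Duration and periodic progress lines only matter for the measured run.
        args += [f"--time={seconds}", "--report-interval=10"]
    args.append(phase)
    return shlex.join(args)

if __name__ == "__main__":
    for phase in ("prepare", "run"):
        print(sysbench_cmd(phase))
```

As a sanity check on the data size: about 256 GB spread over 100 × 10,000,000 = 1,000,000,000 rows is roughly 256 bytes per row, indexes included.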

The first chart shows that the RAID level of the NVMe array has virtually no effect on the result. SATA drives in a striped array come close to NVMe performance, whereas RAID5 reduces performance significantly.

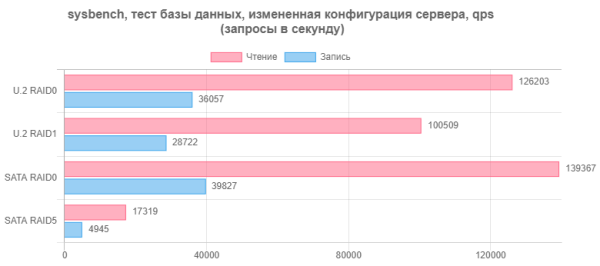

Given the large amount of RAM in our server, it makes sense to tune the database parameters that control memory use. Of the available options, we chose innodb_buffer_pool_size in /etc/mysql/mariadb.conf.d/50-server.cnf and set it to 128 GB. This changed the results significantly, as the following chart shows.
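The tuning step amounts to one line in the `[mysqld]` section of that file. The Python helper below sets an option in a MariaDB/MySQL option file given as text, replacing an existing or commented-out line, or adding it if missing. It is a sketch that assumes a plain INI-style file (no `!include` handling, no dash-spelled option names); changes made in the file take effect after the database service is restarted.

```python
import re

HEADER = re.compile(r"^\s*\[([^\]]+)\]\s*$")

def set_option(cnf_text: str, key: str, value: str, section: str = "mysqld") -> str:
    """Return cnf_text with `key = value` set inside [section].

    An existing line for the key, or a commented-out one such as
    '#innodb_buffer_pool_size = 8G', is replaced; otherwise the line is
    added at the end of the section, or a new section is appended.
    Only the underscore spelling of the key is recognized.
    """
    option = re.compile(rf"^\s*#?\s*{re.escape(key)}\s*=")
    new_line = f"{key} = {value}"
    out, in_section, done = [], False, False
    for line in cnf_text.splitlines():
        header = HEADER.match(line)
        if header:
            if in_section and not done:
                out.append(new_line)  # section ended without the key: add it here
                done = True
            in_section = header.group(1).strip() == section
        elif in_section and not done and option.match(line):
            line, done = new_line, True
        out.append(line)
    if not done:
        if in_section:
            out.append(new_line)                    # target section was the last one
        else:
            out += ["", f"[{section}]", new_line]   # section not found: create it
    return "\n".join(out) + "\n"
```

For example, `p.write_text(set_option(p.read_text(), "innodb_buffer_pool_size", "128G"))` with `p = Path("/etc/mysql/mariadb.conf.d/50-server.cnf")`, ideally after taking a backup of the file.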

With this setting, the performance of the SATA arrays increased several times (except for the parity array), and the striped SATA array even outperformed the NVMe drives. Note that in this case the drives are connected through a RAID controller, which has its own processor and request-handling algorithms.

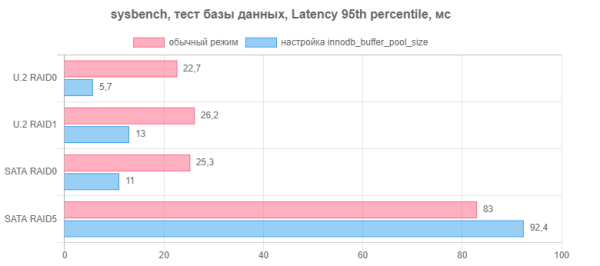

Besides the number of operations per second, response time also matters. The test reports latency, which is also shown in the chart.
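Since the charts are built from the last line of the log, extracting throughput and latency can be scripted. The sketch below assumes sysbench 1.x was run with `--report-interval`, which prints progress lines containing the elapsed time, `tps:`, `qps:` and `lat (ms,95%):` fields, and returns the values from the last such line. It illustrates the parsing only and is not the tooling used for this review.

```python
import re
from typing import Optional

# Matches sysbench 1.x --report-interval progress lines, e.g.
# "[ 3600s ] thds: 32 tps: <n> qps: <n> (r/w/o: ...) lat (ms,95%): <n> err/s: ..."
INTERVAL = re.compile(
    r"\[\s*(?P<t>\d+)s\s*\].*?tps:\s*(?P<tps>[\d.]+)\s+qps:\s*(?P<qps>[\d.]+)"
    r".*?lat \(ms,(?P<pct>[\d.]+)%\):\s*(?P<lat>[\d.]+)"
)

def last_interval(log: str) -> Optional[dict]:
    """Return time, tps, qps and percentile latency from the last progress line, or None."""
    last = None
    for line in log.splitlines():
        m = INTERVAL.search(line)
        if m:
            last = m
    if last is None:
        return None
    return {
        "t_s": int(last["t"]),
        "tps": float(last["tps"]),
        "qps": float(last["qps"]),
        "lat_percentile": float(last["pct"]),
        "lat_ms": float(last["lat"]),
    }
```

For example, `last_interval(Path("sysbench_run.log").read_text())`, where the log file name is hypothetical.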

The setting reduced response time for the "well-behaved" arrays by a factor of two or more. For the SATA RAID5 array, however, the results got worse.

We also present graphs showing how this setting affects system activity and resource consumption during the test.

Without enlarging the buffer pool, the load stabilizes soon after the test starts: CPU utilization is slightly above 40%, with about 28,000 read and 8,000 write operations per second. After the hour-long test ends, the system keeps "cleaning up" for roughly another half hour, with light CPU load and active use of the disk array.

With the larger innodb_buffer_pool_size, the CPU is much less loaded, memory use grows gradually, disk reads occur only at the beginning, and the write load is similar at about 7,500 operations per second. After the test, all resources except RAM are released; the memory stays allocated until the database service is restarted.

The storage test results are instructive and can be useful when designing modern servers. A RAID controller with U.2 drives lets you build a fast, high-capacity block device. However, the extra layer, at least in the tested version, does not let the drives reach their full potential: even allowing for the fact that the server had only two of them, the simple striped array left much to be desired. Running SATA drives through the controller looks more attractive: despite their slower interface, they are affordable and can be installed in larger numbers. This is a fairly effective way to build an array that combines high speed and large capacity. Although such an array may trail NVMe drives in random operations, its performance may well be sufficient for many applications.

Still, even a simple database workload shows that block-device benchmarks do not translate directly into application performance. For example, the SATA parity array performed poorly in this task.

Optimization recipes are not universal either: the same buffer pool tuning made the SATA RAID5 array perform worse, which highlights the need for careful analysis and tuning for each specific application.

Thus, the test results show that ultimately the choice of the optimal server configuration depends on the specific application and data requirements.

Conclusion

Our experience with the Asilan AS-R200 server was positive. It belongs to the segment of general-purpose servers that can be configured in many ways to suit customer requirements, and it is suitable for compute workloads, databases, virtualization and all-flash storage. When choosing such a product, the manufacturer's service, warranty and support often matter more than benchmark results, but we could not evaluate these aspects. Our communication with company representatives while preparing this review was positive and productive.

The Asilan AS-R200 has a versatile design: two processor sockets, 16 memory slots, seven expansion slots, 25 drive bays (four of which support U.2) and two additional bays for SATA system drives. A motherboard from a well-known manufacturer should provide long-term stability. The server supports industry standards for remote management and monitoring and is compatible with modern server operating systems.

Building a server yourself is possible, but it only makes sense if you have qualified staff and are prepared to handle configuration, service and warranty issues. If you want a ready-made, reliable solution with predictable service times, Asilan is a sensible choice.